davide sorasio

Posts: 94

Posts posted by davide sorasio

-

Hi guys,

I was wondering if anybody could give me a scientific explanation of how focal length doublers work and why they cause a two-stop loss.

Thanks in advance
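For reference, the usual idealized explanation: a 2x extender magnifies the image the prime forms, doubling the focal length while the entrance pupil diameter D stays the same. Since the f-number is N = f/D, N doubles too, and because image illuminance falls as 1/N², doubling N cuts the light to a quarter, i.e. two stops. A minimal sketch under that assumption (it ignores the extender's own glass losses, so a real T-stop loss can be slightly larger):

```python
import math


def extender_effect(focal_mm: float, f_number: float, magnification: float):
    """Idealized effect of a rear focal-length extender.

    Assumes the extender multiplies the focal length by `magnification`
    while the entrance pupil diameter D of the prime stays unchanged.
    Returns (new focal length, new f-number, stops of light lost).
    """
    pupil_mm = focal_mm / f_number            # D = f / N
    new_focal_mm = focal_mm * magnification   # the extender magnifies the image
    new_f_number = new_focal_mm / pupil_mm    # same D, longer f -> bigger N
    # Image illuminance scales with 1/N^2, so the loss is log2((N'/N)^2) stops.
    stops_lost = 2 * math.log2(new_f_number / f_number)
    return new_focal_mm, new_f_number, stops_lost


focal, n, loss = extender_effect(100, 2.8, 2.0)
print(f"{focal:.0f}mm at f/{n:.1f}, {loss:.1f} stops lost")  # 200mm at f/5.6, 2.0 stops lost
```

By the same formula, a 1.4x extender costs roughly one stop.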

-

Hello everyone, maybe this is a silly question but it has been in my head for a while.

Once I have a close and far focus chart for a certain focal length, could it be used for the same focal length in another set of lenses that have the same T-stop when wide open? For example, if I shoot with the same settings on the same camera with a 75mm Master Prime and a +1 diopter, would I get the same far and close focus if I switched to another lens with the same wide-open T-stop?

And if not, which factors besides the diopter's strength influence close and far focus with diopters?

Thanks in advance
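One rough way to reason about it, assuming the common thin-lens approximation with the diopter sitting right against the lens: with a diopter of power P (in dioptres, 1/m) and the barrel set to distance d, the sharp object distance becomes u = 1/(P + 1/d). Under that approximation neither the T-stop nor the focal length appears; what moves the limits is the diopter strength and the focus range of the particular lens, above all its close focus. The T-stop still changes depth of field around that point, and real lenses and diopter spacing will deviate from the ideal, so this is an estimate, not a replacement for a tested chart. The close-focus values below are hypothetical:

```python
import math


def diopter_focus_m(diopter_power: float, lens_focus_m: float) -> float:
    """Object distance (m) that is sharp with a close-up diopter added.

    Thin-lens approximation, diopter in contact with the lens:
        1/u = P + 1/d
    P = diopter power in dioptres (1/m), d = distance set on the lens barrel.
    Pass math.inf for a lens set to infinity.
    """
    return 1.0 / (diopter_power + 1.0 / lens_focus_m)


# Far limit: lens at infinity. Near limit: lens at its close focus.
# The 0.5 m and 0.8 m close-focus values are hypothetical, not real lens specs.
for name, close_focus_m in [("lens A", 0.5), ("lens B", 0.8)]:
    far = diopter_focus_m(1.0, math.inf)
    near = diopter_focus_m(1.0, close_focus_m)
    print(f"{name} with +1: far {far:.2f} m, near {near:.2f} m")
```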

-

Hi everybody, I've never worked with probe lenses and I was wondering if anybody could give me some info: how they work, how they differ from normal lenses, whether the focus mechanism is the same, etc.

Thanks so much in advance!

-

Hi everybody, I'm doing a checkout tomorrow and we are checking two 24mm lenses, one of which is detuned. What does that mean?

Thank you in advance for the help!

-

Hello everybody, next week will be my first time working with a Cinetape and I'd like to know if there are any tips you could give me to take full advantage of it (like adjusting the height according to the kind of shot, etc.). Thank you

-

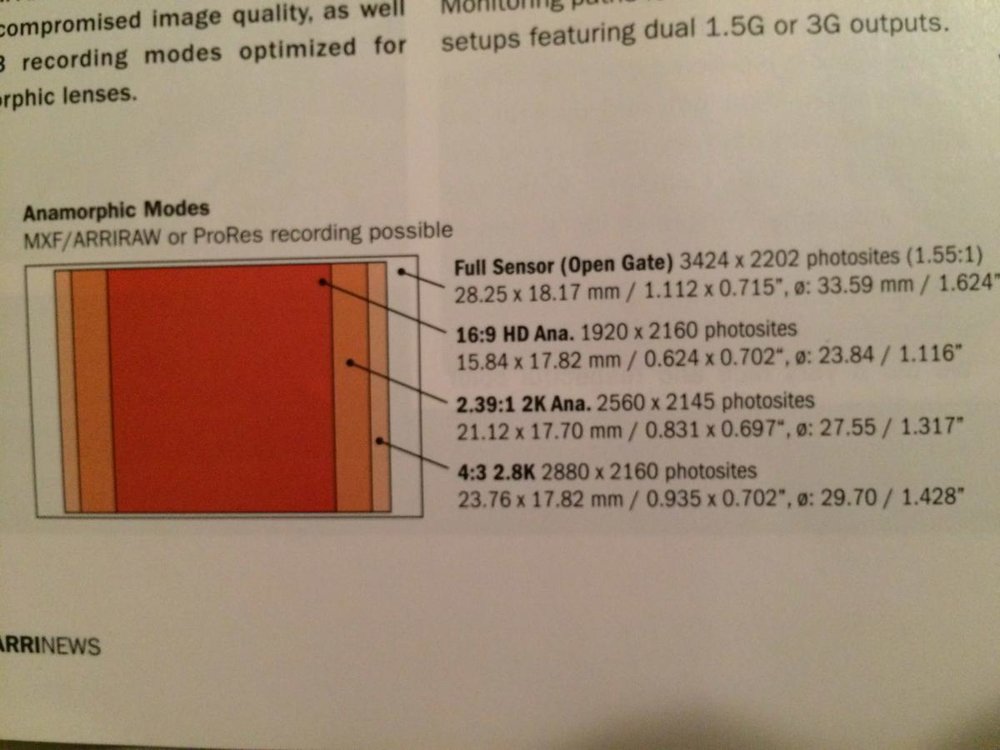

Thanks a lot David, this helps me a lot! But I still have some doubts. If you look at the attached image, taken from the Arri NAB 2016 issue, where they talk about SUP 4.0 and the possibility of shooting Open Gate and anamorphic on the sensor, I cannot understand why 16:9 is not wider than 4:3!

16:9 means 1.78:1, so the height is 1 and the width is 1.78 times the height. 4:3 means 1.33:1, so it should be closer to a "square", but if you look at the attached image it's kind of the opposite, with 4:3 wider than 16:9.
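A quick way to see how both readings can be true: an aspect ratio fixes only the shape (width divided by height), not the absolute size. The recording areas below are hypothetical, not ARRI's actual figures; they show that a 4:3 area can be both "squarer" and physically wider than a 16:9 area if it uses more photosites in both directions, and that a 2x anamorphic squeeze turns a 4:3 capture into a very wide image:

```python
from fractions import Fraction

# Hypothetical recording areas in photosites (width, height), NOT real ARRI specs.
AREAS = {
    "16:9 area": (2880, 1620),
    "4:3 area": (3000, 2250),
}


def aspect_ratio(width: int, height: int) -> Fraction:
    """Aspect ratio as width / height: 16:9 ~ 1.78:1, 4:3 ~ 1.33:1."""
    return Fraction(width, height)


for name, (w, h) in AREAS.items():
    print(f"{name}: {w} x {h} photosites, {float(aspect_ratio(w, h)):.2f}:1")

# With a 2x anamorphic lens, the 4:3 capture desqueezes to a much wider image.
print(f"4:3 with 2x squeeze: {float(aspect_ratio(3000, 2250) * 2):.2f}:1")
```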

What am I not understanding?

-

What's the difference/advantage between setting knob limits and motor limits on a wireless follow focus? Maybe it's a silly question, but I cannot figure out the difference: the WCU-4 gives you the option to set either motor limits or knob limits.

-

Hi everybody, I'm looking for more explanation about shooting Open Gate. Arri's website says that shooting ARRIRAW Open Gate gives you a frame size of approximately 3.4K with a 1.55:1 aspect ratio. Why is that an important feature? Is it to gain more pixels by recording on a bigger area of the sensor? Is this mode especially convenient with anamorphic lenses? And is that aspect ratio the "final" aspect ratio of the image?

Also, in the latest American Cinematographer, the DP, talking about having shot ARRIRAW Open Gate with anamorphic lenses and then switching to spherical lenses without Open Gate, said: "we wanted to get really close to the actor, so we used spherical lenses and only part of the sensor so it was easier to shoot and be more flexible". I do not see the relationship between shooting or not shooting Open Gate and the ability to get closer to a subject.
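Some back-of-the-envelope numbers, assuming the commonly quoted ALEXA XT figures of 3414 × 2198 photosites for Open Gate and 2880 × 1620 for 16:9 (check ARRI's documentation for your camera and firmware). The first lines show where the 1.55:1 and the extra pixels come from; the `extraction` helper (a hypothetical name, not an ARRI tool) suggests that 1.55:1 is only the capture shape, since the delivery aspect ratio is framed out of it afterwards, and that a 2x anamorphic extraction keeps the full sensor height:

```python
# Commonly quoted ALEXA XT figures (photosites); verify against ARRI's documentation.
OPEN_GATE = (3414, 2198)
SIXTEEN_NINE = (2880, 1620)


def extraction(width: int, height: int, target_aspect: float, squeeze: float = 1.0):
    """Largest centred area of a capture that gives `target_aspect` after desqueeze.

    `extraction` is a hypothetical helper for illustration, not an ARRI tool.
    """
    needed = target_aspect / squeeze  # shape the area must have on the sensor
    if width / height > needed:
        # Capture is wider than needed: keep the full height, crop the sides.
        return round(height * needed), height
    # Capture is taller than needed: keep the full width, crop top and bottom.
    return width, round(width / needed)


og_w, og_h = OPEN_GATE
print(f"Open Gate aspect: {og_w / og_h:.2f}:1")
print(f"Pixels vs 16:9: {og_w * og_h / (SIXTEEN_NINE[0] * SIXTEEN_NINE[1]):.2f}x")
print("2.39:1 spherical extraction:", extraction(og_w, og_h, 2.39))
print("2.39:1 from 2x anamorphic:", extraction(og_w, og_h, 2.39, squeeze=2.0))
```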

-

Hello, browsing some monitor manufacturers' websites, I kept reading "contrast ratio 1000:1" in the specs (others were 750:1). What does that mean?
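As a general illustration: a static contrast ratio compares the brightest white a display can show with its deepest black, so 1000:1 means white is 1000 times brighter than black. Since each photographic stop is a doubling, the ratio converts to stops with log2. The luminance values below are hypothetical, and manufacturers may measure contrast differently, so spec-sheet figures are only a rough comparison:

```python
import math


def contrast_ratio(white_cd_m2: float, black_cd_m2: float) -> float:
    """Static contrast ratio: brightest white divided by deepest black luminance."""
    return white_cd_m2 / black_cd_m2


def ratio_to_stops(ratio: float) -> float:
    """Each photographic stop is a doubling, so stops = log2(ratio)."""
    return math.log2(ratio)


# Hypothetical panel: 250 cd/m^2 white, 0.25 cd/m^2 black -> 1000:1.
print(contrast_ratio(250, 0.25))
for spec in (1000, 750):
    print(f"{spec}:1 is about {ratio_to_stops(spec):.1f} stops")
```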

-

Most monitors are 1.5G 8-bit 422 and they can't accept 3G 10-bit 444.

Having set up many post-production facilities, I can say that unless a monitor is advertised to accept 3G 444, it most likely doesn't. It takes a totally different class of electronics.

Thanks a lot Tyler! One last thing, from my previous post: how do you know that 10-bit is 444 3G? Is there such a thing as 10-bit 422?

-

Hello everybody, weird question here, I hope it's not too confusing. I'm using a SmallHD AC7, which is an 8-bit monitor. I am sending it a 422 1.5G signal from the Arri Amira and everything is OK, but I could not see an image when trying to send 444 3G. I understand the basics of color sampling; what I cannot understand is how to go from knowing the bit depth to understanding the data rate and color sampling a monitor is capable of handling. In simpler words, is there a way I could have known that, with an 8-bit monitor, I could not send a 444 image through SDI?
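A back-of-the-envelope sketch of where the 1.5G and 3G figures come from, assuming the standard 1080-line HD raster of 2200 × 1125 total samples per frame at 30 fps (2750 × 1125 at 24 fps gives the same rate) and SDI's 10-bit words. It suggests the deciding factor is the SDI link the monitor's input accepts, not the panel's bit depth: 10-bit 4:2:2 fits a single 1.5G link, while 4:4:4 at the same frame rate needs 1.5x the data and so a 3G single link (SL) or two 1.5G links (DL). The function names are illustrative:

```python
# Nominal 1080-line HD raster: 2200 x 1125 total samples per frame at 30 fps
# (2750 x 1125 at 24 fps gives the same rate). SDI carries 10-bit words.
LINK_RATES = {"1.5G": 1.485e9, "3G": 2.97e9}  # bits per second
WORDS_PER_PIXEL = {"422": 2, "444": 3}         # Y + alternating Cb/Cr vs Y/Cb/Cr or R/G/B


def sdi_bps(fps: float, sampling: str, samples_per_line: int = 2200,
            lines: int = 1125, word_bits: int = 10) -> float:
    """Serial bit rate for a 1080-line signal, including blanking."""
    return samples_per_line * lines * fps * WORDS_PER_PIXEL[sampling] * word_bits


def link_needed(rate_bps: float) -> str:
    """Smallest SDI link arrangement that can carry the given rate."""
    if rate_bps <= LINK_RATES["1.5G"]:
        return "1.5G single link"
    if rate_bps <= LINK_RATES["3G"]:
        return "3G single link (or 1.5G dual link)"
    return "more than one 3G link"


for fps, sampling in [(30, "422"), (30, "444"), (60, "422")]:
    rate = sdi_bps(fps, sampling)
    print(f"1080p{fps} {sampling} 10-bit: {rate / 1e9:.3f} Gb/s -> {link_needed(rate)}")
```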

-

I don't see why a forum poster is necessarily more reliable. You have to gather information from a variety of sources and look for confirmation, but basic stuff like what component video and composite video are is well-defined in hundreds of technical manuals and websites, so I don't see why someone here is more accurate by default, unless they look stuff up first to confirm their facts, as I often do (and thus do the homework for a person who was too lazy to do that homework themselves).

Asking people to define basic technical terms that you could look up yourself is no better than asking people what the capital of some country is, or who shot what movie, etc. If we are going to put time and effort into answering questions on this site, we expect the original posters to make the same effort in looking stuff up.

If you need clarification or confirmation on some fact you found elsewhere, then just say so up front. If you looked up component and composite video and found some fact confusing or contradictory, then go ahead and ask. But don't show up on a forum and ask people to define basic terms. Why not ask "what's a lens?" "What is reversal film?" "What is an SSD?" Asking what the difference is between component and composite video is the same type of question. Unless you simply didn't understand what you read and need it explained to you more clearly and simply.

This is just one of my pet peeves, and it goes back 18 or more years of answering questions online. Someone would ask for a definition, so I'd look it up on several sites just to confirm what I thought I already knew, and then I'd compile all that information into a forum post, sometimes citing sources... doing work that the original poster could have done.

I understand your point, and I apologize if this bothered or annoyed anybody else. I really appreciate the info I was able to get from this forum and all the people in it, especially from you David. I'll pay more attention in the future to go more in depth before asking something.

-

Davide, there are whole Wikipedia entries on these things, why don't you spend a little time looking this stuff up before you start asking for clarification here?

See:

I did. I do not completely trust Wikipedia (it has happened that I read something there and then found out from people here that Wikipedia was wrong). I trust the experience of people here more. To be honest, I don't want to learn something that is wrong and that will make me look like a fool. As for the last part of the topic, I'm really asking for confirmation, to see whether I have processed the information properly. For example, about my last question on YPbPr, Wikipedia doesn't say whether it requires three separate cables or one "Y" cable with three different ends.

-

Component signal separates the luminance from chrominance. There are three ways to do this. One is to carry the chrominance signal combined, which is S-Video. Another is to separate the blue and red color-difference signals, which is what we refer to as YPbPr: three signals that each look black and white but together carry the chrominance. The final way is true RGB, which carries separate Red, Green and Blue signals.

Everything is pretty clear up to this point. My last doubt is about S-Video: how many cables does it use? Is it one cable carrying the luminance and the combined chrominance?

-

Hi everybody! One of my biggest fears on set is frying camera accessories because I misunderstood voltage, etc. I know that some accessories like the TVLogic need 12 volts while others like follow focus motors need 24 volts. What I do not understand is how I know which voltage is correct for each accessory and what the voltage of each power port is (if I'm not wrong, RS ports are 24 V while D-Tap is 12-18 V). Is there any rule of thumb, or does anybody have a more detailed explanation that goes beyond my knowledge of the topic? My understanding of electricity is pretty basic too.

Thank you in advance for the help!
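One general rule of thumb, sketched below: the accessory's label or manual gives its rated input voltage range, and the camera or battery documentation gives each port's output range. A connection is only safe if the port's whole range (from a fully charged to a nearly flat battery) sits inside the accessory's rated input. It is also worth checking current, since a port must be able to supply I = P / V. Every figure in the sketch is a made-up example, not a real spec:

```python
from dataclasses import dataclass


@dataclass
class VoltageRange:
    low: float   # volts
    high: float  # volts


def port_is_compatible(port: VoltageRange, accessory: VoltageRange) -> bool:
    """True if everything the port can output lies within the accessory's rated input."""
    return accessory.low <= port.low and port.high <= accessory.high


def current_draw_amps(power_w: float, volts: float) -> float:
    """I = P / V: the current an accessory of a given wattage pulls at a given voltage."""
    return power_w / volts


# Example figures only, NOT real specs: always read the labels and manuals.
d_tap = VoltageRange(12.0, 16.8)        # e.g. a 14.4 V nominal battery
rs_24v = VoltageRange(22.0, 28.0)       # hypothetical 24 V port tolerance
monitor_in = VoltageRange(6.5, 18.0)    # hypothetical 12 V-class monitor
motor_in = VoltageRange(20.0, 34.0)     # hypothetical 24 V-class motor

print(port_is_compatible(d_tap, monitor_in))   # True
print(port_is_compatible(rs_24v, monitor_in))  # False: 28 V exceeds its 18 V max
print(port_is_compatible(d_tap, motor_in))     # False: too low for the motor
print(f"{current_draw_amps(20, 12):.2f} A")    # a 20 W load at 12 V
```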

-

You can use a TVLogic monitor that has a composite video input like the 5.6WP and 074W. The 5.6 uses RCA for composite input, so you would need to use a BNC cable with a female BNC to male RCA adapter. The 074W uses BNC for composite. But you can't loop out from there, the signal terminates at the monitor.

I don't think you can send composite video through the Teradek, just HD-SDI. So you would have to convert the composite output to HD-SDI first with an AJA or Blackmagic Analog to Digital converter box. If you are renting the Arricam Studio package from a large rental house, it may be possible to just get an HD-SDI video tap. That would be the simplest solution.

Next simplest would be to use an old school composite video wireless transmitter like the Modulus instead of the Teradek. Then either use composite video director's monitors, or modern HD-SDI director's monitors with an AJA converter box velcro'd to the back.

Thank you so much! We did exactly what you said on the shoot and it worked! I have another question (and I apologize in advance for the silliness of it): how could we have gotten a better-quality image on the TVLogic for the AC to pull focus on? Maybe by using the component inputs and splitting the signal into the three different components? For example, having three BNCs (with the RCA adapter), each going into one of the Y, R and B inputs of the TVLogic?

-

The luminance is the base signal; it's the "Y" cable of your composite system. With composite, the luminance gets a compressed chrominance subcarrier frequency attached to it. The color carrier is generally half of the scanning rate; this is why composite doesn't look nearly as good as component.

Composite was designed specifically for broadcast, and almost all of the standard definition systems and videotape machines made were based on a composite standard.

Component signal separates the luminance from chrominance. There are three ways to do this. One is to carry the chrominance signal combined, which is S-Video. Another is to separate the blue and red color-difference signals, which is what we refer to as YPbPr: three signals that each look black and white but together carry the chrominance. The final way is true RGB, which carries separate Red, Green and Blue signals.
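In YPbPr the three signals are luma (Y, the black-and-white picture) and two scaled color-difference signals (Pb from B − Y, Pr from R − Y). Each travels on its own conductor, which is why analog component connections are usually three separate cables, often color-coded green, blue and red. The sketch below assumes the BT.601 standard-definition coefficients (HD's BT.709 uses different ones) and shows why no separate green signal is needed:

```python
# BT.601 luma coefficients (standard definition); BT.709 HD uses different ones.
KR, KG, KB = 0.299, 0.587, 0.114


def rgb_to_ypbpr(r: float, g: float, b: float):
    """Normalized R'G'B' (0..1) -> Y' (0..1), Pb and Pr (-0.5..0.5)."""
    y = KR * r + KG * g + KB * b       # luma: the black-and-white picture
    pb = (b - y) / (2 * (1 - KB))      # scaled blue-difference
    pr = (r - y) / (2 * (1 - KR))      # scaled red-difference
    return y, pb, pr


def ypbpr_to_rgb(y: float, pb: float, pr: float):
    """Inverse: green needs no wire of its own, it is recovered from Y, Pb and Pr."""
    r = y + 2 * (1 - KR) * pr
    b = y + 2 * (1 - KB) * pb
    g = (y - KR * r - KB * b) / KG
    return r, g, b


print(rgb_to_ypbpr(1.0, 1.0, 1.0))  # white: no color difference
print(rgb_to_ypbpr(0.0, 0.0, 1.0))  # pure blue: Pb at its maximum
```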

The most common NTSC/consumer component signals are S-Video and YPbPr. RGB wasn't as widely used in broadcasting because most equipment was composite until the advent of digital technology. When Serial Digital Interface (SDI) came around, most broadcasters switched over and analog as we know it died on the vine very quickly.

As a side note, there were only two component analog tape formats: Betacam SP and its Panasonic rival, MII. Both recorded the component signal onto tape exactly as it went into the machines, with no muxing, so almost all of the other tape formats were composite. Some machines came with an S-Video output, but only the higher-resolution ones: LaserDisc, Hi-8, S-VHS and Betamax Hi-Band.

Thank you so much, this is really useful information. My follow-up question comes from an experience I had on set last week. We were shooting on the Arricam Studio, so in order to have a working TVLogic we had to use an RCA adapter to convert the HD-SDI into an "analog signal". We got the adapter and were able to get a (really low-quality) image on the TVLogic by switching its input to composite and plugging the connector into the Luma input. My question is: would it hypothetically have been possible to get a better image for the AC by switching the TVLogic input to component and using three different BNCs, one going into each of the Green, Red and Blue inputs? Would that have given us an image usable for pulling focus on the TVLogic?

-

Can anybody tell me the difference between a composite and a component signal? Are they both analog signals?

Thanks so much in advance

-

23.98 is only a "for broadcast" frame rate, so for a monitor output of a camera, it's not really going to make any difference on a film set.

Can you tell me more about this? Why isn't it going to make any difference?

-

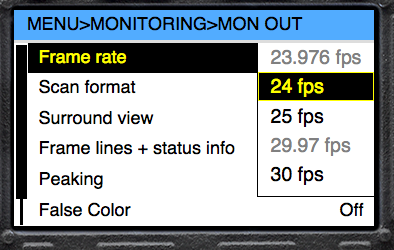

Why 23.976 and not 24 fps? And when should I set the camera and the BNC monitoring output to one or the other?
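For background: 23.976 is not a rounded 24. It is exactly 24 × 1000/1001, the same 1000/1001 slow-down that turned NTSC's 30 fps into 29.97 when color was added, and it exists so film-rate material lines up with NTSC-derived broadcast timing. The sketch computes the exact rate and how far true 24 and 23.976 drift apart over time, which is why everything in a workflow (picture, sound, timecode) needs to agree on which one is in use:

```python
from fractions import Fraction

NTSC_FACTOR = Fraction(1000, 1001)  # the slow-down behind 29.97 (30 x 1000/1001)


def ntsc_rate(nominal_fps: int) -> Fraction:
    """Exact NTSC-compatible rate: 24 -> 24000/1001 (~23.976), 30 -> 30000/1001 (~29.97)."""
    return nominal_fps * NTSC_FACTOR


def drift_seconds(nominal_fps: int, duration_s: int) -> float:
    """Extra time the frames of `duration_s` at true `nominal_fps` take at the 1000/1001 rate."""
    frames = nominal_fps * duration_s
    return float(frames / ntsc_rate(nominal_fps) - duration_s)


print(f"{float(ntsc_rate(24)):.3f} fps")                 # 23.976 fps
print(f"{drift_seconds(24, 3600):.1f} s drift per hour")  # 3.6 s drift per hour
```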

-

Hi everybody, I apologize in advance if the question is silly, but: I know that the bigger the sensor, the shallower the depth of field. In digital, is that also related to the camera's resolution?

To be clearer: on the same camera, for example an ALEXA with a Super 35mm CMOS sensor, is shooting 3.2K, 2K or standard HD going to affect the depth of field?

Thanks for the help!
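The usual depth-of-field formulas may help frame the question. In the common thin-lens approximation below, depth of field depends on focal length, aperture, focus distance and the circle of confusion (CoC); pixel count is not an input. Under that model, changing the recording resolution while keeping the same sensor area, lens, framing and acceptable CoC leaves depth of field unchanged. If a mode instead uses a smaller area of the sensor, you would typically change focal length or distance to keep the framing, and those changes (plus the smaller CoC usually assumed for a smaller area) are what alter it. The 0.025 mm CoC is an assumed value:

```python
import math


def depth_of_field_mm(focal_mm: float, f_number: float, subject_mm: float, coc_mm: float):
    """Near and far limits of acceptable focus (standard thin-lens approximation).

    Inputs are focal length, aperture, subject distance and the circle of
    confusion (CoC). The recording resolution is not a parameter: it only
    matters if it changes the CoC you accept or the sensor area in use.
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        return near, math.inf
    far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far


# Assumed values: 50 mm at f/2.8, subject at 3 m, CoC 0.025 mm
# (a figure often used for Super 35; other choices give other results).
near, far = depth_of_field_mm(50, 2.8, 3000, 0.025)
print(f"in focus from {near / 1000:.2f} m to {far / 1000:.2f} m")
```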

-

Hi everybody, scrolling through the ALEXA menu I saw some items and I'm not sure what they are.

- in the recording/rec out menu I see SCAN FORMAT, with the possibility of choosing between P or PSF. What is the difference between them?

- in the same menu I also see HD-SDI FORMAT, with the possibility of choosing between 422 1.5 SL/DL, 422 3G SL, 444 1.5G DL and 444 3G DL/SL. While I know that 422/444 have to do with color sampling, I have no idea what 1.5G and 3G mean, and the same goes for SL/DL.

- in the MONITORING/MON OUT menu I see SCAN FORMAT, and the options are P or PSF. Is this exactly the same thing I asked in my first question? And why does the frame rate option in this menu only let me choose 24, 25 and 30 fps and not 23.976?
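For general background on P vs PsF: P sends each progressive frame as a whole, while PsF (progressive segmented frame) sends the same progressive frame split into two segments, odd lines then even lines, so it can travel through equipment built for interlaced signals. Because both segments come from the same instant (unlike true interlaced fields), re-interleaving them gives back the original frame exactly. A toy model of that idea, not the real SDI mapping, which is more involved:

```python
def split_psf(frame):
    """Split one progressive frame (a list of rows) into two segments for transport.

    Both segments come from the same instant, unlike true interlaced fields.
    """
    return frame[0::2], frame[1::2]  # lines 1, 3, 5... and lines 2, 4, 6...


def merge_psf(first, second):
    """Re-interleave the two segments, recovering the original frame exactly."""
    frame = []
    for i, row in enumerate(first):
        frame.append(row)
        if i < len(second):
            frame.append(second[i])
    return frame


frame = [f"line {n}" for n in range(1, 8)]  # a toy 7-line "frame"
seg1, seg2 = split_psf(frame)
assert merge_psf(seg1, seg2) == frame
print(seg1, seg2, sep="\n")
```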

Thank you in advance for the help!

-

Confusion about lenses/sensor size and angle of view. Larger format lens on smaller format sensor.

in General Discussion

Hi everybody,

I was reading a previous issue of "Film and Digital Times" and came across a section in which the editor asks one of the lens engineers behind the Signature Primes:

-Q: "What happens with a large format lens like a Signature Prime on a Super 35mm camera like an Alexa SXT?"

-A: "The LF lens looks the same as the S35 lens. An 18mm LF lens on the S35 camera looks just like an 18mm S35 lens on the S35 camera"

Maybe I lack some knowledge, but I thought we were going to get a narrower field of view. So, following the same logic, if I put a 50mm Master Prime on an Arri 416 Plus, it would give me the same FOV as, for example, a 50mm Ultra 16 prime.
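The engineer's answer follows from basic geometry: for a rectilinear lens focused at infinity, the horizontal angle of view is 2·atan(w / 2f), where w is the width of the sensor area in use and f the focal length. The format a lens was designed for only decides how big its image circle is (whether it covers the sensor), so a larger-format lens on a smaller sensor just leaves part of its image circle unused. The view is narrower than the same lens gives on a large-format camera because w is smaller, yet nominally identical to a smaller-format lens of the same focal length on the same sensor. The sensor widths below are approximate, assumed values:

```python
import math


def horizontal_fov_deg(focal_mm: float, sensor_width_mm: float) -> float:
    """Horizontal angle of view of a rectilinear lens focused at infinity.

    Only focal length and the width of the sensor area in use appear here;
    the format the lens was designed for does not.
    """
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_mm)))


# Approximate, assumed sensor widths in mm; check the camera's actual specs.
S35_WIDTH = 24.9
S16_WIDTH = 12.5

for label, width in [("Super 35", S35_WIDTH), ("Super 16", S16_WIDTH)]:
    print(f"50mm on {label}: {horizontal_fov_deg(50, width):.1f} degrees")
```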

I'm really confused about what happens when we put a bigger format lens on a smaller format sensor.

Thanks in advance!