
Posts: 93


Posts posted by Stephen Baldassarre

-


This was from a 16mm shoot, many, many years ago. I think the stock was 7205, shot at 24fps and T2 with no filters or artificial lighting.

The first movie I directed had an indoor fireplace scene. I shot it on 200 ISO at F2 as well, though it was a smaller fire and the subject was closer to it. I'm afraid I don't have a screenshot at the moment. You can put silver reflectors nearby to add some fill.

-


Just a quick note on the poor rendition of red on film. The blue-sensitive layer is on top, followed by green, then red. Not only is the red layer slightly farther from the lens's plane of focus, but the light reaching it has also scattered through the other emulsion & filter layers. That's one of the reasons photochemical composite shots were traditionally done with blue screen. (Skin tone also contains very little blue, but that's beyond this topic.) I also suspect that red is more susceptible to light reflecting off the antihalation coating. It's dark, but not 100% absorptive. Any light bouncing off that coating has to pass through the color filter layers a second time to reach the green and blue emulsion layers, whereas the red layer essentially has a direct line of sight to the back of the film.

This is a good look into the structure of film.

http://www2.optics.rochester.edu/workgroups/cml/opt307/spr10/shu-wei/

Mr. Mullen, those screenshots look fantastic! They give the impression of red, but pure red doesn't exactly exist in them. Even deep red gels still pass a much broader spectrum than red LEDs. You also have splashes of full color, which give the eye a reference point for maintaining its own white balance. It's a stark contrast to many modern movies, where entire scenes are digitally tinted orange, blue, etc. Newer movies feel very "alien" by comparison, mostly because a lot of graders seem to screw with the color for no reason. I say this right after throwing out a LUT that was given to me with some raw video, shot under fairly heavily saturated red & magenta lights, in favor of a simple log conversion with a slight manual tweak on my end. The guy shot some amazing stuff, but his grade made it look just plain weird.

-

Sure thing. Like I said, not exactly what I wanted but close enough for this project.

-

I'm familiar with the blackened foil. It IS good stuff, though a bit dangerous to handle. I wound up going back to Hobby Lobby again and this time, found something close enough for my purposes.

Thanks!

-

Don't be afraid to look on the used market for some 1K Fresnels. I'm sure you can get them super cheap or free. I'd offer you some of mine but the shipping cost would probably be more than the fixtures are worth. :D

-

When shooting indoors, I try to always shoot around F4 unless I'm going for a special look. I usually don't have the budget to do much lighting reinforcement outdoors, but with a good ND filter I can generally get between F4 and F8.

I seem to remember reading that Josh Becker generally lights everything to F5.6. It's not as hard as it sounds unless you're doing available-light shooting.
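To put rough numbers on "lighting everything to F5.6", here's a sketch using the incident-light exposure equation E = C·N²/(t·S). The calibration constant C varies from meter to meter (values around 250 to 340 lux-seconds are common, 250 is assumed here), and the 1/48 s exposure assumes 24fps with a 180° shutter, so treat the results as ballpark figures rather than meter readings.

```python
LUX_PER_FOOTCANDLE = 10.764

def required_lux(f_number, iso, exposure_s, c=250.0):
    """Incident-meter exposure equation E = C * N^2 / (t * S).

    c is the meter calibration constant (assumed 250 here; meters differ).
    """
    return c * f_number ** 2 / (exposure_s * iso)

# Assumption: 24fps with a 180-degree shutter, i.e. 1/48 s per frame.
t = 1 / 48
for n in (2.8, 4.0, 5.6):
    lux = required_lux(n, 200, t)
    print(f"F{n} at ISO 200: {lux:5.0f} lux (about {lux / LUX_PER_FOOTCANDLE:3.0f} fc)")
```

Each full stop roughly doubles the light required, so going from F4 to F5.6 means about twice the footcandles.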

-

The main problem with most LED fixtures, even fairly high-dollar video lights, is that there's very little energy between deep blue and green, virtually no cyan, and little in the deep red range. What I usually do is use LEDs for my fill lights and then add a tungsten key, which works pretty well.

-


Hello, it's been a while since I've been here, but I have a very specific material need I can't find anywhere. When I worked in television years ago, we had large sheets of what was basically VERY heavy, stiff construction paper. It would stay flat, but if you intentionally bent it, it would hold that shape, and it held up to the high temperatures of being clipped to the barn doors on 2K & 5K Fresnels. If you tore it, you could see that it was black all the way through, not just on the surface like normal construction paper. I really need some of this stuff, and none of the lighting/photography places have any clue about it, nor do the craft stores. Does anybody know where I can get something like that?

I have to replace a projection screen in an auditorium. Ambient spill light is bad enough on the current screen, but they want the new screen hung lower. I measured a full stop more ambient light where the new bottom edge of the screen will be, so I need to extend the barn doors substantially to get the hard cut line needed to light people on the stage without washing out the screen. I made some extensions, turning 6" barn doors into 16" ones, out of 30-gauge sheet metal and high-temp paint. That worked perfectly for the ETC barn doors, as I could just tighten the bolts to hold them in place. The DeSisti barn doors won't hold the weight, and regular construction paper just plain DID NOT WORK. :D I can't use regular flags and C-stand parts due to the construction of the ceiling.

Really, that stuff we used in television would be perfect. Any advice would be much appreciated, thank you!

-

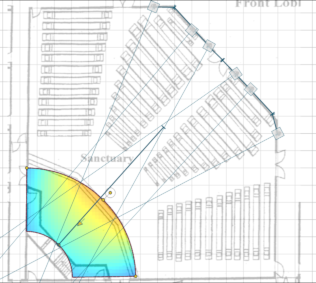

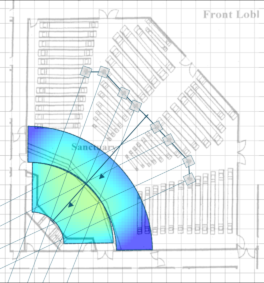

This is sort of off-topic for cinema, but related. I was hired to set up a video streaming system for a church. Since they were in a hurry to get up and running, I set up temporary lighting on their balcony going purely by instinct (and a light meter, of course). I have the entire stage lit within 1/2 stop using six Fresnels, and it looks good on camera. I want to be a bit more "scientific" for the final version, where the lighting will be mounted to the ceiling and there will be eight fixtures total. I did an experiment where I replicated my temporary lighting in EASE Focus, which is speaker coverage software. I chose a speaker model that radiates (in the high frequencies) similarly to a Fresnel and adjusted the virtual test frequency until I found the one that most closely matches the lights' beams. See attached. The results are consistent with my practical observations, with measurements taken at eye level. The map is set to cover a 1-stop range, where bright red (none showing) is the equivalent of +1/2 stop, deep blue (none showing) is -1/2 stop, and green/yellow is on target. The 2nd pic is a quickie experiment for a possible lighting scenario. It's much better than the current temporary lighting, with everything within 1/4 stop (but I probably can't fully trust it).

Sound does not behave the same way as light, as similar as the two may be in some respects. Is there software out there that is DESIGNED for lighting? I feel like that would certainly be a lot easier than speaker placement software: choose a fixture type, beam width, power, etc., place it in the room, adjust the angles and barn doors, done. BTW, the grid has 5' spacing. It's a pretty big stage. The 2nd "zone" in the 2nd pic accounts for the difference in floor elevation, as they sometimes have people talk in front of the stage.
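For what it's worth, the core of a lighting-specific coverage tool is fairly simple: sum each fixture's contribution with the inverse-square law and Lambert's cosine law, then express the totals in stops. The sketch below assumes every Fresnel is a point source with a smooth Gaussian-shaped beam (a made-up stand-in for real photometric data), and the fixture positions, aim vectors and 40° beam angle are purely hypothetical.

```python
import math

def illuminance(point, fixture, normal):
    """Relative illuminance at `point` on a surface with unit normal `normal`.

    fixture = (position, aim_vector, peak_intensity, beam_angle_deg).
    Point-source model: E = I(theta) * cos(incidence) / d^2, where I(theta)
    falls to 50% at half the beam angle (a Gaussian stand-in, not real
    photometric data).
    """
    pos, aim, peak, beam_deg = fixture
    to_light = [lp - pp for lp, pp in zip(pos, point)]
    d2 = sum(c * c for c in to_light)
    d = math.sqrt(d2)
    aim_len = math.sqrt(sum(a * a for a in aim))
    # Angle between the beam axis and the ray from the light to the point.
    cos_off = -sum(a * c for a, c in zip(aim, to_light)) / (aim_len * d)
    off_axis = math.acos(max(-1.0, min(1.0, cos_off)))
    half_beam = math.radians(beam_deg) / 2
    intensity = peak * math.exp(-math.log(2) * (off_axis / half_beam) ** 2)
    # Lambert's cosine law; light arriving from behind the surface adds nothing.
    cos_inc = sum(n * c for n, c in zip(normal, to_light)) / d
    return intensity * max(cos_inc, 0.0) / d2

def stops_from_mean(points, fixtures, normal):
    """Total illuminance at each point, in stops relative to the average."""
    totals = [sum(illuminance(p, f, normal) for f in fixtures) for p in points]
    mean = sum(totals) / len(totals)
    return [math.log2(e / mean) for e in totals]

# Hypothetical layout in feet: x across the stage, y upstage, z up.
# Meter points at eye level (5') on a 5' grid, facing the camera (-y).
points = [(x, y, 5.0) for x in range(-20, 25, 5) for y in (5, 10, 15)]
fixtures = [((x, -15.0, 20.0), (0.0, 1.0, -0.6), 1.0, 40.0) for x in (-15, -5, 5, 15)]
stops = stops_from_mean(points, fixtures, normal=(0.0, -1.0, 0.0))
print(f"spread: {min(stops):+.2f} to {max(stops):+.2f} stops from average")
```

Any point beyond ±0.5 here would correspond to the bright red or deep blue ends of a 1-stop coverage map like the one described above.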

Thank you!

-

I look forward to seeing some shots!

Music videos have always been my favorite projects to shoot because you can get away with just about anything you want. If you can pull off some effect, great! If it doesn't work, it's part of the art form, so no big deal. It's like when I shot a music vid and luma-keyed in some ethereal junk in the background just because it was a plain black background otherwise. The luma key ate into some of the foreground but that just made it cooler!
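Here's a minimal sketch of why a luma key can eat into the foreground: it keys on brightness alone, so any foreground pixel darker than the threshold (shadows, dark clothing) gets replaced along with the black backdrop. It assumes gamma-encoded RGB in the 0..1 range and Rec. 709 luma weights; the threshold is arbitrary, and this is a hard key with no softness.

```python
import numpy as np

# Rec. 709 luma weights for gamma-encoded R'G'B' (assumed range 0..1).
REC709_LUMA = np.array([0.2126, 0.7152, 0.0722])

def luma_key(foreground, background, threshold=0.1):
    """Hard luma key: show the background wherever the foreground is darker
    than `threshold`. Both images are float arrays of shape (H, W, 3)."""
    luma = foreground @ REC709_LUMA              # (H, W)
    keep = (luma >= threshold)[..., np.newaxis]  # (H, W, 1) boolean matte
    return np.where(keep, foreground, background)

# One row: black backdrop, a dark shadow on the subject, a lit skin tone.
fg = np.array([[[0.0, 0.0, 0.0], [0.05, 0.05, 0.05], [0.8, 0.6, 0.5]]])
bg = np.zeros_like(fg)
bg[...] = [0.2, 0.3, 0.9]  # the "ethereal junk" plate
out = luma_key(fg, bg)
# The shadow pixel is keyed out along with the backdrop.
```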

-

That looks cool! I love practical effects. One of my favorites for a music video was a shot that waved and distorted. Nobody believed I didn't use some computer plugin (and this was in the 90s); I just shot downward through a Pyrex pan with water in it.

-


I found this explanation on a site that's largely dedicated to matte paintings https://nzpetesmatteshot.blogspot.com/2016/01/mattes-maketh-musical.html

"any color that had more green than blue could not be reproduced. Green and yellow, for example, were missing in action. Yellow turned white, green turned blue-green (cyan). That's because, by definition, green and yellow have more green content than blue. (Yellow = green plus red.) Excess blue was poisoning those colors.

Vlahos realized that if he could add just a little density to the green separation in those areas only when it was being used as a replacement for the blue separation, he could get those colors back. He needed a film record of the difference between blue and green, thus the Color Difference System's name. In a pencil-and-paper thought experiment, Vlahos tried every combination of the color separations, the original negative, and colored light to see if he could achieve that difference. The magic combination was the original negative sandwiched with the green separation, printed with blue light onto a third film. The positive and negative grey scales cancelled out, but where there was yellow in the foreground (for example) the negative was blue and the green separation was clear. The blue light passed through both films without being absorbed and exposed the third film where there was yellow in the original scene. The same logic produced a density in green and in all colors where there was more green than blue. This was the Color Difference Mask, which in combination with the green separation, became the Synthetic Blue Separation."

I've learned so much about Hollywood effects from that site over the years. It's really worth a look if you haven't seen it already. It actually inspired me to do background (oil) paintings for a recent series of promos I shot instead of finding real backgrounds or making them with CGI. They weren't exactly 100% realistic, but the cartoonish action of the video made the paintings work well in this case. Plus, it gave a unique look to the final product.

-

It sounds like you have the ideal rig already. It may take some experimentation, but you might try making a frame that bolts to a tripod and holds a high-grade front-surface mirror (so you can get away with a much smaller, thinner mirror), letting you put it almost right up to the camera.

-

Why not do a foreground painting? Put a sheet of glass between the camera and shore, then paint what you want onto the glass. If you paint them so some real elements show through gaps in some cookies, it will have more "life" to it. There doesn't need to be a ton of detail so you can get it done very quickly. You could probably comp in a second pass of the painting with a little bit of motion, for the cookies right at the water line, bobbing with the current.

-

Thanks for the links. If I'd been smart, I would have downloaded the entire page when I found it. There's at least enough information between the links you guys provided to refresh my memory on how it was done.

-

Excellent, thank you!

While I was hoping to find the static web page explaining the process in detail, that video goes into much more detail than most, including the often-overlooked cheat for the blue separation.

I thought there was another trick where a blue/green difference separation was created to use in place of the blue separation...or something like that.

I recently regained interest in this process because I was doing a green screen comp where spill was really obvious on a VERY fuzzy, mostly gray sheep when it moved quickly. The green spill suppression wasn't as effective as simply replacing the green channel in this case and the change in color was barely noticeable. That got me to thinking about how to change the channel matrix to get better color in other situations, should the need arise.
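In digital terms, the Color Difference Mask plus green separation trick from the photochemical process appears to work out to synthetic blue = min(B, G): where green exceeds blue, the original blue is effectively restored, and where blue exceeds green (spill), green stands in for it. This sketch is one reading of that process, not a documented formula, and `limit_green` is just one common digital green-screen despill variant (clamping G to max(R, B)), not necessarily what "replacing the green channel" meant here.

```python
import numpy as np

def synthetic_blue(rgb):
    """Replace the blue channel with min(B, G).

    Where green exceeds blue (greens, yellows) the real blue value is kept,
    which is what the Color Difference Mask restores; where blue exceeds
    green (blue spill) the green value stands in, as the green separation did.
    """
    out = np.array(rgb, dtype=np.float64)
    out[..., 2] = np.minimum(out[..., 2], out[..., 1])
    return out

def green_as_blue(rgb):
    """The oversimplified 'just use the green separation' version, for comparison."""
    out = np.array(rgb, dtype=np.float64)
    out[..., 2] = out[..., 1]
    return out

def limit_green(rgb):
    """One common digital green-screen despill: clamp G to max(R, B)."""
    out = np.array(rgb, dtype=np.float64)
    out[..., 1] = np.minimum(out[..., 1], np.maximum(out[..., 0], out[..., 2]))
    return out

yellow = [1.0, 1.0, 0.0]
print(green_as_blue(yellow), synthetic_blue(yellow))  # white vs. yellow kept
print(limit_green([0.5, 0.7, 0.5]))                   # gray sheep with green spill
```

The comparison reproduces the failure the blog excerpt describes: substituting green for blue outright turns yellow white and green cyan, while the min(B, G) version leaves both alone. Note that genuinely blue foreground colors still lose their blue, which is the usual blue-screen limitation.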

Thanks again!

-

Hello all,

I found a web page years ago that explained the traditional photochemical blue screen process in brief, easily understood, but fairly complete detail. It talked about how a basic composite required something like 14 film elements, the issue with blue spill, and how a green/blue difference element needed to be created in place of a conventional blue separation for the foreground. I can't seem to find the article now (which had great illustrations as well). Does anybody know where it is, or if there is another resource that goes into that level of detail? There are hundreds of sites out there that talk about the process but are dumbed down to the point of not really representing how it was done (many say the green separation is simply used in place of the blue separation, which was rarely true).

Thanks!

-

Well, when you compare it to "Keylight", it's really very similar, though Keylight has the extra processes built into it. I just have a template set up for the process and I tweak it for different situations.

-

Sorry if this is in the wrong forum or against the rules. If so, feel free to let me know and/or remove it.

I noticed that there are tons of videos of people using either the built-in chroma keyer or third-party plugins for green screen et al. comps, and none using the method I use, which I feel works much better and, best of all, is free. Let me know if you have any questions.

-

Cough

Is it $1000?

Does it have a proper optical block?

Probably not

-

> I'm far from being an expert, but it seems like 2K is about as far as you can go with an S16-sized sensor while still retaining a reasonable native ISO. At that resolution, wouldn't an OLPF need to be fairly strong to counter aliasing and moire? Wouldn't that in turn lead to a level of softness that was unacceptable by today's (very sharp) standards?

Ah, so, 2K on a 2/3" sensor (regular 16mm) is about as dense as you can get without sacrificing performance, this is true.

As for the OLPF, you have to figure out where your compromises need to be. With a conventional single-sensor camera doing its demosaic process internally (usually bilinear, nearest-neighbor in really cheap cameras), you'd have to limit the resolution to about 500 lines to avoid ALL aliasing (and that's what the first prosumer HD cameras did). However, you can "get away" with a less aggressive OLPF to allow for somewhat higher resolution while allowing a little aliasing that most people won't notice. Now, move the demosaic process to a computer, where you have much more power and no longer need to be real-time. You can use a more sophisticated algorithm that can figure out where true fine details are by looking at contrast changes across all three color channels and adjust its averaging from one pixel to the next. In the attached image, we have an image that (I believe) was shot on film, scanned to digital and converted to a Bayer pattern (left) to show what the processor has to do. The middle image is a bilinear interpolation, which fills in missing color information by taking the average of adjacent pixels. Despite the source image being free of aliases, there's pronounced aliasing in the center image because the Bayer pattern essentially sub-samples the image. On the right is a more processor-intensive algorithm that can be performed on a computer.
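Here's a minimal sketch of the bilinear interpolation described above, assuming an RGGB Bayer layout and using numpy only. The second test image (gray one-pixel vertical stripes) shows the sub-sampling problem: every red sample lands on a white column and every blue sample on a black one, so a colorless input comes out strongly colored.

```python
import numpy as np

def bayer_mosaic(rgb):
    """Sample an (H, W, 3) RGB image through an RGGB color filter array."""
    h, w, _ = rgb.shape
    mosaic = np.zeros((h, w))
    mosaic[0::2, 0::2] = rgb[0::2, 0::2, 0]  # red
    mosaic[0::2, 1::2] = rgb[0::2, 1::2, 1]  # green on red rows
    mosaic[1::2, 0::2] = rgb[1::2, 0::2, 1]  # green on blue rows
    mosaic[1::2, 1::2] = rgb[1::2, 1::2, 2]  # blue
    return mosaic

def filter3x3(img, kernel):
    """3x3 neighborhood filter. 'reflect' padding keeps the 2-pixel CFA
    parity correct at the borders."""
    h, w = img.shape
    padded = np.pad(img, 1, mode="reflect")
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def demosaic_bilinear(mosaic):
    """Fill each missing color with the average of its nearest same-color samples."""
    h, w = mosaic.shape
    masks = np.zeros((h, w, 3))
    masks[0::2, 0::2, 0] = 1
    masks[0::2, 1::2, 1] = 1
    masks[1::2, 0::2, 1] = 1
    masks[1::2, 1::2, 2] = 1
    k_green = np.array([[0, 1, 0], [1, 4, 1], [0, 1, 0]]) / 4.0
    k_red_blue = np.array([[1, 2, 1], [2, 4, 2], [1, 2, 1]]) / 4.0
    out = np.empty((h, w, 3))
    for ch, kernel in ((0, k_red_blue), (1, k_green), (2, k_red_blue)):
        out[..., ch] = filter3x3(mosaic * masks[..., ch], kernel)
    return out

# A flat color survives intact...
flat = np.ones((8, 8, 3)) * np.array([0.2, 0.5, 0.8])
flat_out = demosaic_bilinear(bayer_mosaic(flat))

# ...but gray one-pixel stripes come back with false color.
stripes = np.zeros((8, 8, 3))
stripes[:, 0::2, :] = 1.0
stripe_out = demosaic_bilinear(bayer_mosaic(stripes))
```

The more processor-intensive, edge-aware algorithms mentioned above replace the fixed kernels with weights that adapt to local contrast; this sketch only covers the simple bilinear case.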

So for a single-chip camera, one could use an OLPF that restricts the resolution to, say, 900 lines and have almost no aliasing in the output. A camera with no OLPF at all may resolve about 1000 lines with a really sharp lens, but any details above that get "folded" down into the sensor's resolution as aliases. The "sharpness" people perceive is not so much the extra 10% resolution as false details that didn't exist in the real world. One can also increase perceived sharpness by increasing the contrast in the 800-line range. Vision3 film is actually lower resolution than any of its recent predecessors, but people say it's sharper because its contrast increases around the 40 lines/mm range, or roughly 600-800 lines if you're shooting on 35mm. Vision1 could easily resolve 5K (as opposed to V3's 4K), but it had a more gradual roll-off in contrast leading up to that 5K, so it looked less sharp. Of course, if you push the contrast of the fine details too far, it looks like cheap video.
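The "folding" described above can be written out directly: a detail at frequency f, sampled at rate fs, shows up at its distance from the nearest multiple of fs. This sketch assumes a hypothetical 1080-row sensor and measures frequency in cycles per picture height (one cycle is one black line plus one white line, so TV lines = 2 × cycles). Red and blue sit on every other row of a Bayer sensor, which halves their sampling rate; that roughly matches the ~500-line figure for alias-free bilinear output.

```python
def folded_frequency(f, fs):
    """Apparent frequency of a detail at frequency f after sampling at rate fs.

    Anything above the Nyquist limit (fs / 2) is mirrored back below it.
    f and fs must share units, e.g. cycles and samples per picture height.
    """
    f = abs(f) % fs
    return fs - f if f > fs / 2 else f

rows = 1080  # hypothetical sensor height in pixels
for name, rate in (("full sensor", rows), ("red/blue rows", rows // 2)):
    nyquist_cycles = rate / 2
    print(f"{name}: Nyquist {nyquist_cycles:.0f} cycles = {2 * nyquist_cycles:.0f} TV lines")

# A 300-cycle detail is fine for the full sensor but aliases in red/blue.
print(folded_frequency(300, rows), folded_frequency(300, rows // 2))  # 300 240
```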

Sorry, I'm sure that's a far more complicated explanation than you were hoping for.

> I'm sure you're right in this, but it sounds like you are describing a camera that is nothing but a sensor, a lens mount, and a recorder. What about all the software and circuitry necessary for EVFs, monitor outputs, etc.? Can all this be achieved for your sub-$1k target?

My prototype will have an HDMI port, which will initially feed an inexpensive LCD panel. The preview will not be full resolution and possibly not even color, depending on how much processing overhead I have, but it will be enough to accurately frame the shot and that's better than I can say about 90% of the optical viewfinders out there. One might use the same port to feed an EVF, but that is a later experiment.

> Really, I'd love to see them. All of the acquisition formats that I know of were composite. 1", 3/4", even Betacam was pretty much composite.

Betacam is a true component system, roughly equivalent to 4:2:2. Almost any switcher could be converted to component by replacing the I/O cards. Betacam's main advantage is its component recording, so if you're going to use composite infrastructure, you would be in better shape sticking with 1".

> Still, Digibeta and HDCAM were both chroma-subsampled capture devices, nowhere near the amount of color information we have today. The mere concept of 12-bit 444 wasn't even around until more recently.

Considering the best practical performance you'd get out of a single-chip camera is 4:2:0, I still don't understand why this is important. You said yourself, we aren't producing DCPs, we're producing ATSC, Netflix and YouTube video.

> I switched to digital in 1998/1999 ish and that was the first time I used component.

That's the same time that I switched to digital, but I was using Y/C component before that. It was less expensive than RGB or Y/Pb/Pr but sharper than composite, with no dot crawl. I couldn't afford "professional" preview monitors, so I got a pair of C64 monitors for $20! I still have a slightly modded one and use it with my 16mm HD film chain. :p

> Nah, 400 ain't enough as the only ISO. There are times you need 800, sometimes due to location issues, sometimes due to a look. I mean I push 5219 (500T) a stop when I need to and it comes out great. I think 500 - 600 would be fine as a base ISO, but 400 is just a bit too weak.

OK, so use +6dB gain then. Either way, you have to live with some extra noise, or add DNR and live with lost details. 500 ISO is only about a 1/3-stop difference. Unless you're shooting on something like an Alexa, you're not going to get TRUE 800 ISO performance without compromise (I know what BM advertises; they're 400 ISO cameras). I find 400 is already difficult to shoot outdoors as the base ISO. You have to have a 3-stop ND just to get F16. If you want to open up to F8 or wider to get a sharper image, you'd better have a top-quality ND filter to avoid more IR contamination.
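The ND arithmetic can be checked against the "sunny 16" rule of thumb (in bright sun at F16, correct exposure time is about 1/ISO). This sketch assumes 24fps with a 180° shutter (1/48 s) and also prints the stop difference between ISO 400 and 500. Sunny 16 is only an approximation, so real scenes will vary.

```python
import math

def nd_stops_for_sun(iso, exposure_s, target_f, sunny_f=16.0):
    """Stops of ND needed to shoot a sunlit scene at target_f.

    Sunny-16 rule of thumb: at f/16 the right exposure time is about 1/ISO.
    """
    over_at_sunny_f = math.log2(exposure_s * iso)   # excess light at f/16
    opening_up = 2 * math.log2(sunny_f / target_f)  # stops gained by opening the iris
    return over_at_sunny_f + opening_up

# Assumption: 24fps with a 180-degree shutter, i.e. 1/48 s per frame.
for f in (16, 8):
    stops = nd_stops_for_sun(400, 1 / 48, f)
    print(f"ISO 400 at F{f}: {stops:.1f} stops of ND (optical density {stops * math.log10(2):.1f})")

print(f"ISO 400 -> 500: {math.log2(500 / 400):.2f} stop")
```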

> Meh, I love the look of large imagers.

Yet your main camera is a BMPCC? There's nothing wrong with S16. You can get shallow or long DoF, depending on your lenses. The image plane is large enough to get sharp images out of mediocre lenses, and really good lenses can be cheaper and more compact than 35mm lenses.

> S16 is 7.41x12.52mm and Academy 35mm is 16x22mm. S35mm 3-perf is what I shoot these days, and it's 14x25mm, which is exactly how it's presented digitally.

Don't forget the "guard band". The actual film apertures are slightly smaller than the available area to avoid problems (like seeing the sound track or perfs) due to gate weave on that $5,000 counterfeit Indian projector head they used at the $1 theater.

> Not me, I'm usually on the borderline of wide open. I love shallow depth of field.

A very common trend these days. I'm not putting words in your mouth, but I suspect most people do it as a backlash against the 1/4" and 1/3" sensors previously found in prosumer devices. My budget-minded contemporaries complained endlessly about not having selective focus in those days, and we (me included) fought tooth and nail to open our lenses up to F2, which made for a pretty crappy image. In retrospect, I should have been aiming for optimal image quality rather than "film-like". Getting back on track, it's gotten to the point of being ridiculous, with product reviews being shot on full-frame DSLRs with long lenses at F1.4, ensuring only 1mm of said product is visible at a time. I suspect people will consider shallow DoF a very dated look in the future, just like how the advent of cheap digital reverbs and keyboards makes a lot of 80s songs sound dated now.
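For a sense of scale on the F1.4 product-shot point, the standard thin-lens depth-of-field formulas are easy to run. The 0.03mm circle of confusion is a common full-frame convention, and the 85mm lens focused at 0.5m is an arbitrary example, not taken from any specific review.

```python
def dof_limits_mm(focal_mm, f_number, subject_mm, coc_mm=0.03):
    """Near and far limits of acceptable focus (thin-lens approximation).

    All distances in millimetres; subject distance measured from the lens.
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        return near, float("inf")
    far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far

for n in (1.4, 5.6):
    near, far = dof_limits_mm(85, n, 500)
    print(f"85mm at F{n}, 0.5m: {far - near:.1f}mm in focus")
```

At these settings the F1.4 case works out to only a few millimetres of acceptable focus, and stopping down to F5.6 roughly quadruples it.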

> Put it to you another way, I couldn't afford to make movies if I needed to travel with an old school ENG camera everywhere I went.

I admit ENG cameras can be a bit cumbersome, particularly the support. However, I don't think I've ever heard somebody say "Aw man, I can't fit my camera in my pocket, better cancel the shoot!". My prototype camera should be about the size of a GL1, so it should fit in a decent-sized bag and not draw too much attention from authorities on location.

-


> Rolling shutter can be partially compensated for in software, or by increasing the read/refresh rate of the sensor, which requires more powerful processors. Aliasing and moire can be fixed, either by an OLPF or by increasing the resolution of the sensor. Trying to fit high resolutions onto an S16-sized sensor is going to mean small pixels, which in turn means relatively low native ISO.

That's what we've been discussing. Software solutions take time and don't do a perfect job. It's better to make a camera that doesn't have the problem. As for the OLPF issues, the solution is to use an OLPF. It's the only way to get optimal image quality.

> The point is that all of these issues can be dealt with, just not for less than $1000. Honestly, don't you think someone would have done it already if it was remotely economically feasible?

Sure they can. There used to be several cameras on the market that had global shutter and were relatively free of aliasing for around $1,000. That was back in the CCD days, and CCDs cost about 5x as much as CMOS for equal size, so sensors were generally fairly small. With the new crop of global shutter CMOS sensors, none of which are being used in camcorders, there's no reason it can't be done with a somewhat larger sensor now.

The roadblock in the current market is that capturing in a conventional video codec adds a lot of processing (and licensing fees). Three color channels must be interpolated, gamma transformed, gamma corrected, white balanced, then converted to YCbCr, saturation boosted, knee adjusted, etc. If it's a better camera, a "black frame" average image is subtracted from the source to remove fixed pattern noise. Then it has to be converted into whatever codec they want. ProRes is not especially processor-intensive, but there are fees involved. H.264 is a published standard but requires massive processing power. All of that has to be done internally and in real time, so corners have to be cut elsewhere. It's actually CHEAPER to have a minimal processor running fairly little code to capture the raw signal, even if storage requirements are a lot higher. That doesn't bother me; I already have a couple of 240GB SSDs that could each give me about an hour of raw HD record time. It's really no different from the film days, when you had multiple magazines, except now we can dump an SSD to a laptop and reuse it on-site if need be.
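Two parts of this paragraph are easy to sketch: the black-frame (dark frame) subtraction, and the storage math behind "about an hour" per 240GB SSD. Both are simplified. The storage estimate assumes uncompressed, tightly packed single-channel Bayer data at 1920x1080 and 24fps, decimal gigabytes, and no file or container overhead.

```python
import numpy as np

def master_dark(dark_frames):
    """Average several lens-capped frames to estimate fixed-pattern noise.

    Averaging N frames cuts the random noise in the estimate by about sqrt(N).
    """
    return np.mean(np.asarray(dark_frames, dtype=np.float64), axis=0)

def subtract_dark(raw, dark):
    """Remove the fixed pattern from a raw frame, clipping at zero."""
    return np.clip(np.asarray(raw, dtype=np.float64) - dark, 0.0, None)

def record_minutes(capacity_gb, width, height, bits_per_sample, fps):
    """Minutes of uncompressed single-channel (Bayer) raw that fit on a drive."""
    bytes_per_second = width * height * bits_per_sample / 8 * fps
    return capacity_gb * 1e9 / bytes_per_second / 60

for bits in (10, 12):
    print(f"{bits}-bit raw HD on 240GB: {record_minutes(240, 1920, 1080, bits, 24):.0f} min")
```

Under these assumptions, 12-bit works out to a bit under an hour and 10-bit to a bit over, consistent with the "about an hour" estimate.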

-

> Wait, what about composite video and demodulating issues and dot crawl that was "the standard" until component digital, which still had lots of issues?

We've had viable ways to avoid composite video issues since the early 80s for production. Delivery may still be composite, but delivery media have always sucked and probably always will, though I find Blu-Ray can be quite good when done right. Even my first paid video productions in the 90s stayed component till the print.

> Single CCDs have HUGE problems. You make them out to be magical, but they still use a "color filter" to assign certain pixels to certain colors. They're also nowhere near as efficient as CMOS in terms of ISO.

I don't make them out to be "magical", just that they don't share all the problems of CMOS like you say. As for "efficiency" in ISO, CMOS achieves it by artificial means. Weak color dyes (not always, but in many situations) are used to let more light hit each pixel. Since every pixel has an amplifier next to it, it can't possibly gather light as efficiently as a CCD, but it makes up for that with internal gain, whereas CCDs are passive components, containing no gain of their own.

> Well, higher bit depth codecs and better chroma sampling make it a lot easier to color in post.

I find the current trend of creating the look in post tedious, and it produces a very alien image that can be outright nauseating to me. So, I might make slight tweaks to white balance or level, but that's it.

> Probably because modern cameras look so crisp and look so cinematic, they kinda ignore the issues.

I suspect "cinematic" is one of those meaningless buzzwords, like "warm" in the audio field. Many iconic movies were not crisp at all. Many DPs went out of their way to soften the image, especially on close-up shots, to the point where specialized diffusion filters were created to gradually soften the image while dollying into a tight shot of an actor.

> I don't know of any "industrial" 3 CCD camera that is 12 bit 444 color space and shoots 4k or UHD and captures RAW to allow full imager bandwidth into the post production bay. What about the large imager look? Can't do that with 3 CCDs. What about high ISO? Can't do that with 3 CCDs.

400 ISO isn't enough? Almost all of them have gain; you just don't get the baked-in DNR that CMOS cameras have. You're right in that there aren't any UHD CCD cameras (as far as I know), but 12-bit and 444 are somewhat common for industrial cameras. While single-chip cameras may have a 444 option, that's not what the sensor is outputting, so I'm not sure why it's that important. The HIGHEST-density color on a single-chip camera is green, which is only every other sample. The other colors are 1/4 resolution. These colors are merely averaged together to get complete color channels, then converted to luma/chroma channels. If you are using RAW capture, you have much better interpolation algorithms available on your computer, but you're still dealing with "educated guesses" based on localized and non-localized spatial analysis of the frame.
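The sample-density point can be made concrete by counting which pixel sites in an RGGB tile actually carry a measurement, and comparing that with chroma density (chroma samples per luma sample) in common subsampling schemes. This only counts samples; it says nothing about how well a given demosaic recovers detail.

```python
from fractions import Fraction

# Which color is physically measured at each site of a 2x2 RGGB tile.
RGGB_TILE = (("R", "G"), ("G", "B"))

def cfa_density(tile=RGGB_TILE):
    """Fraction of pixel sites that carry a real measurement of each color."""
    sites = [color for row in tile for color in row]
    return {color: Fraction(sites.count(color), len(sites)) for color in sorted(set(sites))}

# Chroma samples per luma sample (horizontal x vertical subsampling).
CHROMA_DENSITY = {
    "4:4:4": Fraction(1, 1),
    "4:2:2": Fraction(1, 2),
    "4:2:0": Fraction(1, 4),
}

density = cfa_density()
print({color: str(d) for color, d in density.items()})  # {'B': '1/4', 'G': '1/2', 'R': '1/4'}
print(CHROMA_DENSITY["4:2:0"])                            # 1/4
```

Red and blue are measured at the same 1-in-4 density that 4:2:0 uses for chroma, which is the basis for comparing single-chip output to 4:2:0.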

> Rolling shutter isn't an issue thanks to the software fixes and modern cameras having very fast imagers and processors.

If you think so, I trust that you have become numb to the issue.

> Nobody wants a S16 sized imager, everyone wants S35 minimal and MOST PEOPLE want a full frame imager to get that super "cinematic" look.

Bull crap. Marketing mooks may have the brainless masses convinced that VistaVision-sized imagers are the only way to get a "cinematic" look (there's that word again), but the fact of the matter is that the vast majority of film material has been produced in flat 1.85:1 35mm (21 x 11.33mm) and Academy (21 x 15.24mm). On top of that, most cinematographers fought to get more DoF, not less. Indoor shoots were routinely done between F5.6 and F8 unless there was a reason to do otherwise. I don't have the exact percentage, but very few movies originated on VistaVision or 65mm. The standard in Hollywood these days is S35. Bear in mind, almost all broadcast is done with 2/3" sensors (regular 16mm-sized), and you're personally pushing the BMPCC, which is S16-sized.

> Again, I don't think the D16 looked good at all. Yes it may technically be better, but the look of the imager isn't something I'd ever want to shoot with. Also, good luck getting a decent noise floor at over 1600 ISO, which is basically the minimal one needs these days.

Once again, it's your fault that you don't know how to grade the image. It's truly as clean and unprocessed as it gets. Do you not own lights? Why do you need 1,600 ISO for professional work? Have you not paid any attention to my remarks about CMOS cameras having DNR built into them? If you want a noiseless image at absurd ISOs, you need DNR, and the D16 doesn't do that automatically.

> But it adds a bunch of NEW issues that the Pocket doesn't have, including size.

If size is all that matters, keep your BMPCC.

> I'd say the top 3 reasons I own the pockets vs something else are: size, price, codecs. Size is pretty much everything for me because I shoot documentaries and I can't afford to have a big camera case to lug around.

OK. I've shot plenty of documentaries on conventional video cameras and even a CP-16 without issues.

-


> In terms of some of your complaints... I mean who cares? ... So ya either give in and realize it's never going to be perfect and your audience doesn't care or ya just muck around for a while and realize the only person who does care is you lol :D

I don't expect perfection by any means, but the fact that nobody cares about easily rectified problems that would have been considered inexcusable in pro video 15 years ago really tells how standards have changed.

How is it that we live in a time where obvious banding and macroblocking are worse than ever, yet we insist on having more than double the pixels the eye can see?

How is it that nauseating, gelatinous distortions caused by movement are acceptable in cameras that cost as much as my house?

Why do people insist on capturing with the "best" 10-bit or 12-bit CODECs to guarantee optimal image quality but it's OK to leave out a simple part that prevents strange colors/patterns?

Maybe I'm in the minority for being bothered by those things, but the fact that there are currently ZERO camcorders in the sub-$20,000 market that are free of those problems shows me that there's a niche market not being tapped. You just got me more charged than ever to try and make my prototype, and if even one person is willing to buy it, I'll consider it worthwhile. Imagine an HD camera with no rolling shutter, no aliasing/moire, raw capture, an S16-sized sensor, and pure color, all for $1,000. That sounds pretty much the same as your BMPCC, except free of all the annoying problems. Sure, it will be larger, but I'd rather have a camera I can hand-hold if needed. Is "pocket-sized" really worth all the problems that come with it? Maybe to you, but not to me; I can have that for free on my cell phone.

I got most of the parts I need on order now. Some of them are coming from England and the programming side is rather foreign to me, so it may be a while before I get to testing. I'll be sure to post results if I'm successful though.

-


Mixing tungsten and daylight on digital black and white

in Lighting for Film & Video


Just remember that red stuff will appear slightly darker under the daylight-balanced light, blue stuff slightly darker under the tungsten-balanced light, etc. But yeah, I wouldn't worry too much.
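The reason, roughly: tungsten emits far more red relative to blue than daylight does, so red objects reflect relatively more light under tungsten. This sketch compares black-body spectral radiance at 650nm and 450nm for 3200K and 5600K using Planck's law. Treating daylight as a 5600K black body is an approximation, and the two wavelengths are arbitrary stand-ins for "red" and "blue".

```python
import math

H = 6.62607015e-34   # Planck constant, J*s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K

def spectral_radiance(wavelength_m, temp_k):
    """Planck's law for a black body, W per (sr * m^2 * m)."""
    return (2 * H * C ** 2 / wavelength_m ** 5) / math.expm1(H * C / (wavelength_m * K_B * temp_k))

def red_to_blue(temp_k, red_nm=650.0, blue_nm=450.0):
    """Ratio of emitted 'red' to 'blue' energy for a black body at temp_k."""
    return spectral_radiance(red_nm * 1e-9, temp_k) / spectral_radiance(blue_nm * 1e-9, temp_k)

for name, temp in (("tungsten", 3200), ("daylight (approx.)", 5600)):
    print(f"{name:>18}: red/blue = {red_to_blue(temp):.2f}")
```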