Russell Scott

Posts posted by Russell Scott

-

-

That reminds me of a favorite quote:

"In theory, there is no difference between theory and practice. In practice, there is."

-- Hmmm, instead of giving everybody the name, let's guess who said it. ;-)

-- J.S.

*stokes fire*

The irony is that Yogi revolutionised the animation industry by introducing cheaper and more production-efficient techniques at the expense of established animation rules. Animation purists were outraged at this assault on standards and looked down upon such techniques as a cheapening of their craft. The public didn't care of course, caring only for expressive, interesting content...

Not that this has any relation to any of the discussions that happen so often here ... :D

-

This is really interesting. I'm just starting work on an animated short now and it's really fascinating. I was being told today that in Maya, light intensity from the virtual lamps works in a linear fashion, not by the 'inverse square law.'

To be clear - you generally have the choice between no decay, linear decay and inverse square decay. Having a light that decays physically (i.e. inverse square) is not the same as photometric (although of course photometric lights will all decay physically).
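To make the three options concrete, here is a minimal Python sketch of how intensity falls off with distance under each decay mode. The function and mode names are made up for illustration and are not Maya's API; the sketch only assumes the usual definitions (constant, 1/d, 1/d²).

```python
def light_intensity(base_intensity: float, distance: float,
                    decay: str = "inverse_square") -> float:
    """Illumination arriving at `distance` from a point light (hypothetical helper)."""
    if distance <= 0:
        raise ValueError("distance must be positive")
    if decay == "none":
        return base_intensity                  # same brightness at any distance
    if decay == "linear":
        return base_intensity / distance       # halves when the distance doubles
    if decay == "inverse_square":
        return base_intensity / distance ** 2  # quarters when the distance doubles
    raise ValueError(f"unknown decay mode: {decay!r}")


if __name__ == "__main__":
    for mode in ("none", "linear", "inverse_square"):
        # Sample the falloff at 1, 2 and 4 scene units from the light.
        print(mode, [light_intensity(100.0, d, mode) for d in (1.0, 2.0, 4.0)])
```

Only the inverse square mode matches how a real point source behaves; the other two are artistic conveniences.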

-

On big features you'd have specialist lighters. They would light an animated feature like you'd light a live action set. You have lights that you place in 3D space and give an intensity and a colour. Most 3D systems have photometric lighting setups, so conceptually it's pretty similar.

In some respects it's easier than live action, because you have no physical limitation on light placement (e.g. you can light a scene with a light in front of the camera but hide it from the camera so the light itself is invisible).

In other respects it is much harder: the 'photometric' nature of the lighting is based entirely on approximations, and that leads to much wrangling to get the desired result. You also don't get instant feedback, so when you move lights around you don't see how that light has affected the scene; you have to crunch the numbers on the render machine to get the result (which could take hours).

-

It's good how a potentially useful discussion can get ruined by a COCK fight.

Posting in a public forum, with your whole name, about a film you're working on and then casting aspersions on the director's abilities was never going to end well. It wouldn't take 2 seconds to find the director involved - airing grievances in this manner is not OK.

With friends like these...

-

:o Really??? What?

Hugo Cabret

-

In this instance though, if instead I digitally converged the shots so that the foremost element was whatever is closest to the camera, do you think that would be worthwhile? Meaning it would produce the window effect, even with the hyper-stereoscopy. I'd imagine the converged section of the frame being not what the content of the scene asks you to focus on would also produce a lot of eye strain....

When shooting in stereo are we virtually forced to never include anything in the foreground that isn't contained perfectly within the frame, and is that just a nature of the beast? What are your thoughts?

I don't think it's worth converging the shots, simply because the extreme nature of the stereo in the shot means it won't help. In this case it isn't just the foreground elements that are the problem. Often there are objects captured in one eye and not the other, or captured with very different perspective vanishing points; converging the stereo differently won't help.

The general answer is yes, you are forced to never include objects coming out of the screen. You can do it occasionally with quickly moving objects because the mechanism with which the eye tracks objects is slightly different at pace (poor explanation, sorry!).

You can also get away with objects in front of the screen if your stereo is minimal, but it's best to avoid having them cut the screen edge (left or right sides), and even then it's generally inadvisable.

-

You mention wanting to avoid elements coming out of the screen - would it perhaps be less distracting, considering this scene is already shot, to digitally adjust the convergence to the foremost element, and let the viewer converge their own eyes to focus farther into the screen where the actors may be? I'd imagine that would be even more distracting (with this given footage) and the only solution is to keep that sort of thing out of the frame altogether henceforth, unless it's totally contained in the shot?

It's not really going to help that much; adjusting convergence only changes where the depth starts and stops, not how much depth you've locked into the scene. In this case the problem is the depth and there isn't really a 'post' solution. Having said that, you very rarely want objects coming out of the screen. Generally you want to be staring 'into a window'. For various reasons images coming out of the screen are more taxing than those going back. Pushing convergence too much also has other problems, especially when you have strong perspective cues (and your convergence doesn't match those cues). You can see that when she opens the drawer at 0:12. The perspective strongly conflicts with the convergence you've chosen (best shown using the 'parallel' mode on YouTube).

At a glance I'd say your stereo is a minimum of about 10x too strong, and for some of the close scenes about 50x. One trick you can use to get away with having too much stereo is to film with a close background, so that even though the depth is too strong, the amount of depth visible in the scene is limited (this is probably why it worked when you 'blew up' the image; generally, digitally zooming in stereo simply increases the overall stereo)...
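A small Python sketch of the two effects described above, treating each scene element as a signed horizontal parallax in pixels (negative means in front of the screen). The helper names and sample values are hypothetical; the point is only that a convergence change adds the same offset to everything, while a digital zoom multiplies everything.

```python
def shift_convergence(parallaxes, offset_px):
    """Re-converge in post: every parallax moves by the same amount."""
    return [p + offset_px for p in parallaxes]


def digital_zoom(parallaxes, factor):
    """Blow the image up: every parallax is scaled with the picture."""
    return [p * factor for p in parallaxes]


def depth_budget(parallaxes):
    """Total spread between the nearest and farthest element."""
    return max(parallaxes) - min(parallaxes)


if __name__ == "__main__":
    shot = [-40.0, 0.0, 60.0]  # made-up foreground, screen plane, background
    print("original budget:", depth_budget(shot))
    print("after re-converging:", depth_budget(shift_convergence(shot, 40.0)))
    print("after 1.5x zoom:", depth_budget(digital_zoom(shot, 1.5)))
```

Re-converging pushes the foreground back to the screen plane but the spread is untouched, which is why it cannot rescue a shot whose depth is already too strong.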

-

Hello all!

Here's a test scene I shot with a home-built stereo rig and two DVX100s side-by-side. You'll need to go to my actual channel to be able to access the drop-down list to choose your ideal method of watching it in stereo - I think it defaults to cross-eyed, which is probably the best way if you can get your eyes to work like that.

I'm hoping to shoot more 3D! I'd love to hear thoughts on what you feel works and what you feel doesn't visually in this piece.

Best

Mike

Hi Mike, what is your intended transmission format? i.e. do you hope to put this on a TV or screen? If so, your camera separation is too much. A DVX rig is about 13cm side by side (?), which means for semi-comfortable stereo on a TV you'll need the zero parallax at ~4m away (and nothing in front of that!). For close character shots with a DVX you'll definitely need a mirror rig (you can get suitable ones for the DVX for about 3k I think).

You've also chosen a lot of shots with strong foreground elements, more specifically, elements coming out of the screen. You want to minimise this as much as possible, especially with strong stereo. The example I'll point to is at 2:40. The foreground element is both way too strong and acts as a distraction: your eyes are drawn to it rather than the action. Also, as you pan the camera left and right, the foreground element cuts the screen, which - particularly for foreground objects - is not good. At 2:51 you have an element in one eye and not in the other; this = pain for the viewer. You also need to watch your reflections - in a couple of the shots there are stray reflections in one eye and not the other.

hope that helps in some way...

-

Got a chance to play with a live 3Ality rig in Bexel's booth today. Learned you absolutely don't want to change interocular within a shot but convergence can be pulled creatively.

Hi Hal,

I've done this a few times. It's particularly effective with a vertigo shot. It's much harder to do with a physical camera of course, and if you don't want to noticeably stretch the depth you need a distinct kind of shot to use this on, e.g. going from a long shot to a close-in shot... "flying over a bunch of buildings then flying down to see our hero walking along the street.. camera ends up framing his face". It is possible to smoothly transition from showing the depth of the buildings to the depth for a closeup.

but generally you wouldn't want to do it...

-

Hi Herc.

The ideal interocular distance is about 64mm or about 2 1/2 ". That's the average distance between human eyes.

jb

just to expand on this. The ideal i/o isn't 64mm for the camera - you want an apparent 64mm separation on the projected image. The bigger the screen and the closer the audience sits to that screen, the closer together your cameras will need to be (this also depends on what your camera is looking at and where that object is in relation to the camera). The i/o of the cameras should be chosen with some reference to the medium it's projected in, e.g. a 64mm camera separation might be fine for TV but not for cinema. This is one of the main reasons people get pain in cinemas - the i/o that is chosen is far too much.
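A rough Python sketch of why the same stereo pair behaves differently on different screens. It assumes the ~64mm eye spacing quoted above as the largest on-screen separation a distant background can have before the eyes are asked to diverge; the screen widths and the 3% parallax are invented for illustration.

```python
EYE_SEPARATION_M = 0.064  # the ~64 mm figure quoted above


def max_background_parallax_fraction(screen_width_m,
                                     eye_separation_m=EYE_SEPARATION_M):
    """Largest background parallax, as a fraction of picture width,
    that still lands at or under the eye separation on this screen."""
    return eye_separation_m / screen_width_m


def on_screen_parallax_m(parallax_fraction, screen_width_m):
    """Physical separation produced by the same image pair on a given screen."""
    return parallax_fraction * screen_width_m


if __name__ == "__main__":
    shot_parallax = 0.03  # background parallax = 3% of picture width (made up)
    for name, width_m in (("TV", 1.0), ("cinema", 12.0)):  # assumed widths
        separation = on_screen_parallax_m(shot_parallax, width_m)
        verdict = "ok" if separation <= EYE_SEPARATION_M else "eyes must diverge"
        print(f"{name}: {separation * 1000:.0f} mm on screen -> {verdict}")
```

The camera separation that produced a comfortable 3% on the TV has to come down by roughly the ratio of the screen widths to stay comfortable in the cinema.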

and as a separate point I would also argue against trying to mimic perfect depth and go for the less-is-more approach, though that's just my opinion...

-

If you're worried about finding work in film you really should look into a different field.

R,

but I worry about it daily. :blink:

:lol:

-

This sounds interesting, here's an article:

http://www.televisionbroadcast.com/article/88078

I'm wondering how they deal with interaxial distance....

-- J.S.

if I were to guess, the left and right images are only using a fraction of the lens, and the CCD will be small too. This would act as a separation multiplier. Or, since it's likely this is only for close action, perhaps it will only have a maximum effective separation of ~1-2cm and you need to have a long lens on it....

but I'm only guessing. very interesting

-

LCD projectors do polarise the light. There are ways to use them, but there are more problems. DLP is the way to go.

for the rest it depends on how fussy you are.

it is *possible* to watch stereo via a white screen, but it's generally going to be terrible, with lots of ghosting. It's the same with cheaper filters (careful not to burn your filters) - you get more ghosting. You can even paint a wall with aluminum-flake paint and you'll get a reasonable result, but silver screens are the only way to ensure a good result.

-

Hi Russel,

thanks for your reply.

As you know, there are different points of view. I prefer not to toe-in and to do it in post, but there are other stereographers who prefer to do it in shot. This was the reason for my question.

I don't understand your last sentence (about the film plane). Could you explain it to me please?

My question was: what is the right point (pivot/rotation point) to toe-in the camera?

All the best!!!

yes I figured you'd say that (about the toe-in / no toe-in :P )

my answer was that, as long as we're using the same phrases, the film plane is the correct rotation point. (I've never called it a 'film plane', so I'm assuming that's what you mean by it.) Having said that, your keystone won't be improved, but your stereo would be.

Keystoning is a result of the vertical parallax that toe-in introduces, so while the keystoning would be more accurate when you identify the correct rotation point, it doesn't follow that by rotating correctly your keystoning would be reduced.

an example would be if your original rotation point caused you to effectively rotate the cameras *less*. if that happened, your correct toe-in would increase the keystoning.

-

Hello,

special 3D stereoscopic question:

is it better to toe-in on the lens' nodal point, film plane or camera body?

If the convergence (toe-in) can be adjusted on the lens' nodal point or film plane, will the keystone effect be improved?

Thanks a lot :rolleyes:

can I suggest you don't toe-in at all? then you'll have no keystone effect...

if you do toe-in, you'll need to find the film plane - assuming we're using the same terminology :lol:

-

Not quite. That means they'd need to sell 5x as many to get the same amount of money.

Remember, VHS was $29.95/copy when it first came out.

The lack of understanding of simple business on this site is staggering. You people all work in the industry, right? Chad? How do you actually feed yourselves with ideas like these? That Starbucks job on the side in addition to your "real" job? :ph34r:

actually that's not true either... :P

for one thing your expenses are greatly lowered, your middleman is removed and in some countries taxes are less.

-

There is another problem though, isn't there? If optimal seating is in the middle and at least half way back, doesn't that mean that over half the audience are losing out?

And forgive my ignorance, but is the 'screen size':'depth of cinema' ratio always the same? I thought there was some variance, which in turn would affect the best seating position in a 3D screening..? I had originally expected someone to say something like "sit 3x the width of the screen away."

that's yes and yes! In relation to the last question, generally you find that up the back(ish) gives the best result, not just for the width/depth ratio but also because you are higher up and hence the image perspective and the stereo that your brain generates are closer. The problem is that many theatres aren't designed for stereo; they are simply converted to stereo projection. This lack of standardisation - coupled with the fact that many don't even realise that screen size makes a difference - makes it hard to give a direct answer of where exactly to sit.

-

Yes, yes, I know, everything's perfect and you've solved all the problems and I'm just wrong. But:

You keep saying that. I'll keep throwing up. Deal?

P

Actually I've clearly stated there are problems. What you're describing as the problem is in fact *not* the problem. There is so much misinformation about stereo - I'm trying to help address that problem, I've posted in a number of these threads.

Exaggerating the stereographic effect on, for example, a landscape, will not produce strain, unless that exaggeration is done incorrectly.

As I hinted, I don't really get your position. If a car company produces a blue version of a car you prefer in white, surely the option you take is just to buy the white one? Why complain about them giving you options?

-

The best place to sit is generally close to the back - this will put you in the original position for the stereo images. In other words you are trying to put yourself the closest you can to the 'camera view', it will also minimise any gain created by the silver screen (if it has one). having said that it is possible to change the 'sweet spot' of a cinema to any point at all, not that cinemas will do that - just that you can.

Phil is correct that there could still be problems, but is very wrong in his description of them!

The separation/convergence of the cameras has nothing to do with the problems viewing stereo images causes. Yes, you can exaggerate stereo, but the eyes are easily capable of dealing with that (your eyes can converge from your nose through to directly parallel, so no amount of extra convergence will tax them). The problem is the focusing mechanism of the eyes. The eyes must gather their 'stereo' information from the screen; if they then must converge on something with too much stereo, they cannot maintain their focus on the screen (but will try to) and thus the eyes are strained.

It's actually very easy to control - there is a zone in which the eyes do not strain in their focus, but like cinematography there are people who are good at it and there are people who are bad at it.
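The focus-versus-convergence mismatch can be put into rough numbers with similar triangles. This is only a sketch under simple assumptions (a viewer square-on to the screen, 64mm eye spacing, positive parallax meaning behind the screen); the function names are hypothetical, and no particular comfort threshold is implied.

```python
import math


def perceived_distance_m(viewing_distance_m, screen_parallax_m,
                         eye_separation_m=0.064):
    """Distance at which the eyes converge for a given on-screen parallax."""
    if screen_parallax_m >= eye_separation_m:
        return math.inf  # lines of sight are parallel or diverging
    return (viewing_distance_m * eye_separation_m
            / (eye_separation_m - screen_parallax_m))


def focus_vergence_mismatch_dioptres(viewing_distance_m, screen_parallax_m,
                                     eye_separation_m=0.064):
    """Gap between where the eyes focus (the screen) and where they converge."""
    converge_at = perceived_distance_m(viewing_distance_m, screen_parallax_m,
                                       eye_separation_m)
    return abs(1.0 / viewing_distance_m - 1.0 / converge_at)


if __name__ == "__main__":
    seat = 10.0  # metres from the screen (made-up value)
    for parallax in (-0.064, 0.0, 0.032):
        print(parallax,
              perceived_distance_m(seat, parallax),
              focus_vergence_mismatch_dioptres(seat, parallax))
```

Zero parallax gives zero mismatch because the eyes converge on the screen they are focused on; the further the parallax strays from zero, the bigger the gap the focusing mechanism has to fight.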

In any case, 3D is a sham. If it was really 3D, then it would matter where you sat, as every seat would give you a different view of the scene.

Yes. This is 2.5D at best.

for stereo, it does matter where you sit (but obviously not in the way you are meaning), since every seat will give you a different stereo parallax. The disparity of the stereo parallax vs the actual image parallax is why the side seating is less than ideal.

Well, bollocks, it still makes me feel sick and that's not my fault. I can only hope that the major 3D systems start offering people the option of glasses with two identical lenses so that we don't have to suffer this literally mindbending mess if we don't want to.

why not just go to 2D screenings of the same movies, then you won't even need the glasses?

:lol:

-

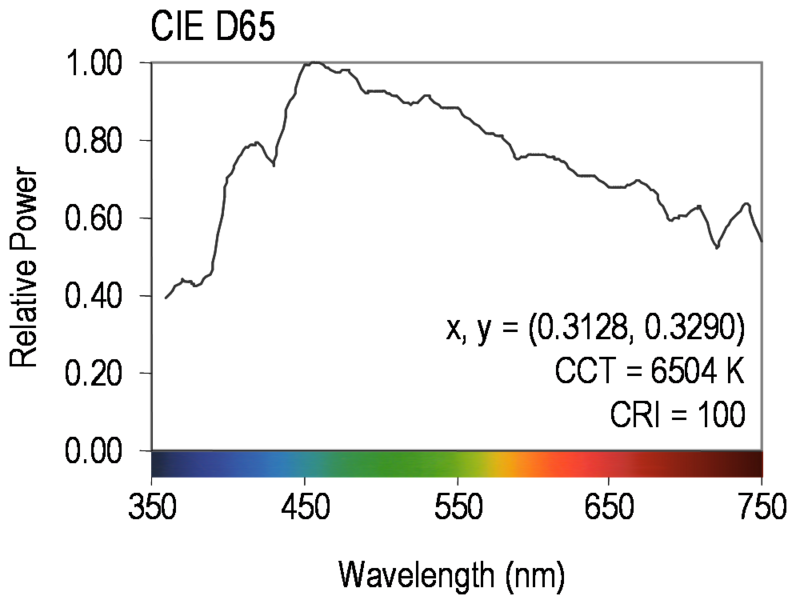

Is there an exact white point for the eye? I'm talking about reality and not about standards. Would an "eye signal measurer" (imagining it exists) be able to differentiate between an eye that is receiving pure D65 light and one which is receiving a slightly green light, based on the "quality of the green signal", and report "it is not receiving pure D65 light"? Then isn't staring at the screen for long times much worse than adjusting your eye to a little green?

I am talking about an environment with a "green", let's say, near X=0,3 Y=0,35 or maybe a bit more (watching the C.I.E. graph and supposing how far this "supposed green" might be from D65). (Also watching the CIE graph and tracing a circle of all the similar color hue shifts from D65, this difference could be around 6000ºK if talking about blue shift instead of green.)

ok i'll bite again.

the D65 standard comes from the averaged spectrum of midday sun. The D65 standard isn't pure light (pure light would be a constant gradient of all wavelengths). It's the average spectrum of light that most closely mimics midday. However, you've answered your own question too by quoting the two effects I linked: there is no exact white point for the eye; it changes according to the intensity of the available light. You do however need a common point to work from, and D65 has been chosen (for other reasons, not just relating to film). The 6500K holy grail is actually 6504K; it's determined by theoretical physics relating to black body radiation, all of which is artistically irrelevant. Having said that, by choosing to stray from the standard you are choosing to risk imperfections in your work.

Again, it all depends on your need/want for perfectionism. My films get projected into theaters that don't calibrate their projectors, thus each theater has a substantially different setup - i.e. there's little point in me losing sleep over a 500K difference. The larger the budget, the more controlled the environment, the more I'll care!
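For the curious, the 6504K figure mentioned above is usually explained as a rescaling: the nominal 6500K was fixed using an older value of the second radiation constant in Planck's law, and the constant was later revised. The post doesn't spell this out, so treat the sketch below as that commonly cited account rather than the poster's own derivation; the constant values are the ones normally quoted and the names are hypothetical.

```python
# Second radiation constant c2 (metre-kelvin) in Planck's black body law.
C2_WHEN_D65_WAS_DEFINED = 1.4380e-2  # value in use when 6500 K was chosen
C2_REVISED = 1.4388e-2               # later revised value


def rescaled_colour_temperature(nominal_kelvin):
    """Colour temperature of the same spectrum under the revised constant."""
    return nominal_kelvin * C2_REVISED / C2_WHEN_D65_WAS_DEFINED


if __name__ == "__main__":
    print(round(rescaled_colour_temperature(6500.0)))
```

The spectrum itself never changed, only the number attached to it, which fits the point that the exact figure is artistically irrelevant.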

-

I already said, my question is not about things that are written on standards or books. I try to give it another perspective.

I feel my questions go a bit deeper in the matter. It's more related to this question:

It seems, as I've read it was stated on the other forums, you're here to tell people you're right, not learn or enter an actual discussion.

you're starting from an incorrect theoretical standpoint, and then trying to draw conclusions from that.

The "standards" that you are trying to ignore exist to try to prevent you from making the bad assumptions that you have made.

If mom's TV's image is reddish, then her eye will get used to it, and she'll be in the standard, and her brain will not always say "how red!".

so grade for mom's TV, but of course Dad's TV is neutral, and because you graded for a red TV your grade will no longer work. Worse, Bob's TV is bluish, so your grading will appear even more significantly wrong on his TV.

If you grade for a specific broken, non-standard system, then when they fix that system, your work won't be graded correctly - you have no longevity of work.

all your questions are answered in my above post: look at the spectral power distribution of the D65 standard vs different light sources. This will determine your ability to maintain a consistent grade, since you have a more consistent spread of wavelengths being emitted by your room's light source, i.e. you will be better able to judge the composition of wavelengths in the image being corrected. (if you look at the peak power, it more or less corresponds to the weakest response from your eyes, as in this link: http://upload.wikimedia.org/wikipedia/en/1...ones_SMJ2_E.svg )

you've also talked about what seems to be a dynamic colour grading. i.e. Bringing in more colour over time.

Again, "getting used to" a colour does not mean "seeing it as white".

the real questions that should be asked: does this match the narrative? Should the audience be subjected to more colour if the script calls for less? Obviously no. These decisions should be up to the director/cinematographer, or more holistically the story, not driven by the concern that the audience won't "see" the colour.

i'm going to leave this discussion now, lest i get argumentative :blink:

-

So, what I say:

First. Comparing 2 situations:

- Slightly green backlight - slightly green display. Both the same. (So slight that the eye gets used in 15 seconds)

- Daylight backlight, daylight display. Both the same.

I'd say that as every human being's eye balances the colors, then both situations should be the same, or almost (not something significantly different). And I say this because my last backlight was greenish, then I corrected the levels of the LCD to match it, and then I tried correcting a grey card with blacks and whites, and I got good results (when checking with the vectorscope).

Can someone contribute something about this? Pros? Cons? (Apart from "filter the light and correct the screen" or "It's not in the standards, that's why you should never correct there"). I think that the one who can answer this must be someone with experience, not in reading SMPTE publications, but in experiencing the real thing.

i.e.

- Does the "vision quality" lower when the eye is not exactly at D65? Why?

- A green alien shown on this greenish LCD with the greenish backlight will look greener than normal. But as the eye gets used to the environment, the alien will look the same level of green as it would in a perfect SMPTE environment. Wouldn't it?

--

Shouldn't we grade thinking about what the viewer will actually see? If their eye will get used to our slightly blue scene, then why should we use the same grading for the whole scene? I know, I know, "the client will not trust the colorist". But I say again, I am talking about my situation, or a student's situation.

I think of these ideas:

- Always putting a white reference in shot, like a white window or something.

- Putting a whiter shot in the middle of same color scenes so the eye "refreshes".

- Increasing the color as the time goes by.

- Put a medium grey frame around the image and a grey slug every 30 seconds :P

If mom's TV's image is reddish, then her eye will get used to it, and she'll be in the standard, and her brain will not always say "how red!".

I am obviously talking about the normal color shifts we see on consumer TVs, not about TVs with technical problems.

I really hope to find someone who can answer this with good manners, I don't want to argue.

Thanks :)

Rodrigo

there is a lot wrong with your approach, and I think with the attitude you took to Creative Cow.

a simple wikipedia search would have told you all you need to know really.

you've started by assuming the daylight vs green backlight will have the same intensity, which is patently not true. Apart from being a different power source (sun vs light bulb) they are different wavelengths, different mixes of wavelengths and have different energy levels, thus your ability to judge contrast/luminosity will be compromised.

likewise, your eyes use different cones when viewing different wavelengths, thus by viewing it in a non-neutral environment you are shifting the ranges being viewed by the eye.

Choosing different colour environments changes where on this graph your white point occurs (the graph depicts the wavelength response of the three cones systems your eyes have)

http://upload.wikimedia.org/wikipedia/en/1...ones_SMJ2_E.svg

read up on this

http://en.wikipedia.org/wiki/Purkinje_effect

http://en.wikipedia.org/wiki/Kruithof_curve

If you don't calibrate both your computer and monitor properly, how will you know what the result is? E.g. you calibrate your computer (in hardware) to 2000K but then adjust your monitor to give you a nice white point in your greenish room. Your nicely white CC will in fact be calibrated to 2000K. Your implicit assumption is that your computer has a natural white point; you also assume that your cheap monitor gives accurate temperature results.

you also haven't considered chromatic adaptation. You have made an assumption that "getting used to" = "identifying colour as white"

An object may be viewed under various conditions. For example, it may be illuminated by sunlight, the light of a fire, or a harsh electric light. In all of these situations, human vision perceives that the object has the same color: an apple always appears red, whether viewed at night or during the day.

another point is that without balancing in a neutral environment, you can quite easily be fooled by whatever object you are looking at; you will be less sure of the colour.

you also haven't thought of the colour space compression - let's take this example to the extreme. You're in a room with a colour temperature of 15000K and you adjust your monitor to reach that; your computer is nicely balanced however. So at 15000K you have got a perfect white point. Only there is a limit to what human eyes can perceive (see above graph), and by shifting that white point, you have shifted the colour space closer to the limit of what the eye can perceive towards one end of the spectrum and have elongated the apparent colour space in the other. Thus your ability to accurately identify the colour space is compromised.

the thing is, you've probably only done this in a mildly different situation, so yeh it doesn't make a lot of difference, and what you're describing is no different to white balancing a camera. I guess it really just depends on your level of perfectionism/puritanism: if you are happy to work with a reasonable estimate of the result, rather than a direct image you can confirm as the result, then there's no problem.

If on the other hand you are trying to say that neutral environments are irrelevant as long as you balance the monitor to the light source, then you are wrong.

-

I've never been entirely clear on this.

I am reliably informed that binocular vision does not really operate much beyond about thirty feet; human optical mechanics are not sufficiently sensitive to differentiate any more subtle degrees of convergence than that.

The screen is more than about thirty feet away.

Is it not therefore inevitable that the entire concept of theatrical 3D is based on having to force the effect to work when it really shouldn't, forcing the eyes to converge at a distance different to that which they're focussed to, and is this why it always, even when done very well (Beowulf at the Arclight Hollywood would be a "very well done" example) gives me a blinding headache?

go back to the angular separation I talked about. yes the screen is X feet/meters away but what is actually important is what distance the eye 'thinks' it is. you can fake any apparent depth you want, simply by adjusting the separation of the cameras.

Remember, the eyes are not picking up the depth of the room, they are picking up the depth of the image.
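A short Python sketch of the angular separation idea: what the eyes respond to is the angle between their two lines of sight, and a stereo image can present whatever angle you like regardless of how far away the screen is. It assumes a 64mm eye spacing and a target straight ahead; the function names are hypothetical.

```python
import math


def vergence_angle_deg(distance_m, eye_separation_m=0.064):
    """Angle between the two lines of sight when fixating at `distance_m`."""
    return math.degrees(2.0 * math.atan(eye_separation_m / (2.0 * distance_m)))


def distance_for_angle_m(angle_deg, eye_separation_m=0.064):
    """Inverse: the distance the eyes 'think' they see for a given angle."""
    return eye_separation_m / (2.0 * math.tan(math.radians(angle_deg) / 2.0))


if __name__ == "__main__":
    for metres in (0.5, 2.0, 9.0, 30.0):  # sample distances, chosen arbitrarily
        print(f"{metres:5.1f} m -> {vergence_angle_deg(metres):.3f} degrees")
```

The angle shrinks quickly with distance, which is the grain of truth in the thirty feet remark, but since the image sets the angle, the room's real depth is not the limit.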

The focus issue is very much the problem with stereographics: your focus is different to your convergence, unlike in reality.

There are tricks to fix this, but they are expensive, time consuming and difficult to achieve...

Having said that, 'very well done' stereographics won't give you a headache, but your margin for error is very small. A single bad shot can strain the eyes, and the strain compounds throughout a movie. It's especially problematic with fast motion strobing. Even with good stereo, the strobing will hurt the eyes - this is why James Cameron wants 48fps; strobing is a fundamental problem. Motion blur helps, but the amount you need to stop the problem can destroy the shot.

-

Since the human eye is the equivalent of less than 12mm, IIRC, wouldn't longer lenses need to be farther apart, not closer together?

One other little nitpick, I said 3 inches, not 75mm. So the figure I quoted is 76.2mm if you want to insist on using metrics :P

no, it's the opposite. The wider the angle, the further apart you can have the cameras (to a point). This is because the eyes have two mechanisms working: one is toe-in, the other is focus. This is really easy to explain in person but quite hard to explain on paper.

Imagine the depth expands as you reduce your FOV, thus your 'safe to film' zone needs to compress too (or you reduce the separation).
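One way to see it on paper is the usual pinhole approximation for a parallel rig converged by shifting the images: parallax grows in direct proportion to focal length, so a longer lens (narrower FOV) needs proportionally less separation for the same result. This is a sketch under those assumptions only; every parameter name and sample value is invented for illustration.

```python
def screen_parallax_m(focal_length_mm, interaxial_mm, convergence_m, object_m,
                      sensor_width_mm, screen_width_m):
    """On-screen parallax of an object (positive = behind the screen plane)."""
    # Disparity on the sensor in mm; scene distances converted to mm first.
    disparity_mm = focal_length_mm * interaxial_mm * (
        1.0 / (convergence_m * 1000.0) - 1.0 / (object_m * 1000.0))
    # Scale from sensor width up to screen width.
    return disparity_mm / sensor_width_mm * screen_width_m


if __name__ == "__main__":
    base = dict(interaxial_mm=64.0, convergence_m=4.0, object_m=20.0,
                sensor_width_mm=24.0, screen_width_m=10.0)
    wide = screen_parallax_m(focal_length_mm=25.0, **base)
    tele = screen_parallax_m(focal_length_mm=50.0, **base)
    # Halving the separation on the long lens restores the original parallax.
    tele_fixed = screen_parallax_m(focal_length_mm=50.0,
                                   **{**base, "interaxial_mm": 32.0})
    print(wide, tele, tele_fixed)
```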

James Cameron Says The Next Revolution in Cinema Is…

clearly... :)