Ivon Visalli

Posts: 34

Posts posted by Ivon Visalli

-

Good luck, Samuel. If you ever make it south down Long Beach way, give me a holler.

-

Oh, now this is getting good...

-

That attitude puts you in the minority of newbies. Most of the newbies that I've met haven't shown much interest in developing their skills.

I also don't believe that talent is innate, regardless of the connotation.

So, that's exactly the point I want to challenge, Rakesh. Is it really your experience that most (more than 50%) of people who use their gear to get work are uninterested in developing their skills? That's not something I've experienced. Most of the camera people I meet tend to be camera and film freaks of one sort or another. Their skills and knowledge definitely vary, but I still see a passion beyond just getting gear and getting gigs. I mean, if you just want to make money, there are more straightforward ways than production.

I will say I see a lot of posturing on sets, where people tend not to show their cards as to experience or knowledge gaps, but I will forgive that, as anytime you're working you tend to do everything you can to keep working. In general, I keep hearing about camera dilettantes, but I have yet to meet one.

-

Agreed... usually the people who get hired that way aren't very good, because they bought the gear to take advantage of people who hire for gear rather than talent.

Please note that there are some of us who intentionally use gear in lieu of talent because we're not there yet. I hope to be a very talented cinematographer some day, but I am not there yet. Maybe "skilled" would be a better term, as "talent" often implies innate ability. So, I hope to be a skilled cinematographer some day. Until then, I must work for free/cheap and give my gear away to continue to perfect my skills. I don't think we should disparage those of us taking this route. An interesting perspective from Matt Workman...

-

I also like depron and use it in front of some open-face Lowels (you have to keep it from getting too close to hot lamps, as it will melt). It is a bit of a light hog, and I see at least a stop of light loss when using it, but it has a very nice diffused quality that I like. I had never seen it used or heard of it until last year. Fortunately, I was able to get a few sheets of the 3 mm. I didn't realize they had discontinued selling it. Does anyone know of any other source, or if RC will bring it back eventually?
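Since a stop is a factor of two, "at least a stop of light loss" means the depron passes half the light or less. A quick Python sketch for turning stops of loss into transmission (the function name is just illustrative, and the stop values in the loop are examples, not measurements of depron):

```python
def transmission_from_stops(stops_lost: float) -> float:
    """Fraction of the light that gets through a diffuser costing `stops_lost` stops."""
    # Each stop is a factor of two, so transmission = 2 ** -stops.
    return 2.0 ** -stops_lost


for stops in (1 / 3, 2 / 3, 1.0, 1.5):
    print(f"{stops:.2f} stop(s) of loss -> {transmission_from_stops(stops):.0%} transmission")
```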

-

Rather than losing 2/3 stop by covering the zebras in CTO, they began using tigers. I think there was a special position created within 600 for those camera ops.

-

Thanks for the info, Satsuki. I also just finished the Art Adams article about headroom that you posted. Great read.

-

Can anyone recommend a good shoulder pad for moving a tripod with the camera mounted? I have a shoulder pad for handheld work, but it requires strapping it on. I'm looking for something that hangs from or is secured to the tripod for quick moves. The tripod legs I'm using are the Sachtler ENG-2.

-

In some rare instances a distributor will package a number of shorts together and distribute them as a "shorts collection". In general, you need to have been recognized at a film festival or fit the theme of the collection (like horror shorts). Here's one example:

-

A few thoughts:

Polarization - basics of light polarization, polarized reflections and polarizers (circular and linear) and how they work

De-bayering Algorithms - advantages and disadvantages of different de-bayering algorithms

Lossless compression - how raw compression works and how it stays "lossless"

-

Look at the movie "Savate". Although shot in the US, it's directed by one of your countrymen: Isaac Florentine.

-

Some good suggestions here already. If you do meet with him, I would also suggest making notes after your meeting about what was discussed. I would also make sure you have an e-mail trail that shows that you arranged a meeting and if/when you met with him.

I wouldn't be overly concerned about him ripping you off, but it can happen and you want to take reasonable precautions in case something does occur.

-

Some interesting commentary on the movie "Truth" from cinematographer Mandy Walker.

http://variety.com/video/truth-cinematographer-mandy-walker-video/

-

http://professorfangirl.tumblr.com/post/108768550489/everyframetellsastory-everyframetellsastory

The above link goes to an article about the framing of Watson's psychiatry sessions in two episodes of "Sherlock".

Nice article. Thanks for that.

-

Hi Michaela,

Thank you for that information. I agree, I don't like the manufactured rain covers and would prefer something simpler. I also work in Los Angeles, so I have had very little use for one. Even when I worked in NY, we moved to a cover set on the rain days we had (though a tarp was used to cover camera and gear if it ever drizzled). I appreciate your tip about using clear plastic, thanks.

-

That's really nice stuff, Carl. It really shows the core issue of bit depth and dynamic range. Low bit depth doesn't stop the dynamic range from being expressed, but it does limit the fineness of the steps between black and white.
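A minimal Python sketch of that point, assuming the signal has already been normalized so the camera's black point is 0.0 and its white point is 1.0 (however many stops apart those are). Black always lands on code 0 and white on the top code; bit depth only changes how many steps exist in between.

```python
def quantize(signal: float, bits: int) -> int:
    """Map a normalized signal (0.0 = black point, 1.0 = white point) to an integer code."""
    max_code = 2 ** bits - 1
    clamped = min(max(signal, 0.0), 1.0)
    return round(clamped * max_code)


# A dense, smooth ramp from black to white (10,001 samples).
ramp = [i / 10000 for i in range(10001)]

for bits in (2, 10):
    codes = [quantize(s, bits) for s in ramp]
    print(f"{bits:>2}-bit: black -> {codes[0]}, white -> {codes[-1]}, "
          f"distinct levels = {len(set(codes))}")
```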

Your two left-side images show that a bit depth of 2 didn't stop the higher dynamic range "camera" from finding white and gray more accurately (I'm especially looking at the white on the bridge of the nose with the lower dynamic range image). Once in post with our friend dither, the image from the higher dynamic range camera is able to retrieve more detail in the background. Though I kind of like how the lower DR image is blown out.
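This isn't necessarily how Carl dithered his images; it's a generic random-dither sketch in Python with made-up values. Adding about half a code value of noise before 2-bit quantization lets the average of many pixels land on tones between the four levels, which plain rounding can't do.

```python
import random


def quantize_2bit(value: float, dither: bool, rng: random.Random) -> int:
    """Quantize a 0.0-1.0 value to a 2-bit code (0..3), optionally adding +/-0.5 LSB of noise first."""
    scaled = value * 3  # 3 = 2**2 - 1, the top 2-bit code
    if dither:
        scaled += rng.uniform(-0.5, 0.5)
    return min(max(round(scaled), 0), 3)


rng = random.Random(42)
gray = 0.4  # a mid-gray that falls between two 2-bit levels
pixels = 100_000

plain = [quantize_2bit(gray, False, rng) for _ in range(pixels)]
dithered = [quantize_2bit(gray, True, rng) for _ in range(pixels)]

# Averaging many pixels (roughly what the eye or a blur does) recovers the tone only with dither.
print("without dither:", sum(plain) / pixels / 3)
print("with dither:   ", sum(dithered) / pixels / 3)
```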

Carl, do you know how many stops of dynamic range are in the original image? Are you able to add the chroma back into the dithered images? I'm just curious how they look in color.

-

Any joy on images? Are previous links still unavailable?

My server provider is trying to argue it's due to a firewall on the client side, but what firewall would deny even a single JPEG, especially if it otherwise allows JPEGs from elsewhere? So I've tasked them with finding the real reason rather than some made-up one.

Perhaps it's some secret nationwide firewall being tested in the States, delaying some connections until certain criteria are met (just to get into a conspiracy theory).

C

Yes, it's working now.

... they know you're on to them.

-

Clicking on the links didn't work for me either :(

-

Alas, Carl, ever since you posted Mr. McDowell with eyes wide open, I have been unable to view any of your attachments.

-

It will be the evolution of display technology rather than capture technology which determines choice of target bit depth and how many bits one might then want to use in the ADC. The DR of the sensor is not in any way shaped by this. Ideally one wants as much DR as possible, regardless of the number of bits used to digitise such or the eventual display. And conversely one will want as many bits as possible to digitise that DR, if only to have room to retone it for whatever bit display we want to show the result on.

Well, I actually prefer some limit to DR. At least at this point in my career. Given a few locations I've worked in, it's very nice that parts of them roll off into black :-)

-

Here is where it would be great if someone from a camera manufacturer stepped in! My degree is in electrical engineering, so I have some experience with ADC and digital processing, but not a lot. Now I'm totally speculating, so I'd love to be corrected by someone with actual knowledge of the engineering (or if someone has access to designs I'd love to review them)...

I think you already hit on it in your previous post. Looking at the curves you supplied earlier, there's a suggestion that for the Alexa the ADC is capturing in 16-bit and then the curve is implemented in 10- (or 12-) bit through digital processing.
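To make that speculation concrete, here's a toy Python model. Everything in it is an assumption for illustration: a hypothetical 40,960-photon clip point, an ideal noiseless ADC, and a pure log curve spreading 14 stops over 10 bits. It is not ARRI's actual LogC curve or pipeline. It counts how many distinct 10-bit codes a given stop ends up with when you (a) digitize directly at 10-bit linear versus (b) digitize at 16-bit linear and apply the log curve digitally afterward.

```python
import math

FULL_WELL = 40_960  # hypothetical photon count at which the sensor clips


def adc(photons: float, bits: int) -> int:
    """Ideal, noiseless linear ADC: FULL_WELL maps to the top code; values are truncated."""
    top = 2 ** bits - 1
    return min(int(photons / FULL_WELL * top), top)


def log_10bit_from_16bit(code16: int, stops: float = 14.0) -> int:
    """Hypothetical pure-log curve (not LogC): spread `stops` stops below clip over 0..1023."""
    if code16 <= 0:
        return 0
    stops_below_clip = math.log2((2 ** 16 - 1) / code16)
    value = 1023 * (1 - stops_below_clip / stops)
    return max(0, min(1023, round(value)))


def direct_10bit_linear(photons: float) -> int:
    return adc(photons, 10)


def via_16bit_then_log(photons: float) -> int:
    return log_10bit_from_16bit(adc(photons, 16))


def distinct_codes_in_stop(stop: int, encode) -> int:
    """Count the different output codes used by one stop (stop 1 = just below clip)."""
    hi = FULL_WELL / 2 ** (stop - 1)
    lo = hi / 2
    samples = [lo + (hi - lo) * i / 5000 for i in range(5000)]
    return len({encode(p) for p in samples})


for stop in (1, 5, 8, 10):
    print(f"stop {stop:>2}: 10-bit linear ADC uses {distinct_codes_in_stop(stop, direct_10bit_linear):>3} codes, "
          f"16-bit ADC + log to 10-bit uses {distinct_codes_in_stop(stop, via_16bit_then_log):>3} codes")
```

In this toy model the 16-bit-then-log path gives the deep stops far more codes than a direct 10-bit linear ADC could, at the cost of far fewer codes in the brightest stop.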

I used Excel to generate the curve. If you have Excel, load values for X and Y in two columns, highlight the columns and select "Insert Chart". You'll be presented with different choices. You want a scatter plot (X vs. Y) with a line through it.

-

For some reason I can't edit my previous post anymore.

OK, so your example, Ivon, is 40,960 photons > 1023 as a start. Fair enough.

So, moving on:

40,960 photons mapped to 1023 (1024-1, max value in 10-bit)

20,480 to 511 (512-1...), because the sensor is linear: if you divide the photons by two, you also divide the value by two.

10,240 to 255 (256-1...)

5,120 to 127

2,560 to 63

1,280 to 31

640 to 15

320 to 7

160 to 3

80 to 1

40 to 1

20 to 1

10 to 1

5 to 1

So it started to "clip" in the blacks at 80 photons, which is 9 stops under 40,960 photons.

So theoretical DR = 9 if bit depth = 10, no matter how many "photons" are needed to make the sensor clip in the whites.

Hey Tom,

I think you got it in your last post, but to be clear: bit depth does not determine DR, but there is absolutely a link between bit depth and a good image. I don't think any of us have argued otherwise. I think Carl even said you could produce an image from a camera with 20 stops of DR at a bit depth of 1. It's an interesting image, but not a faithful one.

Your example shows a linear curve, where each value is halved from the previous one. Try a curve that doesn't do that, something like this:

40,960 > 1023

20,480 > 942

10,240 > 862

5,120 > 781

2,560 > 700

1,280 > 619

640 > 537

320 > 455

160 > 370

80 > 281

40 > 183

20 > 55

10 > 27

5 > 13
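For comparison with a linear encoding, here's a quick Python sketch that takes the table above as data and counts how many code values each stop gets. It only analyses the numbers listed; it isn't any camera's published curve.

```python
import math

# Photon count -> 10-bit code value, copied from the table above.
curve = [
    (40_960, 1023), (20_480, 942), (10_240, 862), (5_120, 781),
    (2_560, 700), (1_280, 619), (640, 537), (320, 455),
    (160, 370), (80, 281), (40, 183), (20, 55), (10, 27), (5, 13),
]

clip = curve[0][0]
for (p_hi, v_hi), (p_lo, v_lo) in zip(curve, curve[1:]):
    stop = math.log2(clip / p_lo)  # how many stops below clip the lower end of this stop sits
    print(f"stop {stop:4.0f} ({p_lo:>6} to {p_hi:>6} photons): {v_hi - v_lo:>3} code values")
```

The top ten stops each get roughly 80 to 100 code values, instead of the 512, 256, 128... you get when each value is halved.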

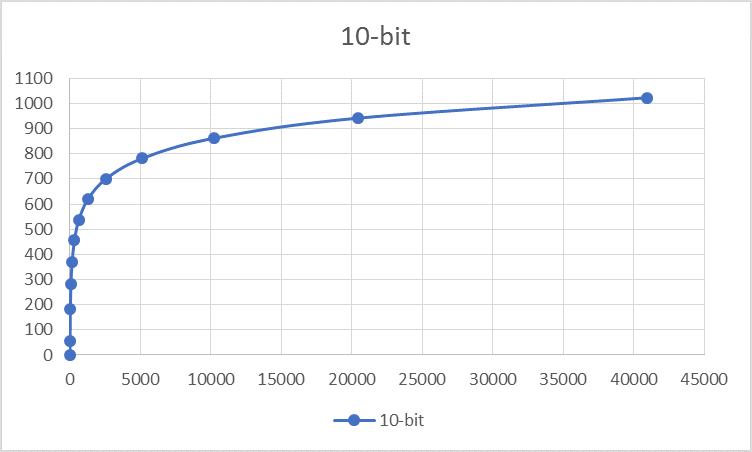

That's a curve that looks like this:

-

So I agree with most of what you said in this thread... except for point 1): how could DR possibly be greater than the bit depth?

We both seem to agree on how the light information is then stored in different values.

So, going back to my "1B" graph (the one that looks like a 2^x curve):

Say the sensor stores data in 10 bits.

Let's say its clipping point arrives when it receives 1023 photons.

1023 photons => mapped to the 1023 value (or 1111111111 in binary; I don't see how speaking in base 10 or base 2 would change anything)

1022 photons => value 1022

...

512 photons => value 512. One stop captured so far. OK, interesting info: every camera sensor has half of its values taken by the brightest stop of light, a stop that hardly anyone uses (except ETTR geeks like me) for fear of blown-out highlights.

256 photons => value 256. Two stops captured so far.

128 => 128 => 3 stops

64 => 64 => 4 stops

32 => 32 => 5 stops. As we progress, what we might call "low-light stops" get fewer and fewer gray-scale values to themselves. That's one reason why underexposing is a bad idea.

16 => 16 => 6 stops. OK, only 16 values; at this point we enter the zone of "probably unusable stops" (unless you're going for an image with deep blacks and no detail).

8 => 8 => 7 stops

4 => 4 => 8 stops. This is about where I'd say the practical DR stops end, as the number of gray-scale values per stop becomes ridiculous.

2 => 2 => 9 stops (theoretical stops, not anywhere near practical stops)

1 => 1 => 10 stops

Then everything below will be mapped to the 0 value, and even the theoretical DR stops there.

That's why I maintain that DR < bit depth.
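Tom's tally can be checked with a short Python sketch. It assumes an ideal, noiseless linear encoding whose clip point lands on the top code, and simply counts how many code values fall in each stop below clip:

```python
def linear_codes_per_stop(bits: int) -> list[int]:
    """Code values per stop for a linear encoding whose clip point is the top code.

    Stop 1 (brightest) spans codes [2**(bits-1), 2**bits), stop 2 spans half that, and so on.
    """
    top = 2 ** bits
    counts = []
    while top > 1:
        counts.append(top - top // 2)  # codes in [top // 2, top)
        top //= 2
    return counts


for stop, count in enumerate(linear_codes_per_stop(10), start=1):
    print(f"stop {stop:>2} below clip: {count:>3} code values")
```

For 10 bits this gives 512, 256, 128, ... down to 1, matching the list above; the ten stops together use codes 1 through 1023, and code 0 catches everything darker.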

And then what a log function will do (with some tweaking) is:

1023 photons => value 1023

512 => 922

256 => 820

128 => 718

64 => 616

32 => 514

16 => 412

8 => 310

4 => 208

2 => 106

1 => 4

So we LOSE gray-scale values in the highlights (the last stop before clipping shrinks from 512 values to 102), while the shadows are "upscaled" (as one would upscale from 720p to 1080p).
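The log table above is consistent with a simple formula, value ≈ 4 + 102 × log2(photons), clipped at 1023. That formula is a fit to the numbers listed, not a published camera curve. A Python sketch that reproduces the table and the highlight/shadow trade-off:

```python
import math


def fitted_log(photons: float) -> int:
    """Pure log mapping fitted to the table above: ~102 code values per stop, clipped to 10 bits."""
    if photons < 1:
        return 0
    return min(1023, round(4 + 102 * math.log2(photons)))


table = {1023: 1023, 512: 922, 256: 820, 128: 718, 64: 616, 32: 514,
         16: 412, 8: 310, 4: 208, 2: 106, 1: 4}
for photons, expected in table.items():
    assert fitted_log(photons) == expected, (photons, fitted_log(photons), expected)

# The brightest stop (512 -> 1023 photons) now spans 101 codes instead of 512,
# while the bottom stop (1 -> 2 photons) gets 102 codes instead of 1.
print("top stop:", fitted_log(1023) - fitted_log(512), "codes")
print("bottom stop:", fitted_log(2) - fitted_log(1), "codes")
```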

As we can see in my 2B graph from the earlier post, Arri (and the others) don't go for the straight line (like in my example) but instead add a roll-off (I don't remember the word) to the shadows (the stops furthest from the clipping point). That's probably because a stop that was captured with a ridiculous 2 or 4 gray-scale values (like the 9th and 8th stops in my example) looks awful when upscaled to 102 values.

Plus, they don't map their "1023 photons" to the max value, which gives them different mapping possibilities (creating the ISO/EI option in camera).

Hi Tom,

Bit depth will absolutely limit the usability of images as DR increases, so yes, usable DR < bit depth. However, it doesn't limit the sensor's ability to see a wide dynamic range; it simply limits how finely you can slice the DR up. 20 stops of range can be chopped up into 1024 slices, or 3 stops of range can be chopped into 1024 slices. One image will look terrible, the other acceptable. If I read your analysis correctly, you are defining the limits of what acceptable slices might be, not range. To use your example, there's no reason why you couldn't map 40,960 photons > 1023, 17,815 photons > 512, 5,017 photons > 256 ... 20 photons > 1, but the image may not look right. In a camera with 10-bit output, they don't stop counting at 1023; they set the white point at 1023 and the black point at 0 and map the intervals in between. That DR might be 8 stops; it might be 12 stops. The bit depth defines the intervals.
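A minimal sketch of that last point, assuming a hypothetical pure-log encoder (no camera's actual curve): the black and white points always land on 0 and 1023, and the DR only changes how wide each slice is, measured in stops.

```python
import math


def log_encode(photons: float, black: float, white: float, bits: int = 10) -> int:
    """Hypothetical pure-log encoder: black point -> 0, white point -> top code.

    Whatever the range between black and white, it is cut into 2**bits - 1
    equal slices in stops (log2 of the photon count).
    """
    top = 2 ** bits - 1
    position = math.log2(photons / black) / math.log2(white / black)
    return max(0, min(top, round(position * top)))


def stops_per_code(dr_stops: float, bits: int = 10) -> float:
    """Width of one code value, in stops, when dr_stops are spread over the code range."""
    return dr_stops / (2 ** bits - 1)


white = 40_960
for dr in (3, 8, 12, 20):
    black = white / 2 ** dr
    print(f"{dr:>2} stops: black -> {log_encode(black, black, white)}, "
          f"white -> {log_encode(white, black, white)}, "
          f"each code = {stops_per_code(dr):.4f} stops")
```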

The OP was asking (two years ago!) how high DR was being fit into limited bit depth. The answer is log curves. At some point as DR increases, even log curves won't produce acceptable images if bit depth stays fixed, so manufacturers will be forced to increase bit depth.

My question for the experienced cinematographers: do we really need to be chasing higher and higher DR? Are you generally using all the DR your camera permits now when you shoot? I recently worked with a cinematographer who said, "If I can't see into the shadows, I throw light in there." Isn't that our job, to produce a pleasing image within a given DR?

-

Hey Carl, I can't see the pics.

-

Daytime Exteriors - Sun Placement (in Lighting for Film & Video)

Guy, thank you for your very enlightening explanation. Are you able to share any production stills or frames from the finished film?