Tripurari Singh

Basic Member-

Posts

17 -

Profile Information

-

Occupation

Other

-

Location

Washington, DC

-

Color filters are typically deposited during manufacturing, but it's usually done at a separate facility from the one that produces the silicon sensor. It's a simple and inexpensive process. Our partner companies have handled this for us in the past. However, this time we're faced with the challenge of using new color filter materials. We've spoken with a leading manufacturer of color filters, and they don't anticipate any technical difficulties in producing the filters we need. Of course, they'll need a business case before proceeding, and that's what I'm currently working on. Our specialty is in image processing, including the critical demosaicking process. We've developed and tested this part through simulations. Because processing has been the bottleneck in the past, we believe we've de-risked the LMS design. The rest should mostly be straightforward engineering work.

-

LMS does have better sensitivity and dynamic range, which is why our eyes evolved that way. While camera vendors work hard to sense colors as our eyes do, I do not think evolution paid a high price to achieve our particular color perception. Had our eyes sensed colors the way today's cameras do, we would have been just as successful as a species as we are today. Cameras do not exhibit more metamerism than our eyes, just different metamerism. Evolution picked the LMS design for its higher SNR and dynamic range.

-

Thank you for your kind words. I have a concurrent thread running in Reddit's cinematography community where people are dismissing the importance of color accuracy. We indeed do not have a camera yet, and I am trying to figure out which markets to address. We picked the old Nikon D100 simply because its simulation model was readily available for ISETCam, the standard research software for modeling cameras. We compared the D100's quantum efficiency curves with those of a few modern CMOS sensors to ensure they were roughly similar.

-

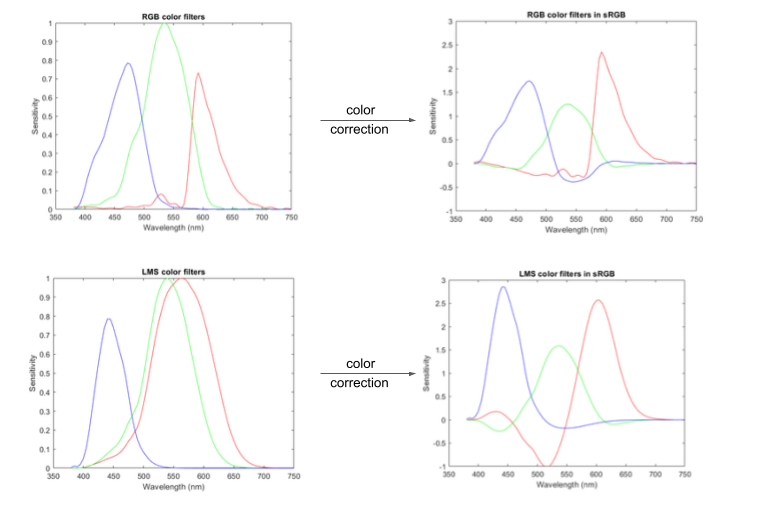

Hi Phil, You have made a couple of astute observations. It is indeed accurate that LMS filters have broad spectral sensitivities similar to the retinal cones, but their spectral sensitivities become narrow after color correction. RGB filters undergo a similar transformation, but to a lesser extent. I have attached a set of graphs that illustrate this transformation. Your observation regarding blue-purple confusion is also correct. Standard RGB sensors often struggle to accurately sense blue, unlike the LMS sensor, which closely resembles our eyes. The primary issue with existing cameras is their image processing pipelines, which cannot apply strong color correction without amplifying noise significantly. As a result, color filter designers face a tradeoff between color accuracy and SNR. However, our research has demonstrated that this is a false dichotomy.
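The tradeoff that a conventional pipeline faces can be illustrated with a small calculation. This is only a sketch: it assumes independent, equal-variance noise in the three raw channels, and the `MILD` and `STRONG` matrices are hypothetical numbers chosen for illustration, not measured data for any real RGB or LMS sensor. Under that assumption, a row of a 3x3 color correction matrix scales the noise standard deviation by the square root of the sum of its squared coefficients, so large positive and negative coefficients amplify noise.

```python
import math

def noise_gain(matrix):
    """Per-output-channel noise gain of a 3x3 color correction matrix.

    Assumes independent, equal-variance noise in the three input channels,
    so each output's noise std scales by the Euclidean norm of its row.
    """
    return [math.sqrt(sum(c * c for c in row)) for row in matrix]

# No correction at all: noise passes through unchanged.
IDENTITY = [[1.0, 0.0, 0.0],
            [0.0, 1.0, 0.0],
            [0.0, 0.0, 1.0]]

# Hypothetical mild correction, as might suit narrow RGB-like filters.
MILD = [[1.5, -0.4, -0.1],
        [-0.3, 1.5, -0.2],
        [0.0, -0.5, 1.5]]

# Hypothetical strong correction, as might suit broad, overlapping filters.
STRONG = [[5.0, -4.5, 0.5],
          [-1.5, 3.0, -0.5],
          [0.1, -0.6, 1.5]]

for name, m in (("identity", IDENTITY), ("mild", MILD), ("strong", STRONG)):
    gains = ", ".join(f"{g:.2f}" for g in noise_gain(m))
    print(f"{name:8s} noise gain per output channel: {gains}")
```

Every row of both hypothetical matrices sums to 1, so neutral grays are preserved; only the noise behavior differs. The calculation covers plain per-pixel matrixing only and says nothing about more sophisticated processing.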

-

Here's the motivation for our tech, if you don't want to click through to our web page: RGB filters were first designed decades ago and have only been fine-tuned since. Back then we did not have powerful computers, or the image processing understanding, to mimic our eyes. The main problem in directly sensing accurate reds is that the required spectral response is negative over part of the spectrum. No physical filter can have a negative response, so the only way to achieve one is via color correction. Sensing LMS and then converting to RGB gives you the correct spectral response, but would have resulted in high noise with the simple processing available decades ago, when the RGB camera architecture was designed. Modern computers and our algorithms make the LMS camera possible.

-

I am one of the founders of a startup that has developed the LMS camera. Unlike standard RGB cameras, the LMS camera mimics the spectral sensitivities of the Long, Medium and Short (LMS) cones of the retina, allowing it to capture accurate colors in any lighting, including mixed lighting. Additionally, it has a little over 1 stop dynamic range advantage and a little under 1 stop sensitivity/ISO advantage over the standard RGB Bayer cameras. Our team recently presented a paper on the LMS camera at the Electronic Imaging 2023 conference. You can find more information about our technology and the paper here. As the LMS camera has several potential applications, we are keen to gauge the interest of the cinematography community to see if this is an application we should target first. Please take a moment to check out our technology and let us know your thoughts.

-

Ha! You have quickly come to the crux of the matter. A system that relies only on filtration and modulation/demodulation will achieve 3K resolution only if the high frequencies of the color difference signals (G-R, G-B) decay rapidly. Such a system will exhibit cross-color and cross-luminance artifacts on saturated images rather than aliasing. These can be avoided using an adaptive system.

If you read that paper carefully, you will find that the color difference signals either:

1. have multiple copies, and only parts of one copy are subjected to crosstalk, depending on local features (i.e., whether there is a horizontal or vertical edge in the neighborhood), or
2. are centered about (pi, pi). This center is diagonally across from the luminance center (0,0). The "distance" between the two centers is sqrt(2) units, which is adequate separation for a non-astigmatic image.

2 is not a problem, and 1 can be tackled (with varying degrees of success) by adaptive systems.

The bottom line is that the Bayer pattern is a devilishly clever system that is still not fully understood. I doubt the inventor himself appreciated the cleverness of his invention. I have yet to see an upper bound on the resolution that can be extracted from it, and as demosaicing algorithms improve, more REAL picture information is being extracted.

Tripurari

PS: Thanks for your wonderful question

-

You don't need to account for the gaps between R, B pixels when designing the OLPF. See E. Dubois, “Frequency-domain methods for demosaicking of Bayer-sampled color images,” IEEE Signal Processing Letters, vol. 12, pp. 847-850, Dec. 2005. doi: 10.1109/LSP.2005.859503 for the spectrum of a Bayer image. Your OLPF just needs to keep the luma and chroma separate. Tripurari
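As a rough illustration of that spectrum, the sketch below mosaics a flat color patch and evaluates its DFT at a few frequencies. It assumes an RGGB layout (red at even/even pixels), which may differ from the layout used in the paper, and the function names are made up for this example. For a flat patch, all the energy sits at four places: luma at (0,0), one chroma term at (pi,pi), and a second chroma term at (pi,0) and (0,pi).

```python
import math

def bayer_sample(x, y, rgb):
    """Value an RGGB Bayer mosaic records at pixel (x, y) for a flat color."""
    r, g, b = rgb
    if x % 2 == 0 and y % 2 == 0:
        return r
    if x % 2 == 1 and y % 2 == 1:
        return b
    return g

def dft_coefficient(rgb, wx, wy, n=8):
    """Normalized DFT of the n x n mosaic at (wx, wy) radians per pixel."""
    total = 0j
    for y in range(n):
        for x in range(n):
            phase = -(wx * x + wy * y)
            total += bayer_sample(x, y, rgb) * complex(math.cos(phase),
                                                       math.sin(phase))
    return total / (n * n)

def bayer_carriers(rgb, n=8):
    """Amplitudes of the luma term and the chroma carriers of the mosaic."""
    pi = math.pi
    return {
        "luma": dft_coefficient(rgb, 0.0, 0.0, n).real,  # (R + 2G + B) / 4
        "c1": dft_coefficient(rgb, pi, pi, n).real,      # (R - 2G + B) / 4
        "c2_h": dft_coefficient(rgb, pi, 0.0, n).real,   # (R - B) / 4
        "c2_v": dft_coefficient(rgb, 0.0, pi, n).real,   # (R - B) / 4
    }

for name, value in bayer_carriers((0.9, 0.5, 0.3)).items():
    print(f"{name:5s} {value:+.3f}")
```

A neutral gray patch gives zero for every chroma carrier, and for a flat patch the spectrum is empty away from these four frequencies. In a real image each term spreads into a band around its center, and the OLPF and demosaicking filters have to keep those bands from overlapping.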

-

I don't see why you are worrying about the Nyquist theorem at all. The Nyquist-Shannon sampling theorem is just that: a sampling theorem. You are trying to relate the DISCRETE signal from the sensor to the DISCRETE signal of the display without any resampling.

Here's an alternate argument. The Nyquist theorem has two variants:

1. sampling a continuous signal to obtain a discrete signal
2. resampling a discrete signal to obtain another discrete signal

You can't be talking about 1, because a continuous signal is measured in cycles (or lines), not pixels. Sampling a continuous signal of 4K PIXELS is a meaningless statement. 2 is also ruled out because no one is forcing you to resample.

A third way of looking at it is that the sampling theorem isn't merely about sampling; rather, it places a fundamental limit on how much information a discrete signal can hold. The amount of information a 4K-sample signal can hold is the same at the sensor as it is at the display. There is no way to stuff more information into 4K at the display by using higher-resolution sensors.

(In practice this is not strictly true because of non-ideal LPFs, hence the popularity of oversampling.)
(There is also the issue of the reconstruction filtering, which is usually left to the human visual system.)

-Tripurari

-

Fair enough, though I'd put the figure closer to 3K than 2K, assuming that the color difference signals have roughly half the frequency bandwidth of luminance or any of the primary colors. I don't know what kind of software codec RED is running, but it is certainly possible to apply the necessary filters in reasonable time. You can do it really quickly if you use the graphics card. The big advantage of a RAW workflow is that you can do all of this offline. As for the viewfinder and HD-SDI, you can get away with lower-order filters since they are at half resolution. Again, I don't know what RED is doing, but real-time processing using FPGAs is not impossible. -Tripurari

-

Phil's arguments seem "straightforward" because of his silent assumption that data and information are the same thing. -Tripurari

-

Phil, I wouldn't be so alarmed over this situation, for the simple reason that no one is likely to get something other than what they expect. Technically savvy people would know about the Bayer issue, while the technically disinclined will expect RED's picture to be similar to a DSLR/Digital Still Camera with similar pixel count. All thanks to aggressive marketing by digital still camera manufacturers. I cannot imagine a realistic situation in which someone would feel cheated. Tripurari

-

When the output resolution is also measured in pixels and not cycles? No! If the desired output resolution is 640x480 you only need a sensor with 640x480, ignoring practical issues such as a non-ideal Optical Low Pass Filter. Tripurari

-

There's a difference between data and information. The Bayer filter throws out 2/3 of the data, but how much information does it lose? Image data has a lot of redundancy, as evidenced by its high compressibility. Coming back to sensors, square lattices have greater diagonal resolution than vertical/horizontal resolution by a factor of sqrt(2). If you are interested in a non-astigmatic image (a reasonable goal, especially since you seem to be interested in worst-case analysis), this extra data capacity can be used to convey color information. Starting with a non-astigmatic image, you can use a Color Filter Array (CFA) to modulate chroma info into the (empty) diagonal high frequencies. The demosaic algorithm can easily heterodyne this chroma info back to baseband. If you work out the details, you will find that more than 2K worth of information can be retrieved. The gist of this long-drawn-out argument is that the sensor actually retains a lot of information, and the output is not mostly synthetic data invented by software. Tripurari
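The heterodyning step can be sketched on a toy case. The code below is not a real demosaicking algorithm: it assumes an RGGB layout and a flat color patch, uses a 2x2 box average as a stand-in for a proper low-pass filter, and all function names are invented for the example. Multiplying the mosaic by (-1)^(x+y) or by (-1)^x shifts a chroma carrier down to baseband, and the low-pass filter then isolates it.

```python
def mosaic(rgb, n=4):
    """n x n RGGB Bayer mosaic of a flat color: one value per pixel."""
    r, g, b = rgb
    rows = []
    for y in range(n):
        row = []
        for x in range(n):
            if x % 2 == 0 and y % 2 == 0:
                row.append(r)
            elif x % 2 == 1 and y % 2 == 1:
                row.append(b)
            else:
                row.append(g)
        rows.append(row)
    return rows

def box2x2(img, x, y):
    """Crude low-pass filter: mean of the 2x2 block with top-left at (x, y)."""
    return (img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4

def demodulate(cfa, x, y):
    """Recover (R, G, B) near (x, y) by heterodyning chroma to baseband."""
    n = len(cfa)
    # Multiplying by (-1)^(x+y) moves the (pi, pi) carrier to (0, 0).
    diag = [[cfa[j][i] * (-1) ** (i + j) for i in range(n)] for j in range(n)]
    # Multiplying by (-1)^x moves the (pi, 0) carrier to (0, 0).
    horiz = [[cfa[j][i] * (-1) ** i for i in range(n)] for j in range(n)]
    luma = box2x2(cfa, x, y)   # (R + 2G + B) / 4
    c1 = box2x2(diag, x, y)    # (R - 2G + B) / 4
    c2 = box2x2(horiz, x, y)   # (R - B) / 4
    return (luma + c1 + 2 * c2, luma - c1, luma + c1 - 2 * c2)

cfa = mosaic((0.9, 0.5, 0.3))
print(demodulate(cfa, 1, 1))
```

On a flat patch the recovery is exact at every window position, even though each pixel recorded only one of the three colors. Real images need much better filters, and adaptive ones, since the luma and chroma bands can overlap.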