-

Digital cameras can do some amazing things nowadays, considering where they were just five years ago. One thing I sometimes struggle to understand is how these newer cameras with 13+ stops of dynamic range actually quantize that information in the camera body.

One thing we know from linear A-to-D quantization is that dynamic range is a function of the converter's bit depth. A 14-bit ADC can store, at best (ignoring noise for the moment), 14 stops of dynamic range. However, once we introduce noise (sensor, charge transfer, ADC, etc.) and linearity errors, there really aren't 14 meaningful stops. I did a lot of research on pipeline ADCs (which I believe are the type actually used), and the best one I could find, as measured by ENOB (effective number of bits), was the 16-bit ADS5560 from Texas Instruments; it measured an impressive 13.5 bits. If most modern cameras, the Alexa especially, are using 14-bit ADCs, how are they deriving 14 stops of dynamic range?

I read that the Alexa has a dual gain architecture, but how do you simultaneously apply different gain settings to an incoming voltage without distorting the signal? A pretty good read on this technology can be found in this Andor Technology Learning Academy article.

Call me a little skeptical if you will. Not to pick on RED, but for the longest time they advertised the Mysterium-X sensor as having 13.5 stops (by their own testing). Of course, many of the first sensors went into RED One bodies, which only have 12-bit ADCs. Given that, how were they measuring 13.5 stops in the real world?

Now, with respect to linear-to-log coding, some cameras opt for this type of conversion before storing the data on memory cards; the Alexa and cameras that use Cineform RAW come to mind.
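The dual gain question can be made concrete with a toy model. The sketch below assumes the same pixel signal is split into two amplifier paths (high gain and low gain), each digitized by its own ideal, noiseless 14-bit ADC, and that the high-gain sample is used until it clips. The names `quantize`, `merge_dual_gain`, `ideal_stops` and the 16x gain ratio are hypothetical, and this is not ARRI's published algorithm. Because noise is ignored, the stop counts are upper bounds, not measured dynamic range.

```python
import math

ADC_BITS = 14                  # assumed bit depth of each readout path
ADC_MAX = 2**ADC_BITS - 1      # full-scale code (16383 for 14 bits)


def quantize(value: float) -> int:
    """Ideal, noiseless linear ADC: round to the nearest code and clip at full scale."""
    return max(0, min(ADC_MAX, round(value)))


def merge_dual_gain(signal: float, gain_ratio: float) -> float:
    """Digitize one pixel through two gain paths and merge them (toy model).

    `signal` is expressed in low-gain ADC codes (a hypothetical unit);
    the high-gain path sees the same signal amplified by `gain_ratio`.
    """
    high = quantize(signal * gain_ratio)   # fine steps, clips early
    low = quantize(signal)                 # coarse steps, clips late
    if high < ADC_MAX:
        # High-gain sample is valid: rescale it back onto the low-gain scale.
        return high / gain_ratio
    # High-gain path is saturated: fall back to the low-gain sample.
    return float(low)


def ideal_stops(max_value: float, smallest_step: float) -> float:
    """Noiseless dynamic range in stops: log2 of full scale over the smallest step."""
    return math.log2(max_value / smallest_step)


if __name__ == "__main__":
    print(ideal_stops(ADC_MAX, 1.0))        # single 14-bit path
    print(ideal_stops(ADC_MAX, 1.0 / 16))   # with a 16x high-gain path merged in
    print(merge_dual_gain(0.25, 16), quantize(0.25))
```

In this model a signal of 0.25 low-gain codes rounds to 0 on the low-gain path alone but survives as 0.25 through the high-gain path, and the ideal range grows from about 14 to about 18 stops. A hard switch at the clip point could leave a visible seam, so a real design may blend the paths over a transition zone, and real read noise would eat into these figures.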
If logarithmic coding means that each stop gets an equal number of code values, aren't the camera processors (FPGA/ASIC) merely interpolating data like crazy in the low end? Let's compare two 14-stop cameras, one that stores data linearly and one that stores it logarithmically.

In the 14-bit linear camera, the brightest stop is represented by 8192 code values (8192-16383), the next brightest by 4096 code values (4096-8191), and so on. The darkest stop (13 stops below the brightest) is represented by only 2 values (0 or 1). That's not a lot of information to work with. Meanwhile, if we assume the other camera applies a 14-bit-to-10-bit linear-to-log transform, each of its 14 stops gets ~73 code values (2^10 = 1024, divided equally by 14). As you can see, the brighter stops are coded more efficiently because we don't need ~8000 values to see a difference, but the low end gets an excess of code values when there weren't very many to begin with.

So I guess my question is: is it better to do straight linear A-to-D coding off the sensor and do the logarithmic operations later, or is it better to do the logarithmic conversion in camera to save bandwidth when recording to memory cards? Panavision's solution, Panalog, shows the relationship between linear light values and logarithmic values after conversion in this graph:

On a slightly related note, why do digital camera ADCs have a linear response in the first place? Why can't someone engineer one with a logarithmic response to light, like film? The closest thing I've read about is the hybrid LINLOG Technology at Photon Focus, which seems like a rather hackneyed approach.

If any engineers want to hop in here, I'd be much obliged, especially if your name is Alan Lasky, Phil Rhodes, or John Sprung, or if you're anyone else with a history of technical knowledge on display here. Thanks.
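The code-value arithmetic in the post can be checked with a short sketch. It assumes an ideal linear 14-bit ADC and a toy, pure-log 14-bit-to-10-bit curve that gives every stop an equal share of the 1023 output steps; real camera curves (Panalog, the Alexa's log encoding) are not assumed to match it, and the function names are hypothetical. Counting how many distinct log codes each stop actually produces shows what a one-to-one mapping can and cannot do in the shadows.

```python
import math


def linear_codes_per_stop(bits: int, stops: int) -> list[int]:
    """Count integer ADC codes per stop for an ideal linear converter.

    Stop 0 is the brightest, [2**(bits-1), 2**bits - 1]; each lower stop
    covers half the previous range. The darkest stop also absorbs every
    code below it, including 0.
    """
    counts = []
    for k in range(stops):
        hi = 2 ** (bits - k) - 1
        lo = 0 if k == stops - 1 else 2 ** (bits - k - 1)
        counts.append(hi - lo + 1)
    return counts


def log_encode(v: int, in_bits: int = 14, out_bits: int = 10, stops: int = 14) -> int:
    """Toy pure-log curve: equal output steps per stop below full scale."""
    v = max(v, 1)                                  # log2(0) is undefined; clamp to 1
    x = math.log2(v / 2 ** in_bits) / stops + 1.0  # 0.0 at the floor, ~1.0 at full scale
    top = 2 ** out_bits - 1
    return max(0, min(top, round(x * top)))


def distinct_log_codes_per_stop(bits: int = 14, stops: int = 14) -> list[int]:
    """How many different log codes each linear stop actually produces."""
    result = []
    for k in range(stops):
        hi = 2 ** (bits - k) - 1
        lo = 0 if k == stops - 1 else 2 ** (bits - k - 1)
        codes = {log_encode(v, in_bits=bits, stops=stops) for v in range(lo, hi + 1)}
        result.append(len(codes))
    return result


if __name__ == "__main__":
    print("linear codes per stop:", linear_codes_per_stop(14, 14))
    print("log steps per stop:", (2 ** 10 - 1) / 14)
    print("log codes used per stop:", distinct_log_codes_per_stop())
```

The linear counts reproduce the 8192, 4096, ..., 2 progression from the post, and 1023/14 gives the ~73 log steps per stop. In this toy model the brightest stop's 8192 linear codes collapse to 74 log codes, while the two darkest stops produce only 1 and 2 distinct log codes: the extra shadow codes are simply left unused, because a one-to-one mapping cannot create values the linear ADC never delivered.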

-

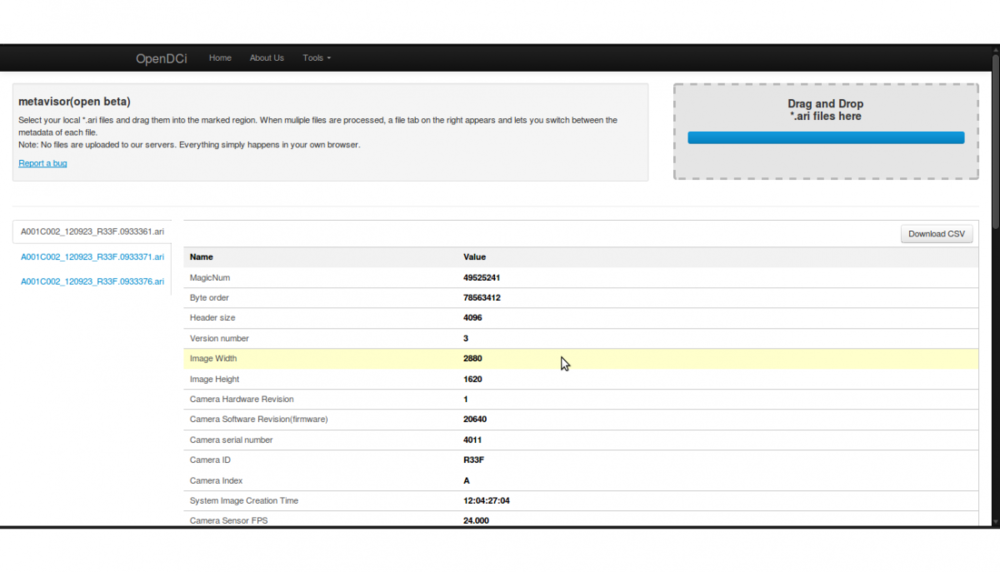

As a side project, we have created a little free tool called "metavisor" to directly view and extract metadata from Arri Raw files in your web browser with simple drag and drop. Link to metavisor: http://www.opendci.com/app/metavisor

I would love to get some feedback from the pros here:

What do you think about the tool?
What would you like to see improved?
How important is viewing and exporting metadata to you?
Is the current tool provided by Arri (Meta Extract), which requires installation, too cumbersome to use?
What free web tool would you like to see for production / postproduction / VFX?

The tool is still in beta. We are also planning to extend the functionality to the other capture formats the Alexa supports, as well as to RED and Sony cameras, and to connect the VFX/lens data to our database and make it available to the digital workflow community.

Also, if you ever imagined a nice tool that everyone in our industry should be able to use for free, just drop us a line (metavisor@nablavfx.com). Usually the people with good ideas and interesting problems are industry professionals ;)

Big thanks to everyone giving feedback! We really appreciate your time.

Jatha

---

Announcing - metavisor - http://www.opendci.com/app/metavisor

Extract Arri Alexa Raw metadata with this simple free web tool! Quickly view the metadata of Arri Raw files (*.ari) online inside your browser, with simple drag and drop. Download the metadata in csv or json format.

-- Jathavan Sriram, Co-Founder, CTO nablavfx, R&D|3D|VFX. h: http://www.nablavfx.com m: contact@nablavfx.com Google+: https://plus.google.com/104702076022464846295 LinkedIn: http://www.linkedin.com/company/3165753

-

I'm a DP and lighting tech by trade, so forgive me for what seems to me like kind of a noob question. I'm trying to rough out a round trip (shot-edit-VFX-color) for a short trailer with some significant CGI work: basically trying to knit together the resources of 2-3 freelancers, keeping image integrity, maintaining a reasonable turnaround time, and all the while keeping us from pulling our hair out.

We'd be shooting Epic raw. I will have a post supervisor/co-editor, but I want to make sure I have a little more background before we sit down to do this for real. The post sup/editor is on Mac, and I'd likely be doing the rough assembly as an offline edit on my not-yet-built Windows machine with Adobe Premiere (or maybe even Lightworks, not that I need to make this more difficult by learning new software). I suppose the picture edit goes back to the post sup's machine to conform, and then from him to the animator for compositing. Or does it?

Our animator is on Windows 7. She models in Maya and composites in AE. While we'll be delivering in 1080p, I'm really pushing for an all-4K workflow if possible. Then (I think) it has to go back to the post supervisor's Mac Pro for final color and for the deliverables (a YouTube video and cDNG).

After speaking to the animator, I realized I don't know how to deliver footage to her for compositing. The post sup is pretty much a one-man editorial department for a small ad company, so he doesn't have to bridge a bunch of contractors with different filesystems or codecs in his day-to-day work.

A simple flowchart or list with the important bullet points would help, and I'll reveal my ignorance through follow-up questions as necessary. Sorry again if this is nonsense; I'm a lot better with things that have three wires and a halogen bulb.

Best,
Matt

-

Hello there, I am a freshman undergrad in film school and recently got the position of DIT and editor on an upcoming short. The short is a collaborative effort of one of our school clubs, and we managed to get together the funds to shoot with an Epic. During the shoot, which is coming up this spring, I am to apprentice with a professional DIT and learn the ropes, eventually taking over once I am comfortable with the procedures and so forth. I am also going to be grading and editing the RAW footage.

So before my apprenticeship begins, I would like to have a firm knowledge of what I am getting myself into. I was curious whether anyone had any articles on the DIT workflow and the responsibilities of the job. I am also looking for articles and info on grading RAW footage (which I have experience with in terms of stills) and on the RAW editing workflow. Despite going into this without any experience as a DIT, I am a very capable editor in Final Cut and Premiere, just to let you know I'm not entirely unqualified for the position.

Summary: I am looking for all information, advice, and articles on being a DIT on a RED Epic, and on grading and editing RAW footage. Thanks!