silvan schnelli

Basic Member

Posts: 41

Profile Information

Occupation: Student

Location: Switzerland


-

Why does the film scan DPX appear so contrasty and dark

silvan schnelli replied to silvan schnelli's topic in Post Production

@Robert Houllahan Thank you for the simple and quick reply. It is something I only realized later through trial and error. Nevertheless, the footage still looks slightly off for some reason, although changing from log to linear fixed the issue for other film footage I have downloaded.
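Since switching the interpretation from log to linear is what fixed the other scans, a minimal sketch of what that conversion does may help. DPX film scans are commonly stored as Cineon-style 10-bit printing-density log; the sketch below uses the conventional Cineon reference points (black at code value 95, white at 685, 0.002 density per code value, negative gamma 0.6). These constants are assumptions: a particular scan may have been made with different reference points.

```python
# Minimal sketch of the conventional Cineon log-to-linear conversion.
# The constants are the commonly quoted Cineon defaults and are assumptions
# here; a given scan may use other reference points.
REF_BLACK = 95          # 10-bit code value mapped to linear 0.0
REF_WHITE = 685         # 10-bit code value mapped to linear 1.0 (diffuse white)
DENSITY_PER_CV = 0.002  # printing density per code value
NEG_GAMMA = 0.6         # assumed negative gamma


def _relative_exposure(cv: float) -> float:
    """Relative exposure for a code value, before the black offset is removed."""
    return 10 ** ((cv - REF_WHITE) * DENSITY_PER_CV / NEG_GAMMA)


def cineon_to_linear(cv: float) -> float:
    """Map a 10-bit Cineon code value to linear light (black = 0, white = 1)."""
    black = _relative_exposure(REF_BLACK)
    return (_relative_exposure(cv) - black) / (1.0 - black)


if __name__ == "__main__":
    for cv in (95, 470, 685, 1023):
        print(cv, round(cineon_to_linear(cv), 4))
```

Under these assumptions code value 470 lands near 0.18 (mid grey) and values above 685 exceed 1.0, which is the highlight headroom a log scan carries. Viewing the code values directly, or feeding them to a LUT that expects a different encoding, shifts the apparent exposure and contrast.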

I downloaded Kodak 5213 35mm film scan DPX footage and ARRI XT footage from https://www.cinematography.net/Valvula/valvula-2014.html. My goal is to compare the footage and put it through a post pipeline that I am amending and tinkering with. However, when I loaded the two DPX files into Nuke, I noticed that the film scan is much darker and slightly more contrasty than the Alexa footage. I then applied an OCIOFileTransform to both: a LogC2-to-Rec.709 conversion for the Alexa and DaVinci's Kodak 2383 PFE for the film scan. The colours look great, but the film scan is much more underexposed. On the CML website both clips appear similarly exposed, but for me the film looks much more underexposed. What am I doing wrong or misinterpreting?

Attached: ARRI XT (16-bit) with classic LogC2 to Rec.709, and Kodak film with DaVinci 2383 PFE.

-

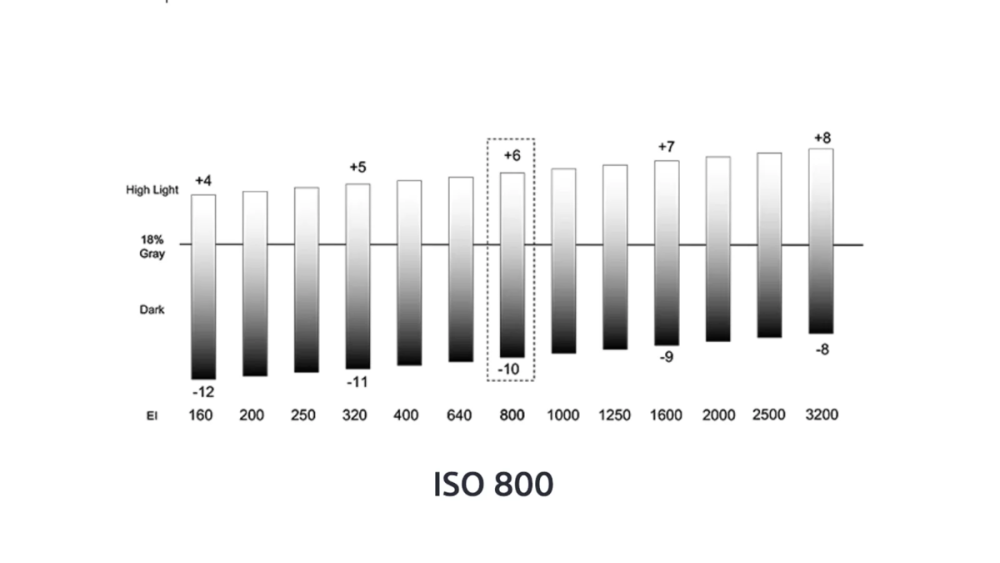

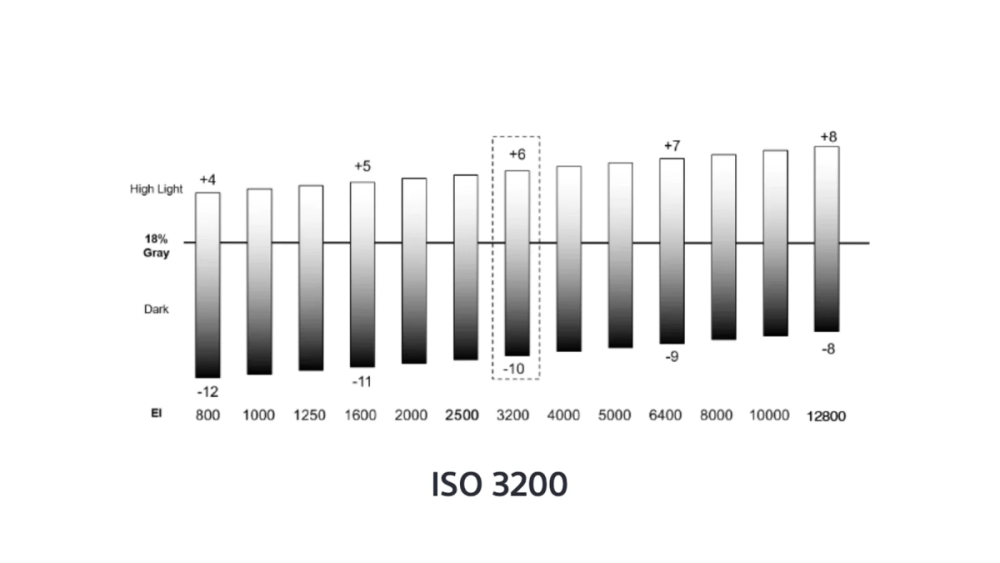

I don't understand why, say, the Sony Venice at EI 3200 on its native 3200 base would have less noise than at EI 3200 on its native 800 base. I have read the white paper https://www.photonstophotos.net/Aptina/DR-Pix_WhitePaper.pdf, but to my understanding the paper talks more about the benefit of increased dynamic range from adding a second capacitor in parallel. How is it possible for a camera's second native ISO to be more sensitive (higher conversion gain) and have better SNR with fewer incident photons, all while not making the lower ISO rating obsolete? I feel like this would also suggest that I need two separate light meter calibrations for the two ISO settings, if one setting converts x photons into an exposure level X and the other converts y photons into the same level X.

Attached: EI latitude representation of the Sony Venice 2.
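A toy model can make the trade-off concrete. Conversion gain is inversely proportional to the sense-node capacitance, so switching in the extra capacitor (low conversion gain) lets the pixel hold more electrons before clipping, while the smaller capacitance (high conversion gain) lowers the read noise referred back to electrons. The read-noise and full-well numbers below are hypothetical, not Venice specifications, and the model (shot noise plus read noise only) ignores dark current and other noise sources.

```python
import math
from dataclasses import dataclass


@dataclass
class GainMode:
    name: str
    read_noise_e: float  # read noise referred to electrons (hypothetical)
    full_well_e: float   # electrons before the signal clips (hypothetical)

    def snr(self, signal_e: float) -> float:
        """SNR for a signal in electrons: shot noise plus read noise, clipped at full well."""
        s = min(signal_e, self.full_well_e)
        return s / math.sqrt(s + self.read_noise_e ** 2)

    def dynamic_range_stops(self) -> float:
        """Engineering dynamic range: full well over read noise, in stops."""
        return math.log2(self.full_well_e / self.read_noise_e)


# Hypothetical numbers chosen only to illustrate the trade-off.
LOW_CG = GainMode("low conversion gain (lower base)", read_noise_e=6.0, full_well_e=40000.0)
HIGH_CG = GainMode("high conversion gain (higher base)", read_noise_e=2.0, full_well_e=10000.0)

if __name__ == "__main__":
    for mode in (LOW_CG, HIGH_CG):
        print(f"{mode.name}: SNR@50e- = {mode.snr(50):.2f}, "
              f"DR = {mode.dynamic_range_stops():.2f} stops, clips at {mode.full_well_e:.0f} e-")
```

In this sketch the same dim signal (50 electrons) has a better SNR in the high-gain mode because less read noise is added, but that mode clips two stops sooner (10,000 vs 40,000 electrons), which is why the lower base can still be the better choice when the highlights need the headroom.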

-

Recreating a partial window reflection effect

silvan schnelli replied to silvan schnelli's topic in General Discussion

@David Mullen ASC Thank you for the tips. I will definitely have to put this into practice.

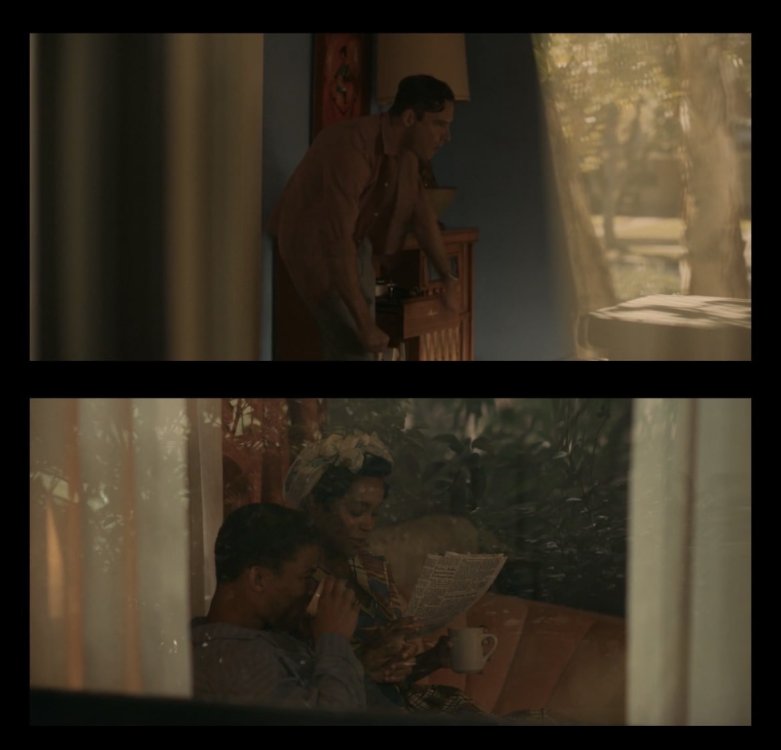

How would you recreate these two shots from Lessons in Chemistry, photographed by Jason Oldak? In the first one, the reflection of the outside seems to be present only where the curtain is. I'm guessing this is because the curtain blocks the light from the room that would otherwise pass out through the window, which lets the reflection stand out against the darker background. In the second one, the reflection looks like a superposition, which may simply be a matter of balancing the inside and outside lighting. However, I am curious about other thoughts, opinions, tips and experiences in recreating a frame like this. Thank you.
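The curtain explanation can be checked with a rough model: what the camera sees on the glass is the interior light transmitted through it plus the exterior scene reflected off it. The sketch assumes a single uncoated glass surface at normal incidence (Fresnel reflectance ((n-1)/(n+1))², about 4% for n = 1.5), ignores the second surface and oblique angles, and uses hypothetical luminance values.

```python
def fresnel_normal_reflectance(n: float = 1.5) -> float:
    """Reflectance of one uncoated surface at normal incidence (n is an assumed refractive index)."""
    return ((n - 1.0) / (n + 1.0)) ** 2


def reflection_share(interior_luminance: float, exterior_luminance: float, n: float = 1.5) -> float:
    """Fraction of the light seen on the glass that comes from the reflection.

    Single-surface approximation: transmitted = (1 - R) * interior,
    reflected = R * exterior. Luminances may be in any consistent unit.
    """
    r = fresnel_normal_reflectance(n)
    reflected = r * exterior_luminance
    transmitted = (1.0 - r) * interior_luminance
    return reflected / (reflected + transmitted)


if __name__ == "__main__":
    exterior = 1000.0  # hypothetical bright exterior behind the camera
    for label, interior in (("lit room", 200.0), ("behind the curtain", 5.0)):
        print(f"{label}: reflection is {reflection_share(interior, exterior):.0%} of what is seen")
```

With these numbers the reflection is a minor component over the lit room but dominates where the curtain darkens the interior, which matches the guess about the first shot; for the second shot, the same ratio is what the inside/outside lighting balance would be adjusting.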

-

I'll answer my own question in case anyone reading this also wants to know the answer. I am still not certain why Steve Yedlin calibrates his light meters (I'll have to re-read his post more carefully). However, from what I have read, ISO values differ slightly from one manufacturer to another. This is perhaps another reason why the term Exposure Index is used instead of ISO (besides the fact that a cinema camera's native ISO is fixed). So ISO 100 on a Sekonic light meter means something different from ISO 100 on an ARRI Alexa, and both could differ from what a Sony Venice treats as ISO 100. Hence, by calibrating our light meter, we can ensure that its EV calculations match the way the camera processes exposure. This type of calibration therefore doesn't alter the luminance or illuminance values obtained when measuring cd/m² (foot-lamberts) or lux (foot-candles).

Reference: Difference between Exposure Index (EI) and ISO, https://125px.com/docs/techpubs/kodak/cis185-1996_11.pdf
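The standard reflected-light exposure equation, N²/t = L·S/K (L the luminance in cd/m², S the ISO/EI, K the meter calibration constant), makes the point concrete. The sketch assumes a 180° shutter at 24 fps (t = 1/48 s) and a hypothetical luminance; K = 12.5 is a commonly quoted value and K = 14 is used only as an alternative for comparison. Changing K changes the suggested stop, never the measured luminance.

```python
import math


def f_number_for_luminance(luminance_cd_m2: float, iso: float, t_s: float, k: float) -> float:
    """Solve the reflected-light equation N^2 / t = L * S / K for the f-number N."""
    return math.sqrt(luminance_cd_m2 * iso * t_s / k)


def stops_between_constants(k_a: float, k_b: float) -> float:
    """Exposure difference (in stops) between meters calibrated with K_a and K_b."""
    return math.log2(k_b / k_a)


if __name__ == "__main__":
    L = 100.0       # measured luminance, cd/m^2 (hypothetical)
    iso = 800.0     # exposure index set on the meter
    t = 1.0 / 48.0  # 180-degree shutter at 24 fps
    for k in (12.5, 14.0):
        print(f"K = {k}: suggested f/{f_number_for_luminance(L, iso, t, k):.2f}")
    print(f"difference: {stops_between_constants(12.5, 14.0):.2f} stops")
```

For the same reading, the meter with the higher K suggests a slightly wider stop, about 1/6 stop more exposure.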

-

Camera Dynamic Range (DR) vs Display

silvan schnelli replied to silvan schnelli's topic in Camera Assistant / DIT & Gear

@Nicolas POISSON Thank you for the thorough answer. Yes, by "indirectly tied to bit depth" I meant that even with log encoding, for example, enough code values still need to be assigned to each stop to keep posterization minimal. The part about the DR definitions is also very interesting; I wasn't aware of the latter two, so I'll have to read more about them. I especially appreciate the last part of your response: would it be fair to say that, when the highlights are compressed, "the gradation in the luminance stops is just not represented faithfully" (to use the wording of my original question)?
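The "enough code values per stop" point can be illustrated by counting codes. In a linear encoding each stop down from clipping gets half the codes of the stop above it, whereas an idealised pure-log curve spreads them evenly. The 10-bit depth and 16-stop range are hypothetical, and real log curves (LogC, S-Log3, Cineon) are not pure logarithms and reserve codes for toe and headroom.

```python
import math


def linear_codes_per_stop(bits: int, stops: int) -> list[int]:
    """Integer code values per stop for a linear encoding, brightest stop first.

    Stop i (0 = brightest) covers codes in [total / 2^(i+1), total / 2^i).
    """
    total = 2 ** bits
    counts = []
    for i in range(stops):
        hi = total / 2 ** i
        lo = total / 2 ** (i + 1)
        counts.append(math.ceil(hi) - math.ceil(lo))  # integers in [lo, hi)
    return counts


def pure_log_codes_per_stop(bits: int, stops: int) -> float:
    """Code values per stop for an idealised pure-log encoding over `stops` stops."""
    return 2 ** bits / stops


if __name__ == "__main__":
    print("linear 10-bit:", linear_codes_per_stop(10, 16))
    print("pure log 10-bit over 16 stops:", pure_log_codes_per_stop(10, 16))
```

With linear coding the brightest stop takes half of all codes while the deepest shadow stops get a handful or none, which is why acquisition formats use log curves to distribute codes more evenly.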

Camera Dynamic Range (DR) vs Display

silvan schnelli posted a topic in Camera Assistant / DIT & Gear

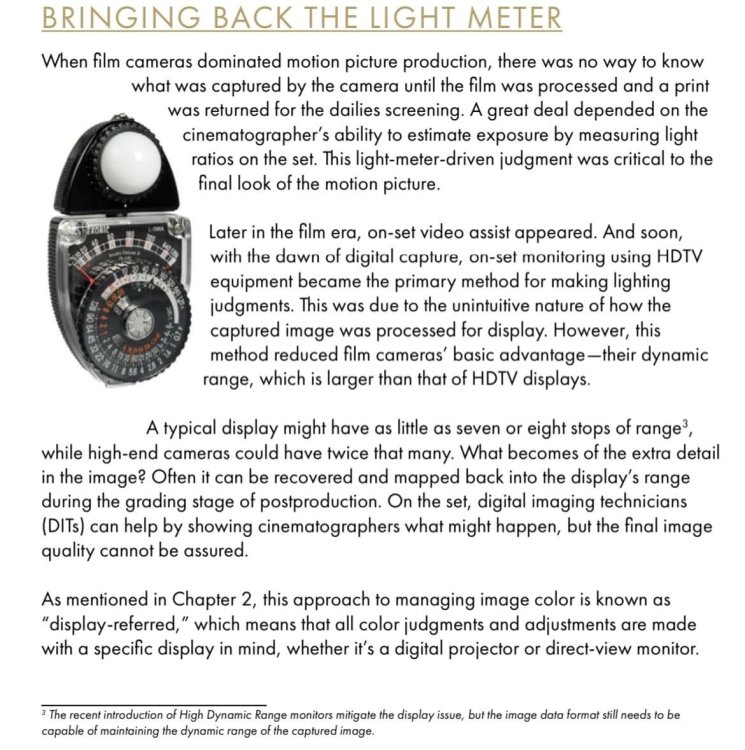

I recently read the ACES Primer, where one of the headings was "bringing back the light meter". My understanding was that scenes were often lit to suit the output device (display-referred), which bottlenecked the look of the film by not using the camera's full DR potential, especially (or at least) with respect to future exhibition. It made me think about a question I have had for a while regarding lighting a scene and placing the highlights. If the camera has 16 stops of DR but the displayed image (exhibition) will be in SDR with a lower bit depth (which to my understanding is indirectly tied to DR), does it make sense to place information in the upper and lower bounds of the camera's DR? What will happen to the highlight information in stops 14, 15 and 16 of the image if the exhibition format can only display 10 or even 8 stops of DR? Will it clip? Will the gradation in the stops' luminance just not be represented faithfully? Or does it make more sense to light a scene so that the information stays within those limited 8 to 10 stops? But then what is the point of, or what happens to, the information in the higher stops? (I am aware that important information is always best placed on the straight-line (gamma) portion of the characteristic curve.) I hope my question makes sense; I have yet to find an answer that clearly explains the reasoning, and I would greatly appreciate anyone willing to explain it to me. Thank you for your time.
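A simple way to picture the two outcomes asked about: map scene-linear values spaced one stop apart into a display range of 0 to 1, once with a hard clip and once with a soft shoulder. The shoulder is the simple Reinhard curve x / (1 + x), swapped in purely for illustration; it is not the ACES output transform or any camera's display LUT, and mid grey at 0.18 is an assumption.

```python
def hard_clip(x: float) -> float:
    """Display value with a hard clip at display white (1.0)."""
    return min(x, 1.0)


def reinhard(x: float) -> float:
    """Simple Reinhard shoulder: compresses highlights and never quite reaches 1.0."""
    return x / (1.0 + x)


MID_GREY = 0.18  # assumed scene-linear mid grey

if __name__ == "__main__":
    for stop in range(-2, 9):  # stops relative to mid grey
        x = MID_GREY * 2.0 ** stop
        print(f"{stop:+d} stops: clip {hard_clip(x):.3f}  shoulder {reinhard(x):.3f}")
```

With the hard clip, every stop above roughly +2.5 lands on 1.0 and all gradation there is lost; with the shoulder, the upper stops stay distinct but are squeezed into ever smaller display steps, which corresponds to the "not represented faithfully" case.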

I was attempting to re-read Steve Yedlin's #NerdyFilmTechStuff on light meter calibration, but I still have many questions that made it difficult for me to even understand what was being discussed. Perhaps these questions are addressed in the first version he published, but I can't seem to find it. My question is: why is he changing the calibration constants of his light meter, and how does this differ from the compensation settings (which I think he also changes)? I am quite certain I have misinterpreted many aspects of the text, but this is the first time I've heard of calibrating light meters. I am assuming that changing the calibration constant in the EV formula will result in different calculated/suggested camera settings, but shouldn't change the illuminance/luminance being measured. Yet I still don't know why one would, or, more appropriately phrased, should, do this. He does mention something about personal preference, so my best guess is that it might have something to do with that. If it is based on personal preference, my next question would be how one even figures out their preference. I've read that different manufacturers, or even different light meters, have different calibration constants, but I'm assuming all have the same goal of obtaining correct exposure from the calculated readings, so why change this calibration number? Thank you for taking the time to read this question, and apologies for its repetitive structure. Steve Yedlin's page that I am referring to: https://www.yedlin.net/NerdyFilmTechStuff/ExposureEquationsAndMeterCalibration.html
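On the constant-versus-compensation question, the exposure equation itself suggests why the two can look interchangeable: in N²/t = L·S/K, dialling in +x stops of compensation (asking for x stops more exposure) divides the suggested N² by 2^x, which is numerically the same as multiplying K by 2^x. The sketch checks that under hypothetical values. It says nothing about why Yedlin chose his particular constants, and real meters may implement compensation and calibration differently internally.

```python
import math


def suggested_f_number(luminance: float, iso: float, t_s: float, k: float,
                       compensation_stops: float = 0.0) -> float:
    """f-number from N^2 / t = L * S / K; positive compensation means 'give more exposure'."""
    n_squared = luminance * iso * t_s / k
    return math.sqrt(n_squared / 2.0 ** compensation_stops)


if __name__ == "__main__":
    L, iso, t = 100.0, 800.0, 1.0 / 48.0  # hypothetical reading and settings
    base_k, comp = 12.5, 1.0 / 3.0        # hypothetical K and a +1/3 stop preference
    via_compensation = suggested_f_number(L, iso, t, base_k, comp)
    via_constant = suggested_f_number(L, iso, t, base_k * 2.0 ** comp)
    print(f"+1/3 stop compensation: f/{via_compensation:.3f}")
    print(f"K scaled by 2^(1/3):    f/{via_constant:.3f}")
```

Both routes print the same stop, so for the suggested setting alone the difference is one of bookkeeping: a calibration change is baked into every reading, while compensation is typically something dialled in and out per situation.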

-

@Albion Hockney @David Mullen ASC Thank you for the great replies. I know this has been touched on before, but I am still a bit confused about one thing: if I intend to have windows with no detail in them, is it okay to just clip the windows during acquisition, or should I still capture the information and clip them in post? I ask because I don't understand how I would be able to clip the windows in post without affecting the exposure of the whole scene. I also understand that keeping the information gives better control of the roll-off and avoids the problem of the RGB channels saturating at different levels, which can lead to hue shifts. So what is the best method?
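The hue-shift point can be demonstrated in a few lines: take a warm highlight, raise its exposure, and clip each RGB channel independently at 1.0, as a hard ceiling in the sensor or encoding would. The colour (1.0, 0.8, 0.6) and the clip level are hypothetical; Python's standard `colorsys` module is used only to read off hue and saturation.

```python
import colorsys


def expose_and_clip(rgb: tuple[float, float, float], stops: float,
                    clip: float = 1.0) -> tuple[float, ...]:
    """Scale linear RGB by 2^stops, then clip each channel on its own."""
    gain = 2.0 ** stops
    return tuple(min(c * gain, clip) for c in rgb)


WARM = (1.0, 0.8, 0.6)  # hypothetical warm window highlight, linear RGB

if __name__ == "__main__":
    for stops in (0.0, 0.5, 1.0):
        r, g, b = expose_and_clip(WARM, stops)
        h, s, _ = colorsys.rgb_to_hsv(r, g, b)
        print(f"+{stops} stops -> RGB ({r:.2f}, {g:.2f}, {b:.2f}), hue {h:.3f}, sat {s:.2f}")
```

The orange highlight first drifts toward yellow once red and green have clipped, then turns neutral white when blue clips too, even though the scene colour never changed.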

-

I recently watched Hirokazu Kore-eda's newest film, Monster, in the cinema and was blown away by both the story and its naturalistic beauty. I was especially engrossed by a kind of golden glow that seemed particularly visible in the highlights. I tried to attach screen grabs from the trailer, but they definitely don't do it justice compared to the image I experienced in the cinema. I'm sure that if you have seen the film, it's evident which glow I am talking about. My question is really how they achieved the glow: the images didn't seem particularly soft in terms of acutance, contrast or resolution, but then again it's tough for me to judge from the trailer alone. It also got me thinking about overexposed lights and especially windows (as they are very large), or in this example the highlights of the fish tank. Do people usually expose the frame so that the windows clip, or is it always best practice to keep information in the windows and do the overexposure in post (even if the final shot should have overexposed windows)? If the latter is the case, how would you overexpose them in post without affecting the other highlights? Thank you for your time.
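On overexposing windows in post without touching other highlights: the usual idea is to restrict the push with a matte. A luminance key alone selects every highlight above a threshold, so the sketch multiplies it by a separate matte value standing in for a roto shape around the window. The thresholds, gain and the use of Rec. 709 luma weights on linear values are illustrative assumptions, and the function names are hypothetical, not a Nuke or Resolve API.

```python
def luma709(rgb: tuple[float, float, float]) -> float:
    """Rec. 709 luma weights applied to linear RGB (an approximation of luminance)."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def smoothstep(lo: float, hi: float, x: float) -> float:
    """0 below lo, 1 above hi, smooth S-shaped ramp in between."""
    t = min(max((x - lo) / (hi - lo), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def push_highlights(rgb: tuple[float, float, float], matte: float,
                    lo: float = 0.8, hi: float = 1.5, stops: float = 2.0) -> tuple[float, ...]:
    """Brighten a pixel by up to `stops`, weighted by a luma key times a matte (0..1)."""
    key = smoothstep(lo, hi, luma709(rgb)) * matte
    gain = 1.0 + key * (2.0 ** stops - 1.0)
    return tuple(c * gain for c in rgb)


if __name__ == "__main__":
    window = (2.0, 1.8, 1.6)           # bright pixel inside the window matte
    other_highlight = (2.0, 1.8, 1.6)  # equally bright pixel outside the matte
    face = (0.3, 0.25, 0.2)            # midtone, below the key
    print(push_highlights(window, matte=1.0))
    print(push_highlights(other_highlight, matte=0.0))
    print(push_highlights(face, matte=1.0))
```

Only the pixel that is both bright and inside the matte is pushed; in practice the matte would come from a roto or key in the compositing or grading tool.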

-

DOF in practice and Hyperfocal distance

silvan schnelli replied to silvan schnelli's topic in Lenses & Lens Accessories

@Joerg Polzfusz Thank you for the links, although most of them do seem to be geared mainly toward photography. However, I just noticed, for some reason I hadn't before, that DOFMaster does have a cinematography section in its selection tools. Regarding the Alexa Mini, which has sensor dimensions of 28.25 mm x 18.17 mm (open gate) and a diagonal of 33.59 mm, what is that in "x/y-inch sensor" notation, and why? From what I have seen, a 2/3-inch sensor in the cinema section gives a CoC of about 0.02 mm, which to my knowledge is the estimated CoC of the Alexa Mini for cinema viewing.
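The "x/y-inch" labels are a legacy of vidicon camera tubes: the number referred to the tube's outer diameter, and the usable image diagonal was only about two thirds of it, roughly 16 mm per nominal inch for the larger formats. The sketch applies that rule of thumb to an Alexa Mini open gate of 28.25 mm x 18.17 mm (the height consistent with the 33.59 mm diagonal); 16 mm per inch is an approximation, and smaller formats deviate from it.

```python
import math

MM_PER_NOMINAL_INCH = 16.0  # rule-of-thumb image diagonal per "inch" of tube format


def diagonal_mm(width_mm: float, height_mm: float) -> float:
    """Sensor (or gate) diagonal from its width and height."""
    return math.hypot(width_mm, height_mm)


def optical_format_inches(width_mm: float, height_mm: float) -> float:
    """Approximate 'x-inch type' label using the ~16 mm per inch convention."""
    return diagonal_mm(width_mm, height_mm) / MM_PER_NOMINAL_INCH


if __name__ == "__main__":
    w, h = 28.25, 18.17  # Alexa Mini open gate, in mm
    print(f"diagonal {diagonal_mm(w, h):.2f} mm, roughly a {optical_format_inches(w, h):.1f}-inch type")
```

By this convention the open gate is roughly a 2-inch type, whereas a 2/3-inch type corresponds to a diagonal of only about 11 mm.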

DOF in practice and Hyperfocal distance

silvan schnelli replied to silvan schnelli's topic in Lenses & Lens Accessories

@David Mullen ASC I suppose it would be nice to be able to calculate the hyperfocal distance for a camera, but I guess there are too many unknown factors, especially on the exhibition side. I also feel that focus peaking on monitors, which is what I assume you are referring to, must have a similar issue. I definitely don't know how peaking is engineered or calibrated (the user can also change its sensitivity), but I can imagine that when judging DOF on a monitor, or even a larger display, it will always be shallower on the big screen. However, as you mentioned before, DOF is sometimes calculated. So I am wondering which CoC values would be used: would you use the photography chart values, or are there other values?
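One way to see why exhibition is the unknown: the acceptable circle of confusion on the sensor is the blur a viewer can just resolve on screen, scaled back down by the enlargement from sensor to screen. The sketch assumes viewer acuity expressed as an angle (the 2 arcminute default is a hypothetical choice; different charts use stricter or looser values), a 28.25 mm sensor width, and hypothetical screen sizes and seat distances.

```python
import math


def coc_on_sensor_mm(sensor_width_mm: float, screen_width_m: float,
                     viewing_distance_m: float, acuity_arcmin: float = 2.0) -> float:
    """Largest blur on the sensor that still looks sharp to the assumed viewer."""
    angle_rad = math.radians(acuity_arcmin / 60.0)
    # Blur diameter on screen that subtends the acuity angle at this distance.
    blur_on_screen_mm = 2.0 * viewing_distance_m * 1000.0 * math.tan(angle_rad / 2.0)
    enlargement = screen_width_m * 1000.0 / sensor_width_mm
    return blur_on_screen_mm / enlargement


if __name__ == "__main__":
    sensor_w = 28.25  # mm
    for label, screen_w, dist in (("monitor", 0.6, 1.5),
                                  ("cinema, back row", 12.0, 20.0),
                                  ("cinema, front row", 12.0, 6.0)):
        print(f"{label}: CoC ~ {coc_on_sensor_mm(sensor_w, screen_w, dist):.4f} mm")
```

The same footage tolerates a much larger CoC on a monitor than for a front-row seat in a large cinema, which is consistent with DOF judged on a monitor looking deeper than it plays on the big screen.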

How, and how accurately, do cinematographers pay attention to depth of field when filming? Seeing as depth of field and the intertwined concept of the circle of confusion are very situational and depend on delicate factors such as visual acuity, viewing distance, print or projection size and much more, how diligent are filmmakers about DOF? How would you calculate the hyperfocal distance, for example? Are there DOF charts, do some cinema lenses have an integrated feature to show this, or do people calculate the values manually, and if so, what value do they use for the CoC? Thank you for your time.
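For calculating it by hand, the standard thin-lens approximations are H = f²/(N·c) + f for the hyperfocal distance, plus the near and far limits used below. The sketch takes the CoC c as an input because it depends on viewing conditions; it also uses the f-number, whereas cinema lenses are marked in T-stops, which differ slightly. The example values are hypothetical.

```python
import math


def hyperfocal_mm(focal_mm: float, f_number: float, coc_mm: float) -> float:
    """Hyperfocal distance H = f^2 / (N * c) + f (all lengths in mm)."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm


def dof_limits_mm(focal_mm: float, f_number: float, coc_mm: float,
                  subject_mm: float) -> tuple[float, float]:
    """Near and far limits of acceptable focus; far is infinite at or beyond H."""
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = subject_mm * (h - focal_mm) / (h + subject_mm - 2.0 * focal_mm)
    far = math.inf if subject_mm >= h else subject_mm * (h - focal_mm) / (h - subject_mm)
    return near, far


if __name__ == "__main__":
    f, n, c = 25.0, 4.0, 0.025  # hypothetical 25 mm lens at f/4, CoC 0.025 mm
    h = hyperfocal_mm(f, n, c)
    print(f"hyperfocal: {h / 1000:.2f} m")
    near, far = dof_limits_mm(f, n, c, subject_mm=3000.0)
    print(f"focused at 3 m: {near / 1000:.2f} m to {far / 1000:.2f} m")
```

Focusing at the hyperfocal distance gives acceptable sharpness from half that distance to infinity; changing c, for example for a different viewing assumption, moves every one of these numbers.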

-

Panning around the Entrance Pupil (Nodal Point)

silvan schnelli replied to silvan schnelli's topic in General Discussion

@Daniel Klockenkemper Thank you for the great response.

Tagged with: entrance pupil, movement (and 1 more)