Posts: 960

Everything posted by Perry Paolantonio

-

Dye Fade Tool in Diamant Restoration Suite

Perry Paolantonio replied to Todd Ruel's topic in Post Production

I can only speak to our experience, which was absolutely abysmal. It crashed so consistently, and in such a way, that it obliterated tens of hours of saved work. When it would crap out, it would take the project database files with it, so there was no way to recover work you had done (and saved). Truly awful. It was also massively resource-intensive, in that almost any fix had many, many tiny files associated with it. Projects that consisted of 6 QuickTime files might balloon to several hundred thousand tiny little files, which made backups painfully slow. It also left a lot of that behind in the folder, so when it crashed, new fix files were created alongside those, resulting in way more junk. Doing an LTO backup of a PFClean project was an all-day affair, even though it wasn't that much data - just a truckload of tiny files that wreaked havoc on the filesystem. We used it on Windows, which at the time was the preferred system. And we built our machine to their spec, which they then changed on us and told us they wouldn't support (even though the system we built was the one they recommended for the version we were running). It was really something. I'll never buy anything from them because of the way they treated us, and I will yell from the rooftops any time I hear of anyone else thinking of it! I will say the "fix frame" tool (I think that's what it was called) worked some kind of crazy miracles on footage we had with greasy fingerprint marks only in the blue channel. I don't know what it did, but it wiped them out instantly, and those fingerprints were on either side of every splice in a feature. Other than that - junk, as far as I'm concerned.

-

MTI's software is great for manual work. They're way behind the curve on the automated side of things, though. Phoenix Dry Clean is really good at that part, but the licensing sucks. Manual cleanup on MTI is miles ahead of anyone else, and once you get into a rhythm, you can move really fast. But because the app only works with DPX files, it's a bit of a clunker and requires a ton of disk space. Most of the other applications work with ProRes or DPX (or EXR, or other formats too), and since the vast majority of the work we do comes in as ProRes, with only a few exceptions, that's really important and has kept us from going back to MTI. If this has changed, I'm all ears! I learned on MTI Correct 20+ years ago. The fact is, there is no single tool that does everything well. If I had to pick two to use on the regular, it'd probably be MTI and Phoenix. With our permanent (older) Phoenix Touch license, we can do almost everything we'd need to do, but the full version of Phoenix gets you access to the grading tools, which are useful for things like scratch concealment. PFClean is utter garbage and the company behind it is even worse. They won't admit when they have problems and they won't stand behind their software. We lost tens of thousands of dollars in completed work because of the crash fest that was PFClean. Absolute nightmare. The only serious contenders here are MTI, Phoenix, and Diamant.

-

They sell it outright (permanent license) and it's about $20k. The annual fee Todd is talking about is probably the support contract (which presumably comes with software updates, if available), which I think is in the $3k range. This is a little different from an annual "subscription." We looked into getting it in 2016 and checked in on the pricing recently because I'm not very happy with the direction Filmworkz (Phoenix) is heading with their subscription-only pricing. In a subscription model you lose the software when you stop paying. In a permanent license model with a support contract, you buy it outright but get updates and support for your annual fee. Diamant uses a physical USB dongle, but they also have a license server, so they must offer a monthly version as well (which a lot of these applications do, in case you only use them occasionally).

-

Film Development and Scanning in Europe

Perry Paolantonio replied to Kevin Staub's topic in Post Production

MTI Film isn't a lab, it's a software company.

-

You're making my point here. Our ScanStation is now 12 years old. It was the first one Lasergraphics shipped - so old it doesn't even have their logo branding on it, because I told them I didn't want to wait for them to get that and apply it. It was delivered as a 2k, 8mm/16mm-only scanner. And precisely because it's modular and based on a PC, we were able to continually upgrade it so that it can now scan 12 different film gauges/formats, from 8mm through 35mm, on the same basic chassis we bought in 2013. All of these upgrades were done in-house, and each took about an hour. It wasn't hard.

What on earth is a Windows Specialist? Our ScanStation was upgraded to 6.5k as soon as that camera came out, and it came with a turnkey PC. We haven't touched Windows on that machine since then, and we use it daily. I think that was, what, 4 or 5 years ago? Windows is a pig, but it works just fine and isn't that complicated or hard to use. The vast majority of computers in business run Windows. Is it as nice to use as a Mac? Not really, but it's not exactly exotic or an unknown quantity.

No. First, the ScanStation is running a $400 ASUS gaming motherboard and, I think, a Core i7 CPU. The dual GPUs in it weren't even that high end when it came out: GTX 1060s, if I'm not mistaken. Upper mid-range, at best. Second, any computer you buy - Mac or Windows - is going to be superseded by new tech in two years. But if you want to talk about high-end Windows PCs, the machine we run Phoenix on cost about $5000 to build from scratch, and it took me a couple of hours to put together. It's a 32-core Threadripper on a Supermicro server motherboard. I built that 2 years ago and it's still in daily use restoring 6.5k scans.

This is a red herring that has nothing to do with the FPGA. The BMD scanner is fast because it's moving the raw, un-debayered image over Thunderbolt, and the image is debayered afterwards, in the PC. That's not the same, in terms of bandwidth, as moving that much uncompressed (DPX, EXR, etc.) data over the same pipe. Different thing entirely.

I never said anyone should or would roll their own. I'm using my *actual* experience with this stuff to try to explain to you how it works. But twist my words if you must. As for the timeframe, yes, it's taken a long time - that's because I've been running a business and doing this stuff when I have time, which there isn't a lot of. My actual labor hours over the past few years on the coding part of the process are probably less than 200. And that's for the whole application, which includes the user-facing front end, all the image processing, and all the communication with the frame grabber, the motion controller, and the lighting setup. This is hardly any time at all; it's just spread out over a longer period for external reasons.

Um, actually they don't, and BMD certainly is not. I'm not familiar enough with the inner workings of the Arri and the Scanity to say. The Spirit hasn't been made for years, so it really shouldn't factor in. My understanding of the Arriscan XT is that much more is done on the computer than in the original version of the scanner, which had a lot more proprietary image processing hardware. Nobody wants to have to make that stuff if they don't have to - it's expensive and takes time and resources, so why reinvent the wheel when a software-based solution using more generic hardware is readily available? Lasergraphics and Kinetta both send raw data to the PC, where it is processed - not a finished image at all. In fact, both are sending the raw data from the camera, which is why they're able to do it over relatively low-bandwidth connections. BMD is NOT sending a finished DPX or TIFF image over Thunderbolt from the scanner - it's sending camera raw and debayering it in the computer, and that's how they're working at high speed.
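To put rough numbers on the bandwidth point, here is a minimal Python sketch comparing one raw Bayer frame with the same frame stored as 10-bit RGB DPX. The 4096x3072 frame size, 12-bit raw packing and 24 fps rate are illustrative assumptions, not the specs of the BMD Cintel or any other scanner, and file headers are ignored.

```python
def raw_bayer_bytes(width: int, height: int, bits_per_sample: int = 12) -> int:
    """Bytes for one raw Bayer frame: one sample per photosite, tightly packed."""
    return width * height * bits_per_sample // 8


def dpx_rgb10_bytes(width: int, height: int) -> int:
    """Bytes of image data for one 10-bit RGB DPX frame (header not included).

    10-bit RGB DPX is commonly packed as three 10-bit samples per 32-bit
    word, so each debayered pixel costs 4 bytes.
    """
    return width * height * 4


def megabytes_per_second(frame_bytes: int, fps: float) -> float:
    """Sustained data rate in MB/s (1 MB = 1,000,000 bytes)."""
    return frame_bytes * fps / 1_000_000


if __name__ == "__main__":
    w, h, fps = 4096, 3072, 24  # hypothetical frame size and scan rate
    raw = raw_bayer_bytes(w, h)
    dpx = dpx_rgb10_bytes(w, h)
    print(f"raw Bayer:  {raw / 1e6:.1f} MB/frame, {megabytes_per_second(raw, fps):.0f} MB/s")
    print(f"10-bit DPX: {dpx / 1e6:.1f} MB/frame, {megabytes_per_second(dpx, fps):.0f} MB/s")
    print(f"ratio: {dpx / raw:.2f}x")
```

Under these assumptions the raw frame is about 18.9 MB and the DPX frame about 50.3 MB, so the debayered stream needs roughly 2.7 times the bandwidth (32 bits per pixel versus 12) before headers are counted.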

-

FPGAs are used in cameras because you can't fit a computer in a camera. An FPGA is a chip, not a full-blown system, which is why they're used in embedded systems. IMO, using an FPGA in the scanner is a dumb design decision, not a smart one. It's inherently limiting and doesn't allow you to adapt to change as easily. Everything about an FPGA is custom and proprietary. With a PC-based system using off-the-shelf parts, yes, you might need 2 GPUs, but I mean, who cares? You're connecting the scanner to a computer anyway, and you have built-in flexibility to upgrade capabilities at any time. With an embedded processor you do not. What does it matter if some of the work is done in the scanner or externally? The end result is the same.

I don't think you understand how easy it is to do stuff like machine vision perf registration. Using open source tools like OpenCV, on modest hardware, you can do two or three image transforms to locate and reposition the frame in a matter of milliseconds, on images that are 14k in size. The PC running our scanner was built in 2018 and can do this on 14k images in under 100ms. And that's on the CPU, by the way; no GPU is involved in our setup. It'll be faster with a newer machine, which will happen when we're done coding and know what bottlenecks we need to address.

Most frame grabber PCIe boards are available in FPGA-equipped versions, which can do some image analysis/machine vision work on board. So yes, of course this is all possible to do inside the scanner as well, using the same chips. But doing that requires substantial programming resources, and you're limited by the capabilities of the FPGA you choose. The cards we're using in our 70mm scanner can do this. I looked into it, and honestly it wasn't worth the effort to program the FPGA, because that locks you into the card manufacturer's API. Instead, we're using generic camera communication protocols (which are built into the card's drivers), which would let us swap out the camera and frame grabber for any other that uses GenICam - which is to say, a lot of machine vision cameras. It doesn't limit us to a particular interface, a particular brand of frame grabber, or a particular brand of camera. Right now we use CoaXPress. We could swap that camera and frame grabber for a 25GbE setup and not have to change a single line of code.
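As a rough illustration of the locate-then-reposition idea behind perf registration, here is a minimal sketch that finds the perforation by thresholding, takes its centroid, and shifts the frame so the perf lands on a fixed reference position. It is not the author's code and not OpenCV (which the post mentions); it is plain Python on a tiny synthetic frame so the logic is easy to follow, and it would be far too slow for 14k images. The threshold of 200 and the reference position are assumptions.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # grayscale frame: list of rows of pixel values


def perf_centroid(frame: Frame, threshold: int) -> Optional[Tuple[float, float]]:
    """Return the (x, y) centroid of all pixels brighter than `threshold`.

    Assumes the backlit perforation is the brightest region in the frame.
    Returns None if no pixel exceeds the threshold.
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value > threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return sum_x / count, sum_y / count


def translate(frame: Frame, dx: int, dy: int, fill: int = 0) -> Frame:
    """Shift the frame by whole pixels (dx right, dy down), padding with `fill`."""
    height, width = len(frame), len(frame[0])
    out = [[fill] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            src_x, src_y = x - dx, y - dy
            if 0 <= src_x < width and 0 <= src_y < height:
                out[y][x] = frame[src_y][src_x]
    return out


def register(frame: Frame, reference: Tuple[float, float], threshold: int = 200) -> Frame:
    """Move the detected perf centroid onto `reference` (integer-pixel shift only)."""
    found = perf_centroid(frame, threshold)
    if found is None:
        return frame  # no perf detected: leave the frame untouched
    dx = round(reference[0] - found[0])
    dy = round(reference[1] - found[1])
    return translate(frame, dx, dy)
```

A production version would work at subpixel precision, search only the perf area, and use vectorized or GPU-accelerated routines; this version only shows the logic of finding the perf and moving the frame to a fixed position.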

-

A full-immersion wet gate won't fill in the really deep scratches, but it absolutely will conceal most of the others. Scratches are visible because photons travelling in a straight line (collimated light) hit the edge of the scratch and refract, which reveals the scratch on the imaging sensor on the other side of the film. This is why perc (perchloroethylene) and other liquids with the same refractive index as the film base work well with collimated light sources in liquid gates: they fill the scratch and allow the photons to pass straight through as if the edge of the scratch were never there. But if the scratch is very deep, and a lot of scratches are, you're still going to see it even with a liquid gate. It will be reduced in severity, yes, but it won't be eliminated. And of course, as you point out, this only works on the base side, not the emulsion, and you need to use the right liquid, not something like isopropyl alcohol, as some "wet gate" scanners do. Really diffuse light goes a long way towards concealing superficial scratches, as I said above. Way back, I scanned some film on our Northlight, which didn't have particularly diffuse lighting, and on the ScanStation, which uses diffuse lighting, and the flurry of superficial base scratches disappeared on the ScanStation but was plainly visible in the Northlight scans. Not sure if I still have those files, but if I can find them I'll post them. It was about 10 years ago when I did that test. Back to this thread, though: the BMD Cintel doesn't use a collimated light source. In fact, I can't think of any recent commercially available scanners that do - they're all diffuse now, I think, so they all do a decent job of masking light scratches, though I'm sure some do it better than others.
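The refraction argument can be checked with Snell's law. This minimal sketch models a single flat scratch wall hit by a collimated ray and reports how far the ray bends when the scratch is filled with air versus perc. It is a deliberate simplification (one interface, no diffuse light, no emulsion side), and the refractive indices are approximate values assumed for illustration: about 1.48 for triacetate base and about 1.505 for perchloroethylene. The 30-degree wall slope is hypothetical.

```python
import math
from typing import Optional

# Approximate refractive indices, assumed for illustration only.
N_AIR = 1.0
N_TRIACETATE_BASE = 1.48
N_PERC = 1.505  # perchloroethylene


def deviation_deg(n_from: float, n_to: float, incidence_deg: float) -> Optional[float]:
    """Angle (degrees) by which a ray bends crossing a flat interface.

    Uses Snell's law, n_from * sin(t1) = n_to * sin(t2), with t1 measured
    from the interface normal. Returns None when the ray is totally
    internally reflected instead of transmitted.
    """
    t1 = math.radians(incidence_deg)
    s = n_from * math.sin(t1) / n_to
    if s > 1.0:
        return None  # total internal reflection: no transmitted ray
    t2 = math.asin(s)
    return abs(math.degrees(t2 - t1))


if __name__ == "__main__":
    wall = 30.0  # hypothetical slope of the scratch wall, in degrees
    print("air-filled scratch: ", deviation_deg(N_TRIACETATE_BASE, N_AIR, wall))
    print("perc-filled scratch:", deviation_deg(N_TRIACETATE_BASE, N_PERC, wall))
```

With a 30-degree wall, the air-filled scratch bends the ray by roughly 18 degrees while the perc-filled one bends it by only about half a degree, which is why the scratch edge largely disappears once it is filled. Against air, walls steeper than about 42.5 degrees totally internally reflect the light rather than just bending it.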

-

This is more a function of a diffuse light source, which most modern film scanners have. https://lasergraphics.com/scanstation.html - if you scroll down to "Stable Diffuse Light Source," it explains how this works. It negates the need for a wet gate in most cases, because a wet gate does effectively the same thing for superficial scratches, but only if the light source is collimated. I don't think the BMD Cintel has ever had a collimated light source, so this probably isn't a new feature, but maybe it's one they decided to show off at the show?

-

Scratch reduction has no business happening *during* the scan. It's an inherently destructive process, no matter what algorithm is used, and it will leave artifacts. Presumably, they're drawing on the IP from the Cintel Oliver, which did real-time scratch reduction and dustbusting - very poorly, I might add - in hardware. Even the best high-end restoration software can't completely get rid of all scratches without significant manual intervention, and it runs on much more powerful desktop computers, not just any old Mac you can plug into the scanner. And those systems can't do it in real time. I didn't go to NAB this year, but if it looked good on the show floor, odds are they demonstrated a best-case scenario. I remember when the BMD Cintel first came out, I pointed out all the FPN (fixed pattern noise) in the scans on the show floor at NAB to their engineers. The next day they were using a different reel of film.

-

Metropolis Post in NY has a 4k Director. To be honest, I'm not sure you're going to see that much difference with a third flash, but if you do some tests on that machine, I'd be curious to see the difference between 2-flash and 3-flash scans of your footage.
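For anyone comparing 2- and 3-flash scans, here is a minimal sketch of one common way to merge several exposures of the same pixel: take the longest exposure that isn't clipped and divide it by its relative exposure. This is an assumed, generic HDR merge for illustration only; the post doesn't describe how the Director combines its flashes, and real implementations blend exposures and model noise more carefully. The exposure ratios and the 4095 clip level in the usage are hypothetical.

```python
from typing import Sequence


def merge_flashes(samples: Sequence[float], exposures: Sequence[float], clip: float) -> float:
    """Estimate a pixel's linear transmittance from several flashes.

    Picks the longest exposure whose sample is below `clip` (best signal
    in dense areas without saturating) and normalises it by that exposure.
    """
    if len(samples) != len(exposures) or not samples:
        raise ValueError("need one sample per exposure")
    best = None
    for value, exposure in zip(samples, exposures):
        if value < clip and (best is None or exposure > best[1]):
            best = (value, exposure)
    if best is None:
        # Every flash clipped: fall back to the shortest exposure.
        value, exposure = min(zip(samples, exposures), key=lambda pair: pair[1])
        return value / exposure
    return best[0] / best[1]


if __name__ == "__main__":
    relative_exposures = [1, 4, 16]  # hypothetical 3-flash ratios
    print(merge_flashes([100, 400, 1600], relative_exposures, clip=4095))   # dense area
    print(merge_flashes([2000, 4095, 4095], relative_exposures, clip=4095))  # thin area
```

In this model an extra flash just adds another candidate exposure per pixel, so it only changes the result for densities the existing flashes can't already capture cleanly.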

-

Sorry to hijack your thread. I just wanted to make it clear that what Tyler posted, that MP4 = H.264, was not factually correct, and that MP4 has a long history of supporting other codecs. But apparently I know nothing after 30+ years of working with digital media. Go figure. But to respond to your original question - you might look at using the x264 encoder. Years ago, I wrote my own front-end app for in-house Blu-ray encoding that basically fed batches of files to the command-line x264 encoder. We used it for a decade for encoding commercial Blu-rays, and the quality was miles ahead of anything that could be done with the very expensive Blu-ray encoders of that time (CinemaCraft, Sonic, etc.). It's a *very* good encoder, and it handles film grain pretty well. We had a client who released a lot of '80s horror films, which had very crunchy grain, and we regularly made 20 Mbps encodes that both maintained the grain structure and lacked compression artifacts. You couldn't do that with any of the other encoders; they just weren't good enough.
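The front end described above isn't shown, so here is a hypothetical minimal Python version of the same idea: build one x264 command line per source file and run them in sequence. The flags used (`--preset`, `--tune grain`, `--bitrate`, `--bluray-compat`, `--level`, `--vbv-maxrate`, `--vbv-bufsize`, `-o`) are standard x264 options, but the `.y4m` inputs, the preset and the rate-control values are assumptions, and this is not a complete Blu-ray-compliant flag set (a real authoring spec also pins down GOP structure, slices, HRD signalling and more).

```python
import subprocess
from pathlib import Path
from typing import List


def x264_command(source: Path, out_dir: Path, bitrate_kbps: int = 20000) -> List[str]:
    """Build (but do not run) an x264 command line for one source file."""
    output = out_dir / (source.stem + ".264")  # raw H.264 elementary stream
    return [
        "x264",
        "--preset", "veryslow",
        "--tune", "grain",              # x264's tuning for grainy film sources
        "--bitrate", str(bitrate_kbps),  # 20000 kbps = 20 Mbps average
        "--bluray-compat",              # restrict features to Blu-ray-compatible ones
        "--level", "4.1",
        "--vbv-maxrate", "40000",
        "--vbv-bufsize", "30000",
        "-o", str(output),
        str(source),
    ]


def batch_commands(sources: List[Path], out_dir: Path) -> List[List[str]]:
    """One command per source file, in the order given."""
    return [x264_command(src, out_dir) for src in sources]


def run_batch(commands: List[List[str]]) -> None:
    """Run each encode in turn; check=True stops the batch on the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

Building the argument lists separately from running them keeps the batch logic easy to test, and passing each list straight to `subprocess.run` avoids shell-quoting problems with odd file names.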

-

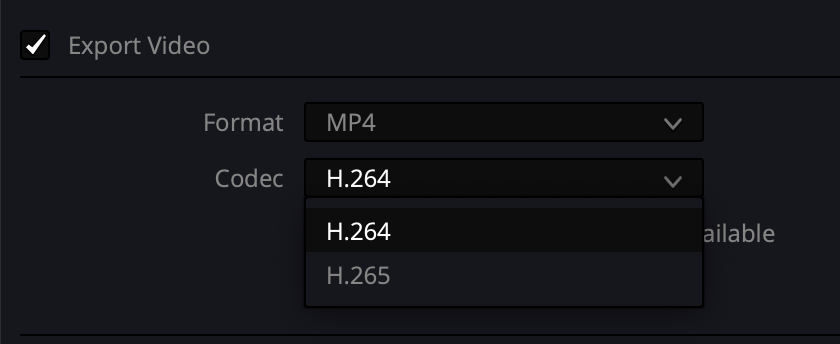

It's really tiring, the way you try to derail conversations like this when someone calls you out for being wrong. So I'm sorry to everyone reading this if it sounds like I'm being a jerk. People read things in a forum like this and assume they're correct, because most of the folks posting here know what they're talking about. Incorrect information has to be corrected or else it flourishes. I mentioned JPEG2000 as an example of a codec MP4 supports that's not an MPEG variant, after you said that any codec in MP4 has to be MPEG of some flavor. Then you run with that as if I'm saying everyone does this. That's a pretty common way of deflecting attention when someone points out a mistake. For the record, I'm not saying JPEG2000 in MP4 is often used. That was never the point. I'm just saying that you could use it, after you said you couldn't. You also say that H.265 in MP4 is "theoretically" possible. Well, it's more than theoretical. Have a look at this screenshot, taken from Resolve 19's Deliver page. These are the codecs Resolve supports for MP4 export on the Mac. If you're going to answer people's questions, at least answer them correctly.

-

No, it's not. As @Fabian Schreyer correctly stated, MP4 is a file format - a container. Within that container you can have a variety of codecs. MP4 files can contain H.264/AVC (MPEG-4 Part 10), a very specific variant of which Blu-ray uses, but there are plenty of ways to make H.264 files that are *not* the same as Blu-ray's subset and will not work on Blu-ray. These are used for a variety of players. MP4 can also contain H.265/HEVC (MPEG-H Part 2), and even ProRes can be wrapped using ISO Media (MPEG-4 Part 12), the base format MP4 is built on, among other codecs. In addition to video codecs, MP4 also supports a range of audio formats and text data (subtitles). This doesn't always work and is bad practice. It's also worth noting that MP4 export differs between the Mac, Windows, and Linux versions of Resolve. Some allow more features than others. Some allow hardware acceleration of encoding, others do not. The end result is not identical, in our experience, so it requires a bit of experimenting.
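To make the container-versus-codec distinction concrete, here is a minimal Python sketch that lists the top-level boxes of an MP4 (ISO Base Media File Format) file. Every box is just a size and a four-character type, whatever codec is inside; the codec is identified separately, by a four-character sample-entry code inside the `moov` box's sample description (`stsd`), for example `avc1` for H.264 or `hvc1` for HEVC. The sketch does not descend into `moov`, and the test bytes are synthetic.

```python
import struct
from typing import List, Tuple


def list_boxes(data: bytes) -> List[Tuple[str, int]]:
    """List (type, size) for the top-level boxes in an ISO BMFF / MP4 byte string.

    Each box starts with a 32-bit big-endian size and a 4-character type.
    size == 1 means a 64-bit size follows the type; size == 0 means the
    box runs to the end of the file.
    """
    boxes = []
    offset = 0
    while offset + 8 <= len(data):
        size, box_type = struct.unpack(">I4s", data[offset:offset + 8])
        header_len = 8
        if size == 1:
            if offset + 16 > len(data):
                break  # truncated 64-bit size field
            (size,) = struct.unpack(">Q", data[offset + 8:offset + 16])
            header_len = 16
        elif size == 0:
            size = len(data) - offset
        if size < header_len:
            break  # malformed box: stop rather than loop forever
        boxes.append((box_type.decode("latin-1"), size))
        offset += size
    return boxes
```

For a real file, `list_boxes(open(path, "rb").read())` typically includes `ftyp`, `moov` and `mdat`; reading the whole file into memory is fine for a sketch but not for multi-gigabyte masters.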

-

Respectfully, Daniel - you don't know what you're talking about. You keep trying to compare motion picture film to what you know from still photo and while they are both film and there are some similarities, they are different in many ways and the workflows and software used to manipulate the files are completely different. The film stocks are different, and the methods of scanning them are different. You can't compare them.

-

Then instead of coming here (quite literally for years) to complain about the price of scanners, why don't you build one and find out how easy it is?

-

I've been in business for 25 years. "Make it up in volume" is an illusion, more so when you're only selling a few hundred, or maybe a few thousand, of something at most, over many years. Lower the price and go out of business. You can't make money if you're selling for less than it costs to make. And "cost to make" includes R&D, inventory, manufacturing, tooling, salaries, shipping, marketing, office space, lawyers, and accountants. You don't "spread that out over years" if you're a small company. You borrow money you have to repay, whether from investors, banks, or your own savings. Honestly, I don't know why I bother engaging with you. It's like talking to a 12-year-old.
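The arithmetic behind "make it up in volume" fits in a few lines of Python. All figures in the usage note are hypothetical round numbers chosen for illustration, not any scanner maker's actual costs.

```python
def unit_cost(fixed_costs: float, variable_cost: float, units: int) -> float:
    """Full cost per unit: parts/labour per unit plus fixed costs spread over `units`."""
    if units <= 0:
        raise ValueError("units must be positive")
    return variable_cost + fixed_costs / units


def break_even_units(fixed_costs: float, variable_cost: float, price: float) -> float:
    """Units needed to recover fixed costs; infinite if price <= variable cost."""
    margin = price - variable_cost
    if margin <= 0:
        return float("inf")  # every sale loses money, so no volume ever breaks even
    return fixed_costs / margin
```

With a hypothetical $2M in fixed costs (R&D, tooling, salaries and so on) and $20k per unit in parts and labour, selling 200 units means each one really costs $30k. Cut the price to $25k and you'd need to sell 400 units just to break even; at or below $20k, no volume ever gets you there.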

-

And they would promptly go out of business. What part of this are you not getting? An SLR and a machine vision camera aren't the same thing. You can't compare them.

-

I don't know how to respond to this because you're just jumbling up a bunch of terminology. Maybe read up on how log scans and data transfers work?

-

You don't "set exposure" when doing a data scan. I suspect what you're doing is basically a one-light scan in the scanner, essentially grading the image post-capture in the scanner software. That's different, and it's not how most scanners work. And that will definitely allow you to blow out highlights. It's just not how a data scan of film is done, but the FilmFabriek scanner probably doesn't work that way. It has nothing to do with SDR vs. HDR scanning either.
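For readers unsure what separates a data (log) scan from a one-light, here is a minimal Python sketch of a log encoding: the scanner records film density, and every density maps to a code value by a fixed rule, so there is no per-shot exposure decision to make. The Cineon-style constants (film base at code value 95, 0.002 density per code value, 10-bit range) are assumed as one well-known example; actual scanners measure printing density per channel and may use different mappings.

```python
import math


def density(transmittance: float) -> float:
    """Optical density D = -log10(T) for transmittance T in (0, 1]."""
    if not 0.0 < transmittance <= 1.0:
        raise ValueError("transmittance must be in (0, 1]")
    return -math.log10(transmittance)


def cineon_code_value(d: float, d_base: float, black: int = 95, step: float = 0.002) -> int:
    """Map a density to a 10-bit Cineon-style code value.

    The film base (d_base) lands on `black`; each `step` of density above
    the base adds one code value. Results are clamped to 0..1023.
    """
    cv = black + round((d - d_base) / step)
    return max(0, min(1023, cv))
```

With a hypothetical base density of 0.3, a patch at density 1.3 lands on code value 595, and only densities more than about 1.86 above base hit the 1023 ceiling. The look is set later in the grade, not baked in at capture.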

-

If this is how you're scanning film, you're doing it wrong. The exposure of the image on the film is irrelevant.

-

Then there's a problem with either your scanner or your scanning methodology, because this shouldn't happen. If the first shot is set up correctly and you're doing a flat scan, nothing should ever be over- or underexposed. We pay for an annual support contract. The system is technically connected to the internet, but we don't use it for anything other than scanning. It gets security updates only. We don't touch any other Windows updates on that machine unless necessary, because it works. I don't know what to say. We have half a dozen Windows machines here and they're fine. I've been using Windows since the 1990s, and while I don't especially like the operating system, it's not something I'd ever describe as "constantly breaking." We are a small shop - 3 of us. I do all the hardware and software maintenance myself. It's not that hard.
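Here is a minimal sketch of what "setting up the first shot correctly" for a flat scan could look like, under stated assumptions: the scanner reports a linear sensor reading through clear film base, and the light or exposure time can be scaled by a single gain. The 12-bit maximum (4095) and the target of 3600 (about 88% of full scale) are hypothetical numbers, not any scanner's defaults. Because the base is the least dense part of the image area, nothing in the picture can read brighter than the base does, so once the base sits below full scale no later frame can clip.

```python
def exposure_gain(base_reading: float, target: float, sensor_max: float) -> float:
    """Scale factor for light/exposure so clear film base reads at `target`.

    `base_reading` is the linear sensor value through the film base at the
    current setting. The gain is meant to be set once, on the first shot,
    and then left alone for the whole reel.
    """
    if not 0 < target < sensor_max:
        raise ValueError("target must be above zero and below the sensor maximum")
    if base_reading <= 0:
        raise ValueError("base reading must be positive")
    return target / base_reading


def reading_after_gain(reading: float, gain: float, sensor_max: float) -> float:
    """Predicted sensor value after applying `gain`, clipped at full scale."""
    return min(reading * gain, sensor_max)
```

With the gain fixed this way, the dense end of the film is limited only by the sensor's dynamic range and noise floor, not by how the shot was exposed in camera.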
