Michael Rodin

Basic Member
Posts: 298

Profile Information
Occupation: Cinematographer
Location: Moscow
My Gear: Cinealtas & Arri SR line


-

SR3HS comes with 2 mags, a viewfinder extension, bridgeplate, 19 mm rods, MB16 mattebox, heated eyecup, ZV4 timecode/data splitter, Transvideo Rainbow monitor. IVS can be swapped for an HD video assist. As far as I remember, I had every ground glass except for 2.39:1 'scope - but who would shoot it on S16 anyway? No onboard batteries, as it's powered by box/brick ones, but the swivel battery bracket is there. Messaged you with the price. There's also an SR1 with a PL mount, converted to S16, of course. Sold for significantly less.

-

I could sell you my SR3HS. It's got an IVS.

-

Meteop 5-1 17-69mm lens order

Michael Rodin replied to Brian Orme's topic in Lenses & Lens Accessories

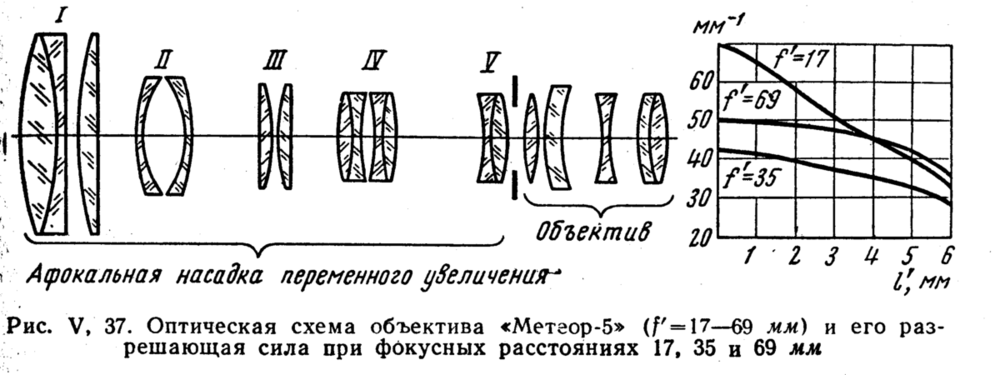

The Meteor 5 I see in the 1978 Volosov textbook is a little different, with a cemented doublet and a plano-convex element in front. The 'main lens' is what focuses light on film; the other part is a small Galilean telescope of variable magnification.

-

Studies done on soft light & inverse square law

Michael Rodin replied to Stephen Sanchez's topic in General Discussion

Etendue is a pretty fundamental thing, certainly not limited to imaging or focused light. You can think of it as the 'spread' or 'divergence' of light. Or you could think of brightness as a 'conversion multiplier' that gives the luminous flux of a beam with a given etendue: ddФ = L*ddG for any point emitting or receiving light. If we integrate it over the directions of rays (to get dФ, the full flux from a single point) and then over the surface of the light source/receiver, we get the flux Ф, which is basically power, in either watts or lumens (for the visible spectrum). Since it's dW/dt, with W for energy, it's conserved in a closed system. As long as no diffuse reflection or scattering is involved, we can treat a beam of light as a kind of 'isolated volume' (that's very imprecise language, so don't take it as a legitimate definition) where the flux is 'confined'.

And a pretty remarkable fact of geometric (or maybe actually Hamiltonian) optics is that etendue is conserved too. This means that, transmission losses aside, even the brightness L is conserved and is thus the same for an object point and its image point!

For practical lighting calculations, the general formula ddФ = L*cos(normal angle)*d(area)*d(spread), plus the conservation laws, gives us a multitude of useful physical quantities (that are derivatives of Ф) and formulae such as Lambert's. E.g. the exitance at a point of the source is dM = ddФ/dS = L*cos(norm.ang.)*d(spread), and illuminance dE is the same expression, but for the receiving side.

For a Lambertian source, L = const: it's equally bright from any angle. And yes, a diffuse bounce is close to Lambertian. But the cosine is still there, and it means that, seen from an oblique angle, the apparent area of the source shrinks by a factor of cos(norm.ang.). That's why rays coming from the edges on your sketch contribute less to the illumination than the center rays. No need for a concept of collimation here, it'll only confuse. Actually, a perfectly collimated beam (which is impossible) has no falloff since its area is constant.

Feel free to have a chat.
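For reference, the relations above can be written out compactly. This is only a sketch under a few assumptions that are mine, not part of the original post: θ is the angle between the ray and the surface normal, dA is the element of area, dΩ is the element of solid angle (the 'spread'), and the light travels in air (refractive index 1). The last line evaluates the integral over a full hemisphere for a Lambertian surface, where L is constant.

```latex
\begin{align}
  d^2\Phi &= L \, d^2G, \qquad d^2G = \cos\theta \, dA \, d\Omega \\
  \frac{d^2\Phi}{dA} &= L \cos\theta \, d\Omega \\
  \frac{d\Phi}{dA} &= L \int_{\text{hemisphere}} \cos\theta \, d\Omega
    = L \int_0^{2\pi} d\varphi \int_0^{\pi/2} \cos\theta \sin\theta \, d\theta
    = \pi L
\end{align}
```

The cos θ factor in the second line is the same cosine that makes the edge rays of a large flat source count for less than the center rays.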
It's not easy to find the right read when you've just started out with the topic. So don't wait till you consider yourself educated - it'll surely be a long trip down the rabbit hole.

-

Studies done on soft light & inverse square law

Michael Rodin replied to Stephen Sanchez's topic in General Discussion

https://en.wikipedia.org/wiki/Etendue to get one started. As a quick rough guess, to get illuminance E(L) at distance L you'd have to integrate Intensity(r)*cos(angle with the normal)*dS/(r^2+L^2) over the surface of a large light source, r being the distance of the emitting point from the source's center. Then you can make some series expansions that will show E(L) is close to the inverse square law and approaches it when L is large enough compared to the source radius. It's as simple as this if every point of the large source emits a cone of light wide enough (and uniform over angle) that its rays reach the observer at any distance. The ideal case is a Lambertian source - a frame of thick diffusion is pretty close to it.

If you move far enough from a spotlight (there's a so-called beam forming distance - not sure what the correct English term is), you become illuminated by rays coming from all parts of its lens' aperture, and then the illuminance starts to decrease with the square of distance. No wonder, as the lit area increases with the square of it: the whole inverse square thing comes from any surface being proportional to the squared linear dimension, and from the conservation law, of course. Close to the (quasi-)collimated light source (less than around D/tan(beam spread/2)) you won't observe an inverse-square relationship, as the 'apparent lens aperture' will be widening.
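The integral described above can be checked numerically. The sketch below is an illustration under my own assumptions, not something from the thread: the source is a flat, uniform Lambertian disk, the observer sits on its axis and faces it, and the squared distance from a ring of radius r to the observer is taken as r^2 + d^2. The function names and the sample distances are made up for the example.

```python
import math


def disk_illuminance(luminance, radius, distance, steps=20000):
    """On-axis illuminance from a flat Lambertian disk.

    Sums the contributions of thin rings with the midpoint rule.
    """
    dr = radius / steps
    total = 0.0
    for i in range(steps):
        r = (i + 0.5) * dr
        rho2 = r * r + distance * distance      # squared ring-to-observer distance
        cos_theta = distance / math.sqrt(rho2)  # same angle at emitter and receiver
        ring_area = 2.0 * math.pi * r * dr
        total += luminance * cos_theta * cos_theta * ring_area / rho2
    return total


def point_source_illuminance(luminance, radius, distance):
    """Inverse-square estimate: the whole disk treated as one distant point."""
    return luminance * math.pi * radius ** 2 / distance ** 2


if __name__ == "__main__":
    source_radius = 1.0  # hypothetical source radius, arbitrary units
    for d in (0.5, 1.0, 2.0, 5.0, 10.0):
        exact = disk_illuminance(1.0, source_radius, d)
        approx = point_source_illuminance(1.0, source_radius, d)
        print(f"d/R = {d:5.1f}  disk/inverse-square = {exact / approx:.3f}")
```

The printed ratio equals d^2/(R^2 + d^2) for this geometry, so it climbs towards 1 as the distance grows relative to the source radius: close to a big source the falloff is much gentler than inverse square.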

-

Lamp power is P = U*I = U^2/R. Resistance of a round wire is R = 4*r*L/(pi*D^2), where L is length and D is diameter (the cross-section area being pi*D^2/4). Resistivity r (in ohm-meters) depends not only on the alloy, but on the temperature as well, which complicates things a lot.
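As a rough sketch of these two formulas, assuming a round wire of cross-section pi*D^2/4: the filament dimensions, the 230 V supply and the hot resistivity below are illustrative placeholders I picked, not data for any real lamp. Only the room-temperature resistivity of tungsten (about 5.6e-8 ohm-m) is a standard handbook figure.

```python
import math


def wire_resistance(resistivity, length, diameter):
    """Resistance of a round wire: R = r * L / (pi * D^2 / 4)."""
    cross_section = math.pi * diameter ** 2 / 4.0
    return resistivity * length / cross_section


def lamp_power(voltage, resistance):
    """Power dissipated in a resistive filament: P = U^2 / R."""
    return voltage ** 2 / resistance


if __name__ == "__main__":
    length = 1.0        # m, hypothetical filament wire length
    diameter = 1.0e-4   # m, hypothetical wire diameter (0.1 mm)
    voltage = 230.0     # V, assumed supply voltage

    rho_cold = 5.6e-8   # ohm-m, tungsten near room temperature
    rho_hot = 9.0e-7    # ohm-m, assumed order of magnitude at operating temperature

    for label, rho in (("cold", rho_cold), ("hot", rho_hot)):
        r = wire_resistance(rho, length, diameter)
        print(f"{label}: R = {r:.1f} ohm, P = {lamp_power(voltage, r):.0f} W")
```

The gap between the cold and hot figures is the temperature dependence mentioned above: the same filament draws far more power at switch-on than once it has heated up.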

-

optical science looking for books about optics

Michael Rodin replied to Dmitrii Sinitsyn's topic in Lenses & Lens Accessories

Welcome, Dmitrii! I'll name mostly Russian books.

Topics 1-4 and 6 are all dealt with in paraxial optics, which is covered in every book on lens design and geometrical optics. You'd look in broad all-round textbooks for the basic stuff:
Заказнов, Теория оптических систем (Theory of Optical Systems)
Русинов, Техническая оптика (Technical Optics)
Malacara brothers, Handbook of Optical Design
Smith, Modern Optical Engineering
Mouroulis, Macdonald, Geometrical Optics and Optical Design

Topics 11, 12 and 14 are represented in lens design/synthesis books where the merits of different lens formulae are discussed:
Kingslake, Optical System Design
'Monographs in Applied Optics' series: Zoom Lenses by Clark etc.
The textbooks mentioned previously discuss formulae too.

There are books on aberration analysis:
Welford, Aberrations of Optical Systems

A classic on diffraction:
Marechal, Structure des images / Структура оптического изображения (Structure of the Optical Image)
and Steward, Fourier Optics: An Introduction

Then there are books that discuss the methodology of lens design and different approaches to correcting aberrations:
Русинов, Композиция оптических систем (Composition of Optical Systems), which is nothing short of brilliant
Laikin, Modern Lens Design
Shannon, The Art and Science of Optical Design
Волосов, Фотографическая оптика (Photographic Optics)
The last one has a chapter on anamorphics. These books help one understand how lens designers have come up with the formulae we know and how you'd modify some basic design to get the desired paraxial parameters and image quality.

There's a peculiar and rather unique book at the junction of optics and mechanics that gives an interesting perspective on topic 13:
Заказнов, Специальные вопросы расчета и изготовления оптических систем (Special Problems in the Design and Manufacture of Optical Systems)

There's a book outlining an elegant and detailed theory of how the image is formed and processed - essentially, how light turns into digital data. This book is heavier on math though; a bit of functional and Fourier analysis is involved:
Мосягин, Немтинов, Лебедев, Теория оптико-электронных систем (Theory of Electro-Optical Systems)

-

So underexposure is the thing now, hey

Michael Rodin replied to Karim D. Ghantous's topic in General Discussion

A thin negative per se is nothing new of course, but the latest fad is underexposure plus low contrast, or "low-con low key" (which already sounds absurd). I'd guess it's a byproduct of soft lighting everything - when there's a small tonal scale and spill everywhere, you're tempted not only to remove fill, but also to bring the key down too much when you're shooting night. Thus only the specular highlights remain in the "plus" zones over gray. While there is a place for murky images (you may want the subject to kind of gradually come out of darkness instead of showing a clear silhouette and features), the extreme examples are usually pure failure. Some are so afraid of making the scene look lit that they virtually end up only filling & accentuating natural light; some are reluctant to rig lights far and high because of mad schedules, no rehearsals, constant re-blocking of the scene, etc. It's not always an artistic intention; it's often the lighting being trash for obvious reasons.

-

1. Yes, a B&W image is technically a little different. On color film, the image is formed by dyes that are produced when developer, oxidized by the exposed silver grains, reacts with the couplers in the emulsion. So it is on some more recent B&W stocks like Ilford XP2. But we tend to use older stocks where the image you see is actually made of sharp "cubical" silver grains. While these emulsions are technically inferior, you'd likely prefer their edge effects and grain, as it provides a sort of "dithering" to the image, fooling the eye into seeing texture and sharp contours that are actually missing.

2. You're either shooting color or B&W. It has to be decided early in preproduction. Everything from production design to wardrobe, makeup and hair depends on whether you shoot color or not. While desaturating in post allows you to fine-tune contrast with hue-vs-luma curves, more general contrast control can also be accomplished with color filters and colored lighting.

-

Mid-range lens versus Top tier lens?

Michael Rodin replied to Max Field's topic in Lenses & Lens Accessories

It's much harder to produce a decent image on a 3-chip 2/3" imager than on the 35 mm format. FFD is huge in proportion to typical focal lengths (48 mm vs a moderately wide lens of 10 mm), and you need a rather radical retrofocus design to project an image that far from the last surface. Correcting distortion is a real challenge with retrofocus formulae, and pretty much every aspect of engineering these lenses is harder compared to symmetrical designs - thermal, tolerances, etc. These designs are particularly sensitive to tilt and decentering of elements, which means more time spent tuning them at the factory and more effort at the design/optimization phase to find a formula that allows for looser tolerances.

And despite all the difficulties, Digiprimes are among the best performing camera lenses - they're virtually diffraction limited and apochromatic. They're expensive to design, expensive to manufacture, and probably require quite a lot of manual labor to make - I doubt Zeiss made a big profit selling them. Compact primes likely reuse a lot of tooling and share parts with stills lenses, which are cheap if only because of the volumes. The designs are conservative, tolerances are looser, elements are fewer.

-

Digiprime Adapter Bloom Mystery

Michael Rodin replied to Max Field's topic in Lenses & Lens Accessories

In converging rays, a prism will introduce spherical aberration and astigmatism as well. The larger your aperture is, the wider the cone of rays pointing to the pixel and the steeper the angle they converge at. Since spherical grows rapidly with aperture (not linearly, but rather a bit faster than (aperture diameter)^3), visually it kind of unexpectedly kicks in after being very mild in the lower range of apertures.
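To get a feel for how fast this grows, here is a rough sketch that models the prism block as a plane-parallel glass plate sitting on axis in the converging beam, and traces the marginal ray exactly with Snell's law. Everything numeric is an assumption of mine for illustration: the 40 mm glass path, the refractive index of 1.5, and taking the marginal ray angle from sin(u) = 1/(2*N) for f-number N. Astigmatism (an off-axis effect) is not modeled.

```python
import math


def plate_focus_shift(thickness, index, sin_u):
    """Axial focus shift caused by a glass plate for a ray converging at angle u."""
    cos_u = math.sqrt(1.0 - sin_u ** 2)
    return thickness * (1.0 - cos_u / math.sqrt(index ** 2 - sin_u ** 2))


def transverse_spherical(thickness, index, f_number):
    """Blur radius of the marginal ray at the paraxial focus behind the plate."""
    sin_u = 1.0 / (2.0 * f_number)
    # Longitudinal aberration: marginal focus shift minus paraxial focus shift.
    longitudinal = (plate_focus_shift(thickness, index, sin_u)
                    - plate_focus_shift(thickness, index, 0.0))
    tan_u = sin_u / math.sqrt(1.0 - sin_u ** 2)
    return longitudinal * tan_u


if __name__ == "__main__":
    glass_path = 40.0  # mm, hypothetical glass path of the prism block
    n_glass = 1.5      # hypothetical refractive index
    for stop in (8.0, 5.6, 4.0, 2.8, 2.0, 1.4):
        blur_um = transverse_spherical(glass_path, n_glass, stop) * 1000.0
        print(f"f/{stop:<4} marginal blur radius ~ {blur_um:8.2f} um")
```

In this model, opening up by two stops (doubling the aperture diameter) multiplies the blur by a little more than 8, which matches the 'a bit faster than cubic' behaviour described above. A lens designed for a 3-chip camera is corrected with this glass in the path, so the numbers only show the scale of the effect when the glass path differs from what the lens expects.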

How to strike a 5k tungsten fresnel

Michael Rodin replied to Bradley Mowell's topic in Lighting for Film & Video

The lamp will last much longer (a couple of orders of magnitude) if it's powered on with a dimmer. Quartz lamps, no matter the wattage, practically never die from age - it's inrush current and shock/vibration that break them. Abruptly turning a larger light off also causes arcing in the switch - hence there are no mechanical switches on the 20K.

-

is Intellytech good investments?

Michael Rodin replied to John Hovhanes's topic in Lighting for Film & Video

DeSisti fresnels and parlights, LTM Cinepars, LTM Prolight fresnels, open faces by Rolf Bloessl made under the brand of Cine-Mobil rental. With Arri you pay a premium for the 'Arri' letters. There are also riskier variants like "Юпитер МГЛ" (Jupiter MGL). In any case, consult with a gaffer before buying.

-

is Intellytech good investments?

Michael Rodin replied to John Hovhanes's topic in Lighting for Film & Video

Speaking about bi-color - does it actually save time? I don't find myself constantly readjusting CT - at most, I'd change the gel once after looking in the monitor.

Neither do I understand the need for battery power here. LEDs, with few exceptions, don't have the output to be used on EXT. Even on a cloudy day or with an overhead silk you need at least a 200W HMI parlight to do something with contrast - and a 200W can be battery powered too. At 100 lm/W it's just as efficient - and much, much more efficient optically. In an interior, you'll have a couple of 16A circuits in newer buildings and 10A in Soviet ones. And when you don't - there are rentals lining up to give you a truckload of battery-powered lights for a dime. Owning more batteries than you need on day-to-day jobs is a waste of money - they quickly lose their resale value, and heavily used ones are worth nothing. By the way, your budget is just enough for batteries alone, if you feel like going that route.

As to lamps - I can still get HMI200/GS, which has been discontinued for 20 years or so and is not as widespread as the T12 and T7 CFL Kinoflo lamps that are used outside the film industry as well. There are things to avoid - sealed beam and 250W HMI etc - but they're all a rarity nowadays.