Posts: 146
How to shoot extreme close ups

Daniel Klockenkemper replied to kris limbach's topic in Lenses & Lens Accessories

Do you have a point you are trying to make? I address depth of field at several points in my post - at macro distances, depth of field is extremely shallow regardless of sensor size. Focal length equivalence debates are a dead horse at this point, and rehashing them would derail the topic considerably.

How to shoot extreme close ups

Daniel Klockenkemper replied to kris limbach's topic in Lenses & Lens Accessories

Four thirds sensor, hence the tighter angle of view.

How to shoot extreme close ups

Daniel Klockenkemper replied to kris limbach's topic in Lenses & Lens Accessories

I'm surprised more people have not come out of the woodwork to post their eyeball macro shots. Here's one of mine - I think this was about 40 cm working distance with a 90mm macro, equivalent to about 140mm lens on Super 35:

Compared to Daniel J. Fox's example, the 'topology' of the face is less exaggerated because of the increased working distance. "Italian" ECU shots in Leone western films were probably achieved at 250mm and 1.7 meter distance (around the close focus of the Angenieux 10-1 zoom), and the facial topology is much more flattened there - also visually striking, but different.

There is no strictly right or wrong approach, but you should keep in mind both the storytelling and the practical considerations. How much proximity is appropriate for that character, at that moment in the story? Is the moment intense and psychological, or observational? If you get extremely close, what would the exaggeration of the facial structure imply about the character being photographed?

There are plenty of practical considerations if you put the lens extremely close to the actor, no matter if it is by diopter, macro lens, or extension tube:

- Even tiny movements by the actor become giant when magnified to cinema screen size. It helps to give the actor something to lean their head against, or to have them put their head against a wall.
- It can be an uncomfortable, unpleasant experience for some actors to have the camera apparatus, metal rods, etc. so close to their face, especially if their ability to move is limited.
- You will see reflections in the eyeball. If possible, get a larger monitor for video village to check what is in the picture, and be prepared to move equipment, crew, etc. Do your lights blend into the scene if they appear on camera?
- If the lens is extremely close, the depth of field will become razor shallow - which eyelash do you want in focus? (My calculator says 90mm at f/8, 40cm focus distance has 2 mm depth of field; wide open is less than 1 mm. Your calculations may vary based on your chosen circle of confusion.)
- At extremely close distances, the lens itself can literally overshadow the subject, making it a challenge to light.

You, and your focus puller, will have a much easier time if there is room to light for a deeper stop, and slightly more depth of field in general from using a longer focal length at a slightly increased distance. There is no need to be dogmatic about the aperture at macro distances - depth of field will be shallow regardless. If you decide on the "Italian" style and have a more typical focus distance, then your standard photographic approach can apply.
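For anyone who wants to try the depth of field numbers themselves, here is a rough Python sketch. It is not the calculator used for the figures above. It assumes an ideal thin lens, a focus distance measured from the sensor plane, and the common close-up approximation DOF ≈ 2·N·c·(m+1)/m². The function names and the circle of confusion values are hypothetical choices for illustration, so the results will only land in the same neighbourhood as the quoted 2 mm.

```python
import math

def magnification(focal_mm, focus_distance_mm):
    """Thin-lens magnification for a focus distance measured from the sensor plane (assumption)."""
    disc = focus_distance_mm ** 2 - 4.0 * focal_mm * focus_distance_mm
    if disc < 0:
        raise ValueError("a thin lens cannot focus closer than 4x its focal length")
    subject_mm = (focus_distance_mm + math.sqrt(disc)) / 2.0  # lens to subject
    image_mm = focus_distance_mm - subject_mm                 # lens to sensor
    return image_mm / subject_mm

def close_up_dof_mm(focal_mm, f_number, focus_distance_mm, coc_mm):
    """Approximate total depth of field at close range: 2*N*c*(m+1)/m^2."""
    m = magnification(focal_mm, focus_distance_mm)
    return 2.0 * f_number * coc_mm * (m + 1.0) / (m * m)

# Hypothetical circle of confusion values in mm; pick whatever suits your format.
for coc in (0.015, 0.020, 0.025):
    dof = close_up_dof_mm(90, 8, 400, coc)
    print(f"CoC {coc:.3f} mm -> about {dof:.1f} mm total depth of field")
```

Changing the circle of confusion scales the result directly, which is why two calculators rarely agree to the millimetre.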

I caught a screening a couple nights ago. The lighting was very well done as others have noted, but I also felt that the camera movements were exceptionally well conceived and executed - often seamlessly changing from motivated to unmotivated (and sometimes back to motivated, and even unmotivated again). Can you comment about how these were planned and realized? Also, I noticed that in shallow-focus situations your focus puller went for the subject's far eye most of the time. Is this a general/personal preference, or a particular decision for this project?

-

For sale is the FX9 kit below. Great condition and low operating hours. I shifted career paths after purchase, so it has not seen much use.

- Sony FX9 camera (with viewfinder, loupe, hand grip, mic mount, original top handle)
- Arri VCT shoulder base plate + LAS-1 lens adapter support
- Panasonic SHAN-TM700 VCT quick release tripod plate
- Wooden Camera top plate + NATO top handle
- Wooden Camera V-mount battery slide
- Smallrig flat rear insert plate
- 2 Hawk-Woods BP-98UX batteries with D-tap
- 1 Sony BP-U60 battery, 1 Sony BP-U35 battery
- Dolgin TC-200EX dual BPU battery charger
- Sony AC power adapter
- 3 256GB + 1 64GB Sony XQD cards
- Sony MRW-G1 card reader

Price 9000€ plus VAT. Located in Helsinki, Finland. Shipping at buyer's expense, local pickup preferred.

Birds not included. I am happy to send more pictures on request, featuring the same birds so buyers can be sure the pictures are of the same package.

-

Panning around the Entrance Pupil (Nodal Point)

Daniel Klockenkemper replied to silvan schnelli's topic in General Discussion

In practice I doubt it's common at all. The vast majority of camera support devices are balanced relative to the camera's center of gravity, which puts the nodal point of the lens forward of the pan axis. Renting specialty equipment to balance the camera around the nodal point is not only an additional monetary cost, it also requires extra time to configure and re-configure when there are lens changes, etc. It might create significant extra difficulties for certain camera operating styles (e.g. handheld). When not strictly needed for VFX or technical purposes, I doubt many productions would feel the costs are justified.

To what degree nodal pan and tilt movements contribute to "naturalism" in storytelling is a separate and more subjective topic. Certainly, human eyes rotate very closely to their nodal points; however, when people turn their entire heads the rotation is centered around the neck/spine, which puts the nodal points of the eyes far forward, similar to common camera supports. I also feel that most of the time, camera movements are intentionally performed in order to change the perspective, so a minor offset in parallax would be seen as a benefit rather than a drawback, if it is even considered at all.

- 2 replies

Tagged with: entrance pupil, movement (and 1 more)
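To put a rough number on the "minor offset in parallax" mentioned in the reply above, the sketch below estimates how far the entrance pupil travels when the pan axis sits behind it. Everything here is an illustrative assumption: the 20 cm offset, the pan angle, the object distances and the function names are hypothetical, and the second function is only a small-angle estimate that treats all of the travel as sideways movement.

```python
import math

def pupil_travel_m(offset_m, pan_deg):
    """Straight-line distance the entrance pupil moves when panning pan_deg
    about an axis located offset_m behind it (chord of the arc)."""
    return 2.0 * offset_m * math.sin(math.radians(pan_deg) / 2.0)

def relative_shift_deg(travel_m, near_m, far_m):
    """Rough small-angle estimate of how far a near object appears to slide
    against a far one for a given sideways camera travel."""
    return math.degrees(travel_m * (1.0 / near_m - 1.0 / far_m))

offset_m = 0.20  # hypothetical: entrance pupil 20 cm forward of the pan axis
travel = pupil_travel_m(offset_m, 30)
shift = relative_shift_deg(travel, near_m=1.5, far_m=10.0)
print(f"pupil travel: {travel * 100:.1f} cm, relative shift: about {shift:.1f} degrees")
```

With a zero offset the travel is zero, which is the nodal case; with distant subjects the shift shrinks quickly, which fits the point that it often goes unnoticed.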

-

The simple answer is that they don't. For the Honda EU6500 or 7000 generators, the model number represents the possible momentary peak load. The maximum sustained load is 5500W/45.8A according to the spec sheet, but you should still allocate some headroom for good measure. The modified generators I've seen have 50A circuit breakers on them. 60A is just the next size up for the cable/connector, which does help to ensure that the cable is less likely to be a limiting factor.
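The watts-to-amps arithmetic behind those figures is easy to check. This is a minimal sketch that assumes a 120 V output (an assumption, though it matches the 45.8A figure) and uses a hypothetical 80% headroom factor; the post only says to leave "some" headroom, so adjust that to taste.

```python
SUSTAINED_WATTS = 5500.0  # maximum sustained load quoted above
NOMINAL_VOLTS = 120.0     # assumption: 120 V output
HEADROOM = 0.8            # hypothetical safety factor, not from the spec sheet

def amps(watts, volts=NOMINAL_VOLTS):
    """Current drawn by a load of the given wattage at the given voltage."""
    return watts / volts

def usable_watts(sustained_watts, headroom=HEADROOM):
    """Load budget after leaving some headroom below the sustained rating."""
    return sustained_watts * headroom

budget = usable_watts(SUSTAINED_WATTS)
print(f"sustained: {amps(SUSTAINED_WATTS):.1f} A")
print(f"with headroom: {budget:.0f} W, {amps(budget):.1f} A")
```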

-

Light to simulate hard sunlight

Daniel Klockenkemper replied to Sairaj Batale's topic in Lighting for Film & Video

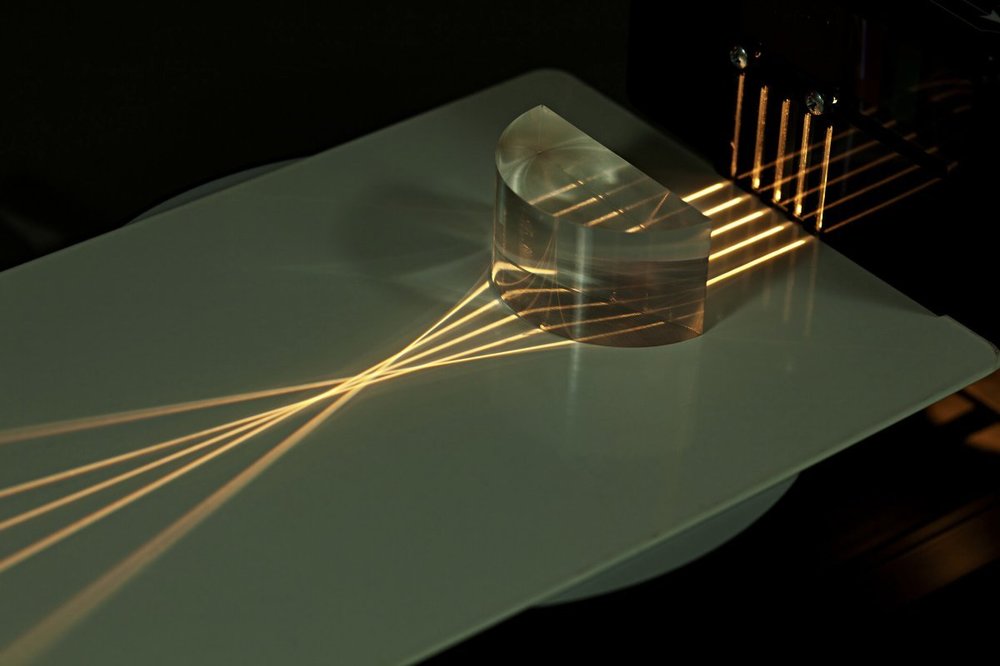

This is how it's explained in the Set Lighting Technician's Handbook section on fresnels: A fresnel lens is an approximation of a single/simple plano-convex lens, and it's a bit easier to find ray diagrams of those on the internet. I attached a picture I found, from Firebird Optics' "Introduction to the Plano-Convex Lens" (https://www.firebirdoptics.com/blog/intro-to-the-plano-convex-lens), which shows how the light rays of a PCX lens don't converge evenly.

PL Lens Recommendation Sought

Daniel Klockenkemper replied to Arnold Finkelstein's topic in Lenses & Lens Accessories

As rehoused wide stills zoom lenses go, I think it'd be hard to find better mechanical quality than the Sigma or Tokina (without going to a bespoke rehousing service like TLS). If either of those lenses meets your needs, I wouldn't necessarily hold their stills origins against them. There aren't too many alternatives for sub-$10k wide cinema zooms... Duclos Lenses sells low-budget wide-angle zooms from Zunow and DZO, but I tend to think that you get what you pay for.

Have you considered the Canon CN-E 15.5-47mm? On the used market they'll still stretch your budget a fair bit past the Sigma, but it's a proper cinema lens of excellent build and optical quality, and it might be the best overall value in that category. Its range would also make it useful as more than just a wide variable prime like the Tokina or Sigma tend to be.

cine lens crop factor

Daniel Klockenkemper replied to Deniz Zagra's topic in Lenses & Lens Accessories

This topic again...

Lenses do not have crop factors. Period. The focal length of a lens is always what it says it is. It does not matter if the lens does or does not have the ability to cover a particular sensor or piece of film completely. A lens cannot become a different lens when placed in front of a different format; that would violate the laws of physics.

"Crop factor" only relates to the size of one format compared to another format. The ratio between two formats is always the same, even with no lens involved.

The combination: format ⋆ focal length ⇒ angle of view. Which means:

- If you keep the format the same and change the lens, you get a different angle of view.
- If you keep the lens the same and change the format, you get a different angle of view.

So to get around to your question...

An S35-sensor camera might be paired with a typical set of focal lengths, such as: 16mm, 24mm, 35mm, 50mm, 85mm.

To choose lenses for a full frame camera that have the same angle of view as the above set, you'd multiply by the 1.4x crop factor - the difference between the two formats - which would tell you to pick these focal lengths: 22.4mm, 33.6mm, 49mm, 70mm, 119mm. But in practice this would probably get rounded to more common numbers: 24mm, 35mm, 50mm, 75mm (or 85mm), 135mm.

There are quite a few "Super 35" lenses that can cover a full frame sensor. And an excellent set of "full frame" lenses will work just fine on Super 35 formats, too. So start by determining what angles of view are needed for the particular story or style, then choose a format, and pick the lenses from there!
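The format-plus-focal-length relationship above can be sketched in a few lines of Python. The 1.4x ratio and the lens set come from the post; the 36 mm full frame width, the derived Super 35 width and the function names are assumptions for illustration, and real sensor dimensions vary by camera.

```python
import math

S35_TO_FF = 1.4                          # format ratio used in the post
FF_WIDTH_MM = 36.0                       # assumed full frame sensor width
S35_WIDTH_MM = FF_WIDTH_MM / S35_TO_FF   # assumed Super 35 width derived from the ratio

def horizontal_angle_of_view(sensor_width_mm, focal_length_mm):
    """Horizontal angle of view in degrees for a lens focused at infinity."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))

def matching_focal_lengths(focal_lengths_mm, format_ratio):
    """Focal lengths that give the same angle of view on the larger format."""
    return [round(f * format_ratio, 1) for f in focal_lengths_mm]

s35_set = [16, 24, 35, 50, 85]
ff_set = matching_focal_lengths(s35_set, S35_TO_FF)

for s35_f, ff_f in zip(s35_set, ff_set):
    aov = horizontal_angle_of_view(S35_WIDTH_MM, s35_f)
    print(f"{s35_f}mm on S35 ~ {ff_f}mm on full frame ({aov:.1f} degrees horizontal)")
```

The lens focal lengths never change in this sketch; only the sensor width passed to the angle of view function does.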

Hi Edith, I did a fair amount of operating with the Moviecam Compact, which is quite similar to the Arricam ST, and have used the LT a bit as well. I'd agree with your assessment - neither is a mirrorless digital camera, so in the grand scheme of things they're not dissimilar. All film cameras are heavier on the shoulder, so do weight training, wear a back brace, all the usual precautions for the physical aspect. Both LT and ST will require a proper high-end tripod and head, so there's no practical difference there either.

Unless it was absolutely necessary to shave every ounce for some reason, I'd probably prefer to have the ST. It's entirely possible to have a lighter ST build with a prime lens and 400ft magazine. Productions that demand the LT often end up building it up with a 1000ft magazine, studio zoom, etc. anyway and it loses whatever advantage it had over the ST. I'd rather have the flexibility to mount the magazine on top and a little more room on the body, both inside and outside. And the ST's increased mass should help it run a little more quietly, too!

- 5 replies

Tagged with: arricam st, arricamlt (and 1 more)

-

Name the flare

Daniel Klockenkemper replied to Nikolas Moldenhauer's topic in Lenses & Lens Accessories

On a film camera (like your example) this is a reflection off of the side of the film gate, which is usually a shiny metal. I've simply heard it called gate flare, but there could be some more official name that I don't know of. Interestingly, I've seen similar flares on mirrorless digital cameras from low-quality lens adapters that lack proper internal light traps or flocking. If there's not a broader name for it, perhaps we need one? Mechanical flaring, perhaps?

Marking exposed film cans

Daniel Klockenkemper replied to Daniel D. Teoli Jr.'s topic in Film Stocks & Processing

I'd suppose that "EM. NO." is short for emulsion number.

Name of this connector?

Daniel Klockenkemper replied to Raymond Zrike's topic in Camera Operating & Gear

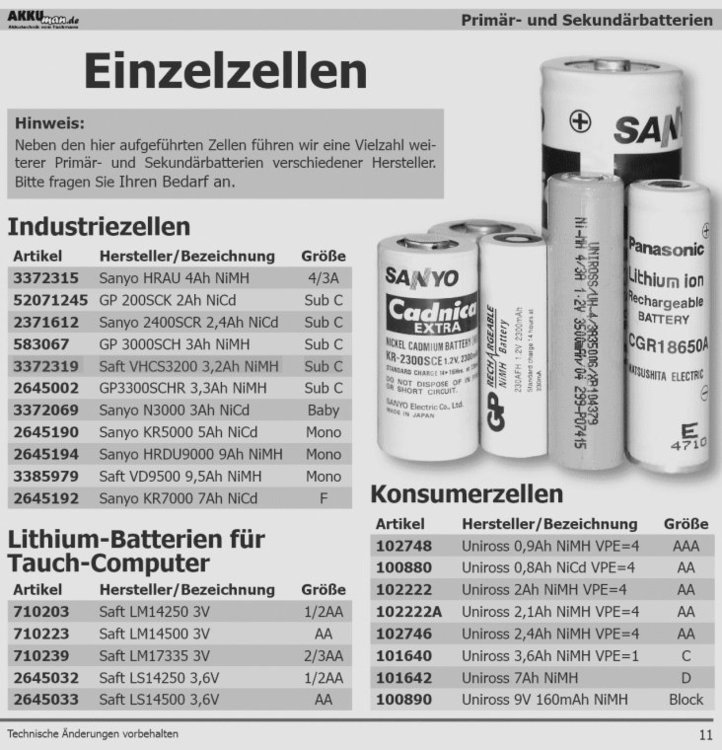

Through some searching, I found an old Akkuman catalog; the cells are: 3372319 Saft VHCS3200 3,2Ah NiMH Sub C. Normally I would say that cells above 3000 mAh should have no trouble running the camera at high speed. However, given that the pack's manufacture date is June 2005, the cells' capacity is likely much reduced at this point. I would consider having the pack re-celled. Sub-C NiMH cells can be found with capacities up to 5000 mAh, but higher capacity cells often also have more pronounced self discharge.

When I owned an ACL, I never needed more than 12V - the motor could quickly reach 75fps with high-amp NiMH cells. I would advise against using a V-mount or lithium chemistry battery without a voltage regulator.

The circuit board image you posted does look similar to a voltage regulator, with the small screw on the blue component probably being for voltage adjustment. "VCLX" is a model of block battery made by Anton Bauer that has a 14.4V 4-pin XLR output, which makes the regulator theory very likely. There are D-tap to 4-pin XLR cables on the market that could make it possible to use a V-mount with the regulated cable. You should test the cable's output with a multimeter before connecting it to the camera. I would highly recommend having a professional, or a friend who is savvy with electronics, check things out for you!

Looking for Cinefade (Vari ND)

Daniel Klockenkemper replied to Shi-Hyoung Jeon's topic in Cine Marketplace

The Sony cameras' eND do take a small amount of time to adjust, even at the fastest setting. I think if the iris ramp were done over the course of about 3 seconds (or longer), the eND would be able to keep up and the effect would be seamless. If the desired effect is for the depth of field to change as fast as possible, a motorized setup would likely be faster - you'd only be limited by the speed of the motors. Iris rings and dual-pola variable NDs don't have to turn very far to make exposure changes. I've used the Sony eND in a few scenarios when the exposure changes dramatically, e.g. a garage door opens and sunlight pours in, or the lights come on unexpectedly in a dark room. In the former situation, the change was gradual so the effect was seamless. In the latter, since the lights coming on was a surprise to the characters, it made narrative sense that it would take a second for their eyes to adjust, so I lived with the very brief overexposure; the auto adjustment is smooth and otherwise doesn't call attention to itself.