Posts: 525

Everything posted by AJ Young


Pitching and Pre-Production: Tools and Methods

AJ Young replied to Trent Watts's topic in Business Practices & Producing

I use a Google Doc all the time to convey a look to a director. (I used to use Word .doc files exclusively, but switched to Google Docs because they're so easy to access.) PDFs are an excellent way to share a clean version of a look book with a director/producer, and the hyperlinks in the PDF should still work. Honestly, if you're still pitching yourself to the production, I wouldn't dive too deeply into specifics beyond a simple pitch deck/book/presentation. In my experience, they're judging you more on your prior work and recommendations than on what you can visually bring to the project. I'll say this: if you've got the director on board with bringing you on, then you'll most likely get the job. Once you're actually hired, you can really dive deep into the look book. It can be as specific as scene by scene or location by location, or just an overall look per act/sequence. It's always different for each film and for how each director works. I can email you some examples of the look books I've made! Drop me a line: AJYoung.DP@gmail.com

Where to find high-quality commercial stills

AJ Young replied to Bradley Credit's topic in General Discussion

I'd say sign up and see what happens! Beta testing is testing.

Where to find high-quality commercial stills

AJ Young replied to Bradley Credit's topic in General Discussion

Hmm, that's a good question. I don't know of any website that has a collection of those images. Try looking at ShotDeck: https://shotdeck.com/

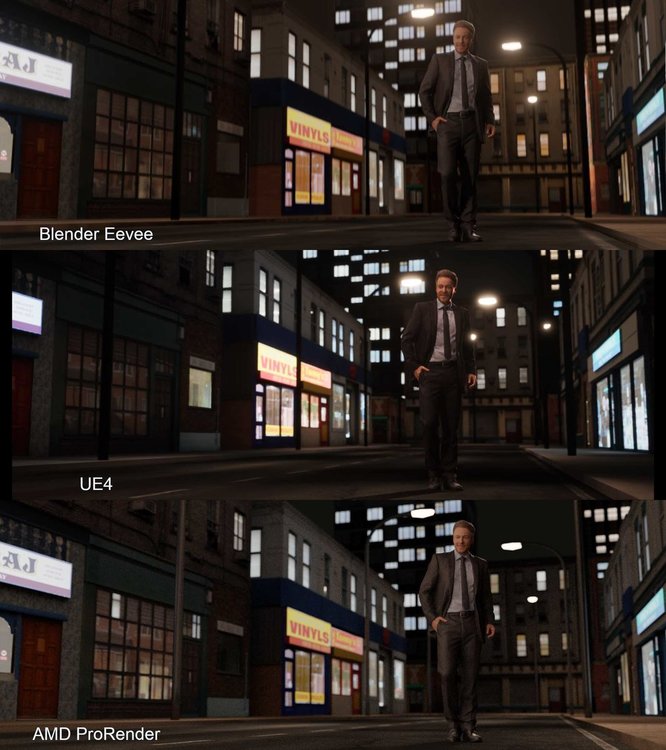

AMD ProRender is a physically based renderer that only uses ray tracing! It doesn't render in real time, but from what I've learned, neither do V-Ray, Arnold, or RenderMan.


Correction: After further research, I discovered that Eevee can only run on the GPU (according to Blender's documentation). Eevee is in fact running on my laptop's GPU, but on the display GPU (Intel UHD Graphics 630 1.5GB). My MacBook Pro has two GPUs: one for processing graphics and one for driving the display. Regardless, it's impressive.


Correct way to mount a heavy lens

AJ Young replied to Dominik Bauch's topic in Camera Operating & Gear

I've also seen people mush the lens support up too much!

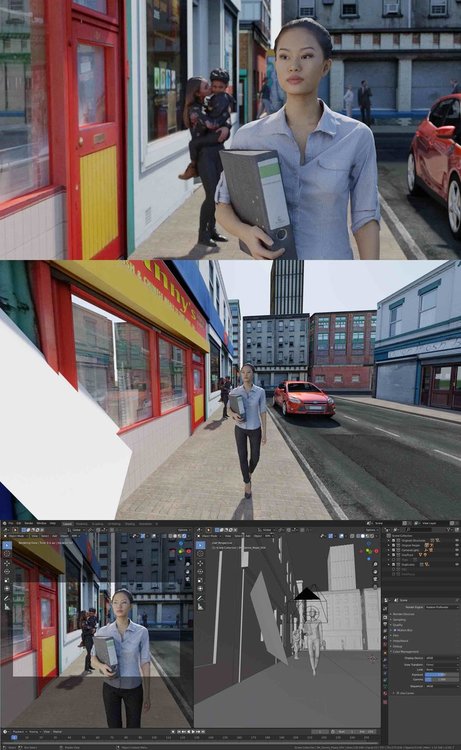

Since the industry shutdown halted all of my live-action work, I decided to learn a new skill set and dove deep into the world of virtual production. I said it in college and still believe it today: narrative film will increasingly move toward virtual production. Big-budget films already use a ton of CG environments, and The Mandalorian showed us how incredible real-time rendering on an LED wall can be.

As a primer, I found that this doc from Epic Games gives a fantastic intro to virtual production: https://cdn2.unrealengine.com/Unreal+Engine%2Fvpfieldguide%2FVP-Field-Guide-V1.2.02-5d28ccec9909ff626e42c619bcbe8ed2bf83138d.pdf

Matt Workman has been documenting his journey with indie virtual production on YouTube, and it's becoming clear how easy it is for even the smallest budgets to use real-time rendering. I wouldn't be surprised to see sound stages or studios open up that offer LED volumes and pre-made virtual sets at an affordable price, even for micro-budget features.

Here's what I've done so far. Attached are renders from three engines: Blender's Eevee, Unreal Engine 4, and AMD ProRender. (Please excuse the heavy JPG compression; shoot me a DM for an uncompressed version.) I made the building meshes from scratch using textures from OpenGameArt.org and following Ian Hubert's lazy building tutorials. The man is a 3D photoscan from Render People. All of these assets were free. Lighting-wise, I was more focused on getting a realistic image than a stylized one. Personally, I think the scene is too bright and the emission materials on the buildings need more nuance.

Blender's Eevee: Honestly, I'm beyond impressed with Eevee. It's incredibly fast. For those who don't know, Eevee is Blender's real-time render engine. It's what Blender uses to render the viewport, but it's also designed to be a rendering engine in its own right. Most of Eevee's shaders and lights transfer seamlessly to Cycles, Blender's PBR engine.

UE4: After building and texturing the meshes in Blender, I imported them into UE4 via .fbx. There was a bit of a learning curve and some manual adjustment of the materials, but ultimately I was able to rebuild the scene. The only hitch was the street lamps. In Blender, I replicated the street lamps using an array modifier, which duplicated both the meshes and the lights. The lights from the array modifier don't carry over into UE4 via .fbx, so I had to import a single street lamp and build a blueprint in UE4 that combined the mesh and the light. In the attached image, the street lamps aren't in the same place in UE4 because I was approximating. My next step is to find a streamlined way to import/export meshes, materials, cameras, and lights between Blender and UE4. I believe some Python is in order! As expected, UE4 looks great!

AMD ProRender: Blender's PBR engine, Cycles, is great. However, its GPU rendering only works with CUDA and OpenCL. I currently only have a late 2019 16" MacBook Pro, whose GPU is the AMD Radeon Pro 5500M 8GB. Newer Apple computers only use the Metal API, which Blender currently doesn't support. Luckily, AMD has its own render engine called ProRender. Needless to say, the results are great and incredibly accurate. This engine isn't real time: render time for the AMD shot was 9 minutes. This render is definitely too bright and needs refinement in the details everywhere.

My final thoughts: Even though this is a group for Unreal, I'm astonished by Eevee, particularly by how incredibly fast it is while running only on my CPU. (Again, Blender has no support for Apple Metal, so it defaults to rendering on the CPU on my laptop.) The next iteration of Blender will use OpenXR. According to the Blender Foundation, they'll gradually integrate VR functionality into Blender, and version 2.83 will allow viewing scenes in VR. With that in mind, I'm definitely going to experiment with virtual production inside Blender.

As for Metal support, I believe Blender will be moving from OpenCL/OpenGL to Vulkan in the near future. From what I've found, it's easy to translate from Vulkan to Metal. (This part is a bit above my head as a DP, so I'll just have to wait or use a Windows machine.) Does anyone have any useful guides for a streamlined process for moving things between Blender and UE4? I come from live action and love how easy it is to bring footage from Premiere/FCPX to DaVinci and back via XML. Is there something similar, or is .fbx the only way?
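Because the array modifier's duplicated lights don't survive the .fbx export, one workaround is to compute where each copy sits and place the UE4 street-lamp blueprint at exactly those spots instead of approximating. Below is a minimal Python sketch under stated assumptions: the array uses a Constant Offset (not Blender's default Relative Offset), the lamp object is unrotated and unscaled, and both programs use their default units (Blender 1 unit = 1 m, UE4 1 unit = 1 cm). The function names, the `flip_y` switch, and the 8 m spacing are hypothetical, for illustration only.

```python
from dataclasses import dataclass

# Assumption: default units on both sides (Blender meters -> UE4 centimeters).
BLENDER_TO_UE4_SCALE = 100.0


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float


def array_positions(base: Vec3, offset: Vec3, count: int) -> list[Vec3]:
    """Position of every copy made by an array modifier with a constant offset.

    Assumes the lamp object is unrotated and unscaled, so the modifier's
    local offset equals a world-space offset.
    """
    if count < 1:
        raise ValueError("array count must be at least 1")
    return [
        Vec3(base.x + i * offset.x, base.y + i * offset.y, base.z + i * offset.z)
        for i in range(count)
    ]


def to_ue4(p: Vec3, flip_y: bool = False) -> Vec3:
    """Convert a Blender position (meters) to UE4 units (centimeters).

    flip_y is a hypothetical switch: UE4 uses a left-handed coordinate
    system, so depending on your FBX export axis settings you may need to
    negate Y. Check it against one lamp imported by hand.
    """
    s = BLENDER_TO_UE4_SCALE
    y = -p.y if flip_y else p.y
    return Vec3(p.x * s, y * s, p.z * s)


if __name__ == "__main__":
    # Hypothetical street: 4 lamps spaced 8 m apart along Y.
    lamps = array_positions(Vec3(0.0, 0.0, 0.0), Vec3(0.0, 8.0, 0.0), 4)
    for lamp in lamps:
        print(to_ue4(lamp))
```

The printed UE4 positions could then be typed into the blueprint instances, or fed to whatever import script you end up writing. It's a sketch of the bookkeeping only, not a replacement for a proper Blender/UE4 pipeline.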


I think it produces some good images, at least from what I've seen online. My only concern is servicing the camera, as Matt Allard pointed out in the Newsshooter article. Convincing a producer to use it could be a hurdle, but not a big one.


https://deadline.com/2020/06/hollywood-reopening-white-paper-unions-studios-producers-read-it-here-1202948491/ Per Deadline, the full report from The Alliance of Motion Picture and Television Producers (AMPTP) can be found here: https://pmcdeadline2.files.wordpress.com/2020/06/iwlmsc-task-force-white-paper-6-1-20.pdf This report was put together by the AMPTP and the major industry unions, including SAG, IATSE, and the DGA.


Source 4 Ellipsoidal on 1200w HMI?

AJ Young replied to David Grauberger's topic in Lighting for Film & Video

It's incredibly bright! I used one for a beauty commercial where we needed a hard key light from above camera for 240fps. I believe it took 45-60 minutes for the unit to cool down for disassembly.

I haven't yet, mostly because it doesn't support ray tracing on a Mac (which is the only computer I have).


Of course, have both! I wouldn't degrade the image too much for the common denominator monitor; I'd just make sure my look holds up on a terrible TV.


Eyes, meter, and waveform!


Wild pitch, but I also believe you should have a "common denominator" monitor: a simple TV from an electronics store, set to factory settings. Obviously you should color grade on proper grading monitors (like the ones everyone has mentioned), but it's also good practice to see what your grade looks like on the mediums/formats your audience will actually watch your film on. This avoids the problem Game of Thrones had with the major night battle in its final season (news article). We'd like to trust that our audience will view our project in the best possible conditions, but we'd be lying to ourselves. Most people leave their TVs at factory settings, watch your movie with sunlight blasting through the windows, or consume the film on their iPhone while riding the subway. A "common denominator" monitor gives you perspective on how your project will look under the crappiest settings, which you can then account for.


Bouncing or Shooting through unbleached muslin:

AJ Young replied to omar robles's topic in Lighting for Film & Video

@Phil Rhodes: That looks cool. Which brand is it? @omar robles: In my experience, the thicker the diffusion, the more it bounces rather than transmits. Like Stuart said, it's not impossible to shoot through something as thick as muslin, but it requires a lot more punch because the material itself is either absorbing or bouncing the light. You can even use foam core as diffusion if you want! I experimented with it in college by shooting a Maxi-Brute through a 4x4 piece of foam core (the kind used for school presentations). The thicker the diffusion, the more it warms the light; I had to use a cocktail of CTB and Minus Green gels to get it back to tungsten. Thankfully the school had a color meter I could use! Would I do it outside of an experiment? Most likely not. I'd just bounce a lower-wattage light off the foam core.
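The warming from thick diffusion can be put in numbers with mireds (1,000,000 divided by the color temperature in kelvin), the unit blue and amber correction gels are usually rated in. Below is a minimal Python sketch, assuming you've read the warmed temperature off a color meter. The 2900K reading is hypothetical, not the original measurement, and the function names are illustrative.

```python
def mired(kelvin: float) -> float:
    """Convert a color temperature in kelvin to mireds (1e6 / K)."""
    if kelvin <= 0:
        raise ValueError("color temperature must be positive")
    return 1_000_000 / kelvin


def mired_shift(measured_k: float, target_k: float) -> float:
    """Mired shift a gel must add to move measured_k to target_k.

    Negative values call for a blue (cooling) gel such as CTB;
    positive values call for an amber (warming) gel such as CTO.
    """
    return mired(target_k) - mired(measured_k)


if __name__ == "__main__":
    # Hypothetical meter reading: foam core warmed a tungsten source to 2900K.
    shift = mired_shift(2900, 3200)
    print(f"Required shift: {shift:.1f} mired")
```

This prints `Required shift: -32.3 mired`, meaning a light blue correction. Mireds only cover the blue/amber axis. The Minus Green part of the cocktail corrects the separate green/magenta axis, which this sketch doesn't model.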

I wouldn't be surprised if they used negative fill, actually. I did it on this short with a similar-looking shot:


Like @Gregory Irwin, I'm not being sarcastic: the Robocup is ubiquitous in the camera department. https://www.amazon.com/ROBOCUP-Colors-Fishing-Wheelchair-Microphone/dp/B0065MYZGW/ref=sr_1_1?dchild=1&keywords=robocup&qid=1590510334&sr=8-1 Seriously, you'll see it on every pulling station. Hell, I use it at home on my desk!


What's your budget? Good matteboxes and filters aren't cheap, but they'll last at least a decade if you treat them right. The ARRI LMB-15 and LMB-5 are among my favorites: lightweight and easy to adapt to your specific lens diameter. It can be configured as a 2-stage or 3-stage mattebox, and its modular design makes changing the clamp-on diameter or the number of stages easy, even on set. https://www.bhphotovideo.com/c/product/1341053-REG/arri_kk_0015176_lmb_4x5_15mm_lightweight.html I've been quite impressed by Bright Tangerine's Misfit matteboxes, and I've read that the Misfit Kick mattebox is stellar (LINK). It's also the most affordable of these matteboxes, especially compared to ARRI, OConnor, etc. https://www.brighttangerine.com/ Filter-wise, avoid Formatt and Firecrest. They've got a green cast to them and I'm not happy with the results. Schneider and Tiffen are fantastic.


I recently saw a photo on my LinkedIn feed of a crew returning to work. I applaud the crew for wearing PPE, but the boom op had his nose uncovered, breaching protocol and putting the cast/crew at risk. I'm not sure where this production is, but I'd assume the US, given that it showed up on my feed. This photo illustrates how difficult it will be to return to work as "normal," because one innocent mistake jeopardizes everyone's safety.


My brain hurts trying to figure this out

AJ Young replied to Matthew J. Walker's topic in Visual Effects Cinematography

My guess: tungsten film, a CTO-gelled key light, shot at blue hour. The city lights would still read slightly warm even on tungsten film, because not every light in a city is exactly 3200K.

Green Screen Shoot in P-51 Mustang Cockpit

AJ Young replied to Grant Hobbs's topic in Visual Effects Cinematography

Incredible! Nicely done!

How do you store Grip & Rigging Equipment?

AJ Young replied to Sam Bignell's topic in Grip & Rigging

I mean, milk crates are pretty standard. Maybe ask your local grocery store? Sometimes they're just throwing them away.

Thank you! I chose PHP because I wanted one file for the header/site menu, one file for the footer, and one file for the actual content. PHP then stitches those three files together and serves them as a single page. I found it easier to edit one header/site menu file and have the change apply across the whole website instantly, rather than editing each individual .html file. Here's what the PHP is doing:

```php
<?php
// Pull in the shared header/site menu from the web root.
require_once $_SERVER['DOCUMENT_ROOT'] . '/header.php';
?>
<!--Begin Content Section-->
<!-- Page-specific HTML goes here -->
<!--End Content Section-->
<?php
// Pull in the shared footer from the web root.
require_once $_SERVER['DOCUMENT_ROOT'] . '/footer.php';
?>
```