-

Posts

444 -

Everything posted by Mei Lewis

-

Maybe not how it was done in these examples, but it's easy to do in post, just use the lens distortion correction tool in the wrong direction.

-

Best White cyc Lighting strategies?

Mei Lewis replied to Dominik Bauch's topic in Lighting for Film & Video

Best option is to use a studio with a built-in cyc/infinity backdrop. If not that, then David's suggestion of a white paper roll is easily the best option, especially if you need a full-length shot. Lighting any type of sheet, either from the front or back, and getting it even, with no seams, but not overexposed to the point of causing contrast-reducing flare, is very tricky. The smooth, flat, matt surface of paper is much easier to light evenly.

-

I really liked this film too.

-

Why Are Roger Deakin's Waveforms Better than Mine?

Mei Lewis replied to Richard Swearinger's topic in General Discussion

I really think there's something in this. I don't think cinematographers are using waveforms to judge composition, or even very much to judge lighting, other than exposure levels. But by 'measuring' the images with a waveform monitor, some of their structure makes itself apparent. (That's true whether we're looking at images from the camera or final shots as they appear in the film.) The waveform is showing something about the geometry of an image, and often images that people like from a cinematography standpoint are very clear and simple: the viewer knows exactly how to interpret what's going on. Look at this list: https://www.buzzfeed.com/danieldalton/there-will-be-scrolling?utm_term=.umdYd0gv5#.drmeVqQmp (I'm not saying I agree with the list, but they are the sort of shots many people like). Most of them are very simple and could be sketched without too many pencil lines.

-

Why are you showing "Low-con encoded video", "<<ITU709 video>>" and "ITU709 display" as having the same range? Aren't "Low-con encoded video" and "<<ITU709 video>>" just data, which doesn't have a range? (it's inside a computer).

-

I think you _can_ talk about the number of stops a display has, it's possible to measure it as the difference between its black and white levels using a light meter. But this range doesn't really have much to do with the range of the camera or the original scene, and I think that's where Jan is having difficulty.
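As a rough sketch of that measurement: each stop is a doubling of light, so the number of stops is the base-2 log of the ratio between the white and black readings. The function name and the meter readings below are hypothetical, chosen only for illustration.

```python
import math

def display_stops(white_luminance, black_luminance):
    """Display range in stops: log2 of the white/black luminance ratio."""
    if white_luminance <= 0 or black_luminance <= 0:
        raise ValueError("luminance readings must be positive")
    return math.log2(white_luminance / black_luminance)

# Hypothetical light meter readings in cd/m^2 (a 64:1 ratio)
print(display_stops(128.0, 2.0))
```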

-

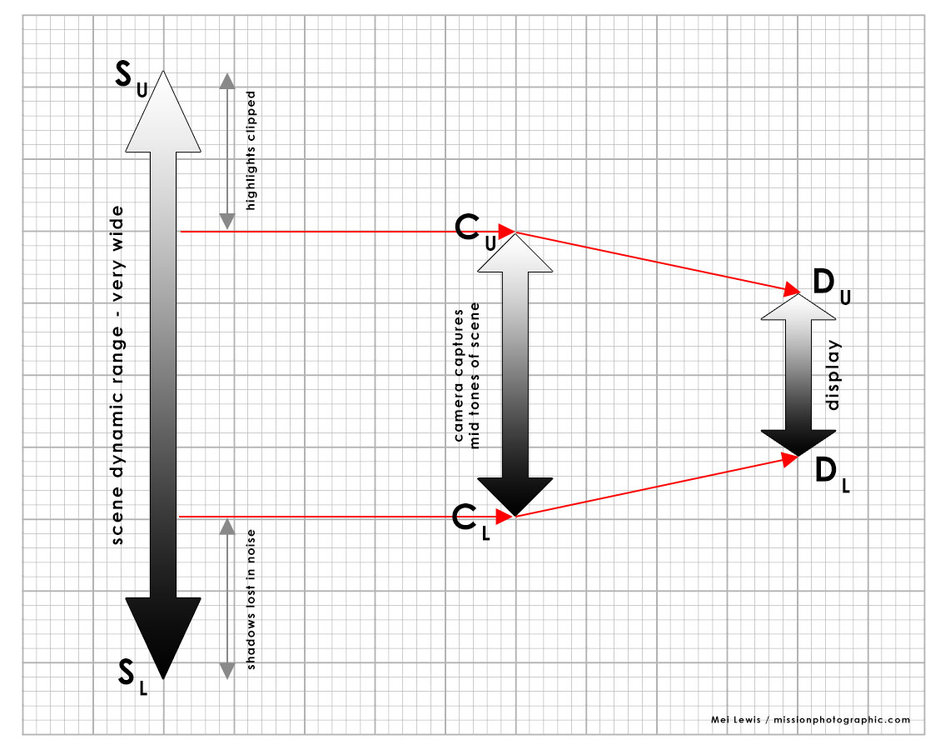

This is my current understanding of what's going on here. When an image is displayed, white in the image data is usually mapped to white on the display, and black to black, so CU=DU and CL=DL, but that doesn't have to be the case. If CU is mapped to some value higher than DU then clipping of whites happens. If CL is mapped to somewhere lower than DL then black clipping happens.
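A minimal sketch of that mapping, reading CU/CL as the upper and lower ends of the image data and DU/DL as the display's white and black levels. The linear mapping, the function name and the numbers are all assumptions for illustration; a real display pipeline applies a transfer curve rather than a straight line.

```python
def map_to_display(value, cl, cu, target_low, target_high, dl, du):
    """Map an image value in [cl, cu] linearly onto [target_low, target_high],
    then clip the result to what the display can show, [dl, du]."""
    t = (value - cl) / (cu - cl)
    mapped = target_low + t * (target_high - target_low)
    return min(max(mapped, dl), du)

# Image data runs 0..1, display runs 0..100 (hypothetical units).
# Usual case: CL -> DL and CU -> DU, nothing clips.
print(map_to_display(0.9, 0, 1, 0, 100, 0, 100))
# CU mapped above DU: bright values clip to display white.
print(map_to_display(0.9, 0, 1, 0, 125, 0, 100))
# CL mapped below DL: dark values clip to display black.
print(map_to_display(0.1, 0, 1, -25, 100, 0, 100))
```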

-

I agree with John, I definitely recommend shooting color and doing your own conversion. It gives you much more information to work with, and you'll probably find you'll want to do a slightly different conversion for each shot. And by shooting color and converting yourself, you can choose if and how much different colors contrast with each other when converted to black and white.
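To illustrate the point about choosing how colors contrast after conversion, here is a small sketch using a weighted channel mix. The weights and pixel values are made up for the example and are not any particular software's conversion.

```python
def to_gray(rgb, weights):
    """Convert one RGB pixel to a gray level using a weighted channel mix."""
    r, g, b = rgb
    wr, wg, wb = weights
    return (r * wr + g * wg + b * wb) / (wr + wg + wb)

red = (200, 30, 30)    # hypothetical red object
green = (30, 200, 30)  # hypothetical green object

# Equal weights: both objects come out the same gray, so they don't separate.
print(to_gray(red, (1, 1, 1)), to_gray(green, (1, 1, 1)))
# Red-heavy mix (like a red filter): red goes light, green goes dark.
print(to_gray(red, (8, 1, 1)), to_gray(green, (8, 1, 1)))
```

A camera's in-built black and white mode bakes in one such mix, whereas converting from color lets you pick a different one per shot.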

-

Yes, I can see a problem, motion is not always smooth. It's most obvious on wide, panning shots. I think Phil's explanation of randomly dropped frames covers it. If I export to a USB drive and play back on a TV there's no issue. I guess that's because the TV is designed for 25fps playback (and probably 30 and maybe 24 too).

-

I don't think that's quite right. There are 3 places along the process of image capture and display whose dynamic range we primarily have to worry about:

1) The scene we're filming - this could have very many stops of range
2) The camera (digital sensor or film) that is recording the scene - 9 or 15 stops, say
3) The output image - 6 stops?

Going from (1) to (2), anything outside the camera's dynamic range is lost. When the transition is made between (2) and (3), no tonal range of the image is necessarily lost (unless highlights or shadows are clipped for creative reasons or by mistake), but the range is scaled down to fit into the available dynamic range of the display.

So a 9 stop camera would record 9 stops of the real scene, and those 9 stops would be shown as 6 stops on a typical display. A 15 stop camera would record 15 stops of the real scene, and those 15 stops would be shown as 6 stops on a typical display. The range between the brightest and darkest parts of the output picture is the same for both cameras, because they are both being shown on the same display.

The difference is that the lower dynamic range (9 stops) camera won't have recorded as much into the highlights and shadows, so detail won't be visible there, and so the mid tones of the image are spread over much of the 6 stops of display range. For the 15 stop camera, all 15 stops have to fit into 6, so the mid range is compressed into fewer stops to allow the highlight and shadow stops to fit, and the mid range loses contrast.

You *can* display HDR images on a normal dynamic range display. This is what is happening in the images you see if you google image search for "hdr photography". I think it's a pretty ugly look, as do many people, which is probably why it's not used very often, particularly for video. This is also basically what's happening when you look at log footage on a normal display without the appropriate LUT, and it looks very 'flat' (lacking contrast).
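The arithmetic behind the 9 stop versus 15 stop comparison can be sketched as below. It assumes the camera's range is squeezed evenly into the display's range, which is a simplification (real tone curves are not uniform), and the 6 stop display figure is the guess from the post. The function names are hypothetical.

```python
def display_stops_per_scene_stop(camera_stops, display_stops=6.0):
    """How much display range each recorded stop gets under even compression."""
    return display_stops / camera_stops

def midtone_span_on_display(midtone_stops, camera_stops, display_stops=6.0):
    """Display stops occupied by a band of mid tones of the given width."""
    return midtone_stops * display_stops_per_scene_stop(camera_stops, display_stops)

for camera_stops in (9, 15):
    # The same 5 stops of scene mid tones get less display range
    # the more stops the camera has to fit in around them.
    print(camera_stops, midtone_span_on_display(5, camera_stops))
```

Under these assumptions the same 5 stops of mid tones cover about 3.3 display stops from the 9 stop camera but only 2 from the 15 stop camera, which is the loss of mid-range contrast described above.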

-

Thanks Stuart. With the computer monitor, I'm not talking about filming it, but watching content on it. Any and all 24fps content is going to somehow miss or double frames as it's displayed. And I think 60Hz is a very common refresh rate for computer monitors, probably because of the 60Hz mains in the US and elsewhere.

-

Does anyone have any newer thoughts on this? I'm in the UK and I've always shot 25fps until now. I occasionally shoot stuff that ends up on TV where I'm pretty sure shooting at 25fps is still the best option, but should I shoot stuff that I know will only be seen on the web at 24fps? I have a related question which despite much research I've not been able to get a good answer to. Many computer monitors have refresh rates that aren't multiples of 24 or 25. My 3 monitors are all at 60Hz. This seems to mean I'm never really going to get smooth motion. Why isn't this talked about more online? Am I missing something?
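One way to see the mismatch is to count how many monitor refreshes each source frame is held for. This is a simplified model that assumes a fixed refresh rate, with every refresh showing the most recent frame that is due, and no frame rate conversion or variable refresh. The function name is made up for the example.

```python
def refreshes_per_frame(fps, refresh_hz, frames):
    """Count the display refreshes each of the first `frames` source frames is shown for."""
    counts = []
    for n in range(frames):
        # First refresh at or after the time frame n (and frame n + 1) is due:
        # ceil(n * refresh_hz / fps), done in integer arithmetic.
        start = -(-(n * refresh_hz) // fps)
        end = -(-((n + 1) * refresh_hz) // fps)
        counts.append(end - start)
    return counts

print(refreshes_per_frame(24, 60, 4))  # uneven: frames alternate 3 and 2 refreshes
print(refreshes_per_frame(25, 60, 5))  # uneven as well
print(refreshes_per_frame(25, 50, 3))  # even: every frame gets 2 refreshes
```

In this model neither 24fps nor 25fps divides evenly into 60Hz, so some frames stay on screen longer than others, while 25fps on a 50Hz display is held evenly.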

-

Microsoft's Curved Sensor - Thinking Outside the Box

Mei Lewis replied to Tim Tyler's topic in General Discussion

I'm interested in what the optical character of newly designed lenses for curved sensors would be. How would the bokeh and flare look? Would there be other, perhaps pleasant, aberrations like the non-circular bokeh of anamorphics?

-

I think that's a slightly old-fashioned, hierarchical way of looking at things. The only absolute requirement for movie making is film-makers, film viewers and some way for them to be connected. Based on what he's said, Blomkamp isn't touting for work in any way, he's making his own work, as he wants to. In this area the movie industry is probably some way behind other entertainment, for example Valve becoming their own game distribution platform, bands releasing their own records, comedians making their own specials.

-

What does "+3 diopter" mean here please? I know a diopter is a type of glass lens element, but what is its effect? Does it multiply the focal length by 3? Thanks.

-

how do you archive and store reference images and videos?

Mei Lewis replied to David Schuurman's topic in General Discussion

Lightroom is made to do just this sort of thing. As well as editing photos (and to a limited extent videos), its main purpose is to organise and categorise photos and video. You can add labels, multiple tags or hierarchies of tags, and it deals with huge numbers of files reasonably well.

-

Spectre mixing film and digital

Mei Lewis replied to Alex Birrell's topic in In Production / Behind the Scenes

In what inherent way does digital motion blur look different to film motion blur? Isn't it just down to how long the shutter is open?

-

There's an article on the ASC website where the gaffer on The Dark Knight Rises explains: "For close-up and medium shots of the couple, an Arri LoCaster LED with a 1'x1' soft-box snoot and interchangeable diffusion frames provided eyelight, and 5K tungsten Chimeras provided a soft edge. “We always tried to approach the eyelight from a complimentary angle to the camera,” says Geryak. “If the camera was over someone’s right shoulder, I’d stand over his left shoulder and try to wrap the light from the key side so it looked more natural.”" https://www.theasc.com/ac_magazine/August2012/DarkKnightRises/page1.php

-

Removing the Lens during transpo

Mei Lewis replied to Tony Muna's topic in Camera Assistant / DIT & Gear

There are potential dangers with taking the lens off for transport too, like getting dust on the sensor, which wasn't so much of a problem with film.

-

I've worked on a few smaller productions where people have used a tape measure to pre-measure distances to actors' marks so they can focus to them during a take. From memory (it was a while ago) they measured from the mark on the camera to the actors' mark, so the line of measure is usually off at some angle from the perpendicular to the film plane. I think the distance scales on lenses specify the perpendicular distance between the film plane and the plane of focus. That would typically be shorter than the measured distance. Do focus pullers use trig to figure out the distance they should pull to on the lens, having only measured the direct distance? Or do they measure perpendicular distance?
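The trig in question is only a cosine. The sketch below illustrates the geometry being asked about, taking the post's supposition that the lens scale reads perpendicular distance. It is not a claim about how focus pullers actually work, and the function name and numbers are hypothetical.

```python
import math

def perpendicular_distance(measured, angle_deg):
    """Perpendicular distance from the film plane to the plane of focus,
    given a tape measurement taken at angle_deg off the lens axis."""
    return measured * math.cos(math.radians(angle_deg))

# A 10 ft tape measurement to a mark 20 degrees off axis
print(round(perpendicular_distance(10.0, 20.0), 2))
```

At small angles the difference is slight (about 0.6 ft in 10 ft at 20 degrees), and it grows as the mark moves further off axis.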

-

I didn't put that very well. I didn't mean stagey in a negative way, just that it looks like it was shot on a stage. I definitely didn't mean stylized, because the whole trailer is _very_ stylized. I guess my real question is, assuming they've made the interiors look deliberately like they were shot on a stage/not in a real location, why have they done that? Is it some reference I don't get? Is that how a lot of classic westerns were shot?

-

The stuff in the cabin looks very stagey. A deliberate stylistic choice?

-

The 24-70 Mark 2 is an amazing lens and the flares look great. I wouldn't worry about sharpness, it's designed for 30MP+ still imaging, far higher res requirements than the S4s.