Everything posted by Tomasz Brodecki

-

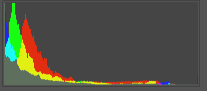

In your other examples, there was shadow detail that deteriorated because of the compressed output. In this example, there was no shadow detail whatsoever. As for the "why": any and every step of the pipeline could have contributed to this result, from a potentially underexposed original negative, through timing, printing and scanning, to the preparation of the digital copy that you're sharing (with its own bit depth and bitrate limitations). Usually, when a copy throws away half the potential dynamic range of the scene, we don't call it "good", but I understand that everything is subjective.

-

Sure, with careful placement and/or a bit of diffusion, the two 300s can be enough. I'm mostly going to advise lighting the background criss-crossed (right light shines on the left part of the background and vice versa), so that you avoid hot spots and provide a more even illumination.

-

It doesn't. You're probably describing output or preview files based on those RAW inputs. As long as you shoot uncompressed or lossless RAW, compressed blocks of pixels do not exist in your image; whether they appear depends solely on your export settings, and in that regard you should follow Phil's advice above.

-

It's 2021 and digital capture still looks like sh

Tomasz Brodecki replied to Karim D. Ghantous's topic in General Discussion

I suppose Karim was referring to the clipped highlight on the actual tail light, which turned white-yellow-red, not the red ghosting above it. What's worse, after grading (and "extinguishing" the blown-out part), the white area turned slightly cyan.

SONY FS5 1920X1080 50p - What's wrong???

Tomasz Brodecki replied to Alexandros Pissourios's topic in Camera Operating & Gear

That's exactly the case if you're going for an effective 180° shutter angle, as I've said above.

SONY FS5 1920X1080 50p - What's wrong???

Tomasz Brodecki replied to Alexandros Pissourios's topic in Camera Operating & Gear

No, to the recording speed: e.g. shooting 1/200th at 100fps or 1/100th at 50fps and slowing it down (so that no frame is skipped) results in the same ratio of exposure time to frame interval as shooting 1/50th at 25fps. And it looks like that's exactly what you achieved with the slowed-down versions of your videos. I still fail to see the problem. The footage is definitely shaky, as in, unstabilized, but the shutter angle aspect looks alright to me (once slowed down and not frame-skipping).

SONY FS5 1920X1080 50p - What's wrong???

Tomasz Brodecki replied to Alexandros Pissourios's topic in Camera Operating & Gear

I don't see the issue with the slowed-down version; can you describe what exactly "looks shit" to you in this clip? Shutter angle math remains the same between 1:1 (real-time) recording:playback and slow motion, as long as you remember to conform your footage to the playback frame rate (so that every frame is played and none is skipped).

SONY FS5 1920X1080 50p - What's wrong???

Tomasz Brodecki replied to Alexandros Pissourios's topic in Camera Operating & Gear

The first clip you embedded looks alright, just what 50fps slowed down to 25 should look like when shot at 1/100 (so you're left with a 180° shutter angle). With the two shorter clips, it looks like you forgot to slow them down, so at 25fps you're only watching every other frame of your 50fps recording (skipping the rest), and that results in the "juddery" 90° shutter angle look.
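The shutter-angle arithmetic from this thread can be sketched in a few lines. This is only an illustration: the function names and the `conformed` flag are made up for the example, and it assumes the simple model where the angle is 360° times the exposure time divided by the scene time that each displayed frame represents.

```python
from fractions import Fraction


def shutter_angle(exposure_s, fps):
    """Shutter angle in degrees for a given exposure time and frame rate."""
    return 360 * exposure_s * fps


def effective_shutter_angle(exposure_s, record_fps, playback_fps, conformed):
    """Angle as perceived on playback (hypothetical helper).

    conformed=True:  every recorded frame is played (slow motion), so each
                     displayed frame stands for 1/record_fps of scene time.
    conformed=False: real-time playback with frames skipped, so each
                     displayed frame stands for 1/playback_fps of scene time.
    """
    reference_fps = record_fps if conformed else playback_fps
    return shutter_angle(exposure_s, reference_fps)


# 1/100 s at 50fps, slowed down to 25fps: the full 180 degrees.
slowed = effective_shutter_angle(Fraction(1, 100), 50, 25, conformed=True)

# Same footage dropped on a 25fps timeline without slowing it down:
# every other frame is skipped, which leaves 90 degrees.
skipped = effective_shutter_angle(Fraction(1, 100), 50, 25, conformed=False)

print(slowed, skipped)
```

The same function gives 180° for 1/200 at 100fps once conformed, and for 1/50 at 25fps played in real time.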

Anybody know what tripod is being used here?

Tomasz Brodecki replied to Blake Treharne's topic in Grip & Rigging

What Ed said. It's a newer Manfrotto 190, too large for BeFrees, too small for a 055. And the head on the bottom one is an older model of the 494RC2, not sure about the fluid head (although it's not a Manfrotto Live). I assume that on top of it is an iPhone, with what looks like Moondog Labs' anamorphic adapter.

A scene solely with red lighting

Tomasz Brodecki replied to Viggo Söderberg's topic in Lighting for Film & Video

Yes, there's always that third option, but it's not exactly the best one for the planet.

Just to be clear: we're going to need more light for any scene intended for a single-color result compared to an equivalent full-color one anyway (if we're going for a believable look of a single-color light source, because of the values that we will be subtracting), but by using white we're left with more choices.

There's a time and place for everything, and unless you have the colorist on set and/or performing final grading on the entire footage as you shoot, you will be making the ultimate color decisions in post-production anyway, so the time on set is probably better spent focusing on the task at hand.

The choice is up to you (or your DIT or your colorist, whoever prepares your LUT) as to how much of the blue and green values you want turned into reds and how much filtered out, or any specific point in between.

A scene solely with red lighting

Tomasz Brodecki replied to Viggo Söderberg's topic in Lighting for Film & Video

Because of the Bayer filter array in front of your sensor, if you shoot using only blue or only red light, the effective sensitivity of the sensor is reduced by 75%, so in order to compensate for that difference, you'd have to bump your gain, along with the accompanying noise, by two stops (for green, that's 50% or one stop). In other words, if you're going for a monochromatic result and you're shooting digitally, use as much of the spectrum as you can, shoot with a red LUT if you can, and save the final color decisions for grading.
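As a rough check of those numbers, here is a small sketch. It assumes a standard RGGB mosaic (one red, two green and one blue photosite per 2×2 block) and perfectly separated color channels, which real filters are not, so treat the result as an upper bound on the loss. The table and function name are only for illustration.

```python
import math

# Share of photosites behind each filter color in an RGGB Bayer block.
BAYER_SHARE = {"red": 0.25, "green": 0.50, "blue": 0.25}


def stops_lost(channel):
    """Stops of sensitivity lost when only one color of light hits the sensor."""
    return math.log2(1 / BAYER_SHARE[channel])


for channel in ("red", "green", "blue"):
    print(f"{channel}: {stops_lost(channel):.0f} stop(s)")
```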

Do you guys use lightmeters?

Tomasz Brodecki replied to DanielSydney's topic in Lighting for Film & Video

I kind of expect the gaffer to be equipped with a light meter at all times, mostly so that he can take measurements without walking back and forth to the camera, but for me, from the image acquisition point of view, a waveform monitor is the best light meter. And as for location scouting, everything there is to know about the luminance of the scene, you can learn from the scout photo exposure settings (after all, a DSLR or a mirrorless camera is a perfectly capable metering tool in itself, and I don't mean just its built-in metering that returns the f-stop value for a given shutter time and ISO, but also the millions of per-photosite measurements that it stores in every single photo).
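To illustrate reading scene brightness off a scout photo's settings, here is a minimal sketch using the standard exposure value formula, normalized to ISO 100. The function name is made up for the example, and the result only reflects the scene if the photo was exposed correctly in the first place.

```python
import math


def ev100(f_number, shutter_s, iso):
    """Exposure value normalized to ISO 100, from a photo's exposure settings."""
    return math.log2(f_number ** 2 / shutter_s) - math.log2(iso / 100)


# Two hypothetical scout photos of the same location.
noon = ev100(f_number=8, shutter_s=1 / 125, iso=100)
dusk = ev100(f_number=2.8, shutter_s=1 / 60, iso=800)

print(f"noon: EV {noon:.1f}, dusk: EV {dusk:.1f}, "
      f"difference: {noon - dusk:.1f} stops")
```

The difference between two such values is the number of stops you would need to make up with light, aperture, shutter or gain.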

This was just sent my way, I think you guys may enjoy it as much as I have: And for those of you that speak German (or are willing to suffer through auto-translated subtitles), here's an interview with von BERG:

- 23 replies

Tagged with: virtual production, unreal engine (and 3 more)

-

For Oculus, there's https://creator.oculus.com/mrc/ and here's the guide for LIV, which is sort of its SteamVR counterpart, although developed independently and available as an add-on tool: https://help.liv.tv/hc/en-us

I'm sorry about the double posts; unfortunately, this forum doesn't allow edits (or even addendums) after more than 5 minutes.

-

The Vive Tracker that you linked serves a similar purpose in HTC/Steam's inside-out tracking solution (Vive headsets, controllers and trackers are equipped with light sensors that monitor where in the world they are by receiving flashes of light from the oscillating illuminators, called Lighthouses, which you place in the corners of your space). Vive Tracker is exactly what I would be using if we were in the HTC hardware ecosystem. In our case, since we're using first-generation Oculus hardware, the tracking works outside-in (cameras/light sensors are placed stationary in the corners of your space, while the controllers and headsets blink their unique identifiers using IR LEDs), so in a very similar way, just in the opposite direction.

That is correct, with the caveat that the computer-generated image has to be rendered from exactly the viewport (position + rotation + field of view) that matches the position of the real-world camera (establishing which is the purpose of the camera-tracking solutions described above). If I mess up the calibration between the two, it results in less convincing parallax between real-life talent and VR-based objects, like at 1:53 in the video below:

-

We use the same tracking system that monitors the position (and rotation) of the headset and controllers that you see Michalina use in the video. In addition to those three objects, we add a fourth one (a controller from another set), that is fixed to the real-world recording camera, which is then recognized by the tracking sensors, providing its own position and rotation data in three dimensions, with no need for trackers or reference objects in the scene.
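Tracking covers position and rotation; the third part of the viewport match mentioned in this thread, the field of view, has to come from the lens and sensor. A minimal sketch of that calculation follows, assuming a simple rectilinear lens focused at infinity with no distortion or sensor crop. The sensor size and focal length are example values, not our actual setup.

```python
import math


def field_of_view_deg(sensor_dim_mm, focal_length_mm):
    """Angle of view across one sensor dimension, in degrees."""
    return math.degrees(2 * math.atan(sensor_dim_mm / (2 * focal_length_mm)))


# Example values only: a 36 x 24 mm sensor behind a 24 mm lens.
SENSOR_WIDTH_MM = 36.0
SENSOR_HEIGHT_MM = 24.0
FOCAL_LENGTH_MM = 24.0

horizontal = field_of_view_deg(SENSOR_WIDTH_MM, FOCAL_LENGTH_MM)
vertical = field_of_view_deg(SENSOR_HEIGHT_MM, FOCAL_LENGTH_MM)

print(f"horizontal: {horizontal:.1f} deg, vertical: {vertical:.1f} deg")
```

Which of the two angles the virtual camera expects depends on the engine (as far as I know, Unity's camera field of view is the vertical one), so it's worth checking before copying a number over.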

-

You can say that. Specifically, our green bedroom wall, using a yoga mat as a backdrop extension. Plus, I had those two slim 1m LED lights made to make the real-world video match the in-game lighting a bit better.

I forgot to mention that even though the camera is tracked in 6 degrees of freedom by the computer (so moving it will result in a corresponding perspective shift in the plate), we haven't recorded a single video with a moving camera yet, because of practical limitations, but we're gearing up to finally do something about it.

-

Fun to see someone else approaching the mixture of physical and virtual reality from a different direction than we have. What you see below were my first experiments with moving pictures (having worked before both with Unity and 360° photography, so all the needed equipment and software was already at hand):

Almost every video on this channel is an attempt at combining Unity-based real-time rendered surroundings with real-world capture, performed in a very DIY way, originally only to prove that it could be done (we did not expect the interest the project would gather).

-

Arri Alexa classic vs the XT: major differences?

Tomasz Brodecki replied to omar robles's topic in ARRI

All ARRI cameras from the last 10 years use the same ALEV III image sensor (in a single, double or triple configuration, for S35, LF and 65), so you should not expect differences in the image itself between two ARRI cameras of the same format, especially if you record to ARRIRAW and do not perform the actual image processing in-camera. https://www.arri.com/en/learn-help/technology/alev-sensors

Those are apples and oranges; there are separate uses for small cameras (with their portability/low mass/usefulness in tight spaces) and large cameras (with complete control laid out on the body, multiple robust connectors, efficient cooling, displays, battery life etc.).

Because they don't make a single product from the latter category above, there is nothing to advance to while maintaining compatibility, unlike what you can do with Sony (E) and Canon (EF) systems. And as for Z-mount lenses, you can't use them on cinema cameras (unlike F-mount lenses), because of the 16 mm flange focal distance.

I totally agree with this. Not to mention that even movies shot with LF nowadays end up using T-stops that negate the potential advantage (or disadvantage) in depth of focus.
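On the flange distance point: a lens can only be adapted with a plain (glassless) adapter to a body whose flange focal distance is shorter than the one the lens was designed for, and the adapter makes up the difference. A rough sketch, using the commonly published figures for these four mounts; the helper names are made up, and the check ignores throat diameter and electronic coupling.

```python
# Commonly published flange focal distances, in millimeters.
FLANGE_MM = {
    "Nikon Z": 16.0,
    "Sony E": 18.0,
    "Canon EF": 44.0,
    "Nikon F": 46.5,
}


def adapter_thickness_mm(lens_mount, body_mount):
    """Thickness a glassless adapter would need; negative means impossible."""
    return FLANGE_MM[lens_mount] - FLANGE_MM[body_mount]


def can_adapt(lens_mount, body_mount):
    """True if the lens can reach infinity focus on the body via a spacer."""
    return adapter_thickness_mm(lens_mount, body_mount) > 0


for lens in ("Nikon F", "Nikon Z"):
    for body in ("Sony E", "Canon EF"):
        print(f"{lens} lens on {body} body: {can_adapt(lens, body)}")
```

With these figures an F-mount lens fits both E and EF bodies, while a Z-mount lens fits neither.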

-

Hi there, I also moved to DaVinci Resolve last year, mostly because of the built-in chroma-keying capabilities, and now it's the only NLE I use, both for clients' projects and our mixed reality channel. As for learning its basics, I can't recommend this thing enough: https://documents.blackmagicdesign.com/UserManuals/DaVinci-Resolve-16-Beginners-Guide.pdf

My fiancée has just gone through the first two lessons and she definitely picked up the Cut page much quicker than I did the learn-as-I-go way.

-

Starting video head for DSLR - flat base or bowl

Tomasz Brodecki replied to Nick Burchell's topic in Camera Operating & Gear

Hi there, I also come from a photography background, so flat base was important for me in my first fluid head. Since all my existing heads and tripods were flat base Manfrotto products, I prioritized compatibility, with no regrets so far.

Sure, the two solutions rely on different habits when it comes to leveling, but I suppose you already have some similar to mine, so it's not like using a flat-base tripod is currently a problem for you. And even though I'm currently shopping for a bowl tripod, I'm going to continue using my flat base heads (since you can easily adapt flat-to-bowl, which isn't that easy the other way round).

Neither of the models you mention is a bad head, neither will stand in your way of achieving smooth motion (as I'm sure you've already found out during the week you've spent with your 608). Since you mention that Ikan riser, should I assume that you already own a flat-base slider?

In time you'll probably start seeing the cases where you would benefit from a bowl setup (probably around the same time that the first annoyances of shooting with small cameras will show up), but for dual-purpose use, I'd still stay with flat bases. It might be an interesting topic to revisit after some time, when gearing up for a heavier camera or once video work dominates your shooting; for now it seems like you're already set up rather well for what you're going to do with your cameras.

That's very interesting, thanks for the heads-up; I've been using it for most of 2020 without this showing up either in external or internal, and I was shooting outside at night, at 2°C, just this Sunday (you actually made me re-check my files, but they look alright, at least in that regard). I'll keep my eye out for that and, let me reiterate, I only recommend Nikon hardware to long-time Nikon owners, since as motion picture equipment, this product line is a dead end.